Common Reputation Models

Introduction

Now we're going to start putting our reputation primitives to work. Let's look at some actual reputation models to understand how the claims, inputs & processes described in the last chapter can be combined to track an entity's reputation.

In this chapter, we'll name and describe a number of simple, broadly deployed reputation models: Vote to Promote, Simple Ratings, Points, etc. These models should be familiar to you; they're the bread-and-butter of today's social Web. Understanding a little about how these models are constructed should help you identify them when you see them in the wild. And thinking about the design behind these models will become critical in the latter half of this book, when we start to cover the design and architecture of your own reputation models.

Simple Models

At their very simplest, some of the models we present below are really no more than fancified reputation primitives: counters, accumulators, and the like. Later in this chapter (Chap_5-Combining_the_Simple_Models), we'll show you how to combine and expand on these simple models to build more realistic, applied, real-world models.

It's important to note, however, that just because these models are simple doesn't mean they aren't eminently useful. Variations on Favorites & Flags, Voting, Ratings & Reviews, and Karma are abundant on the Web, and many sites find that, at least in the beginning

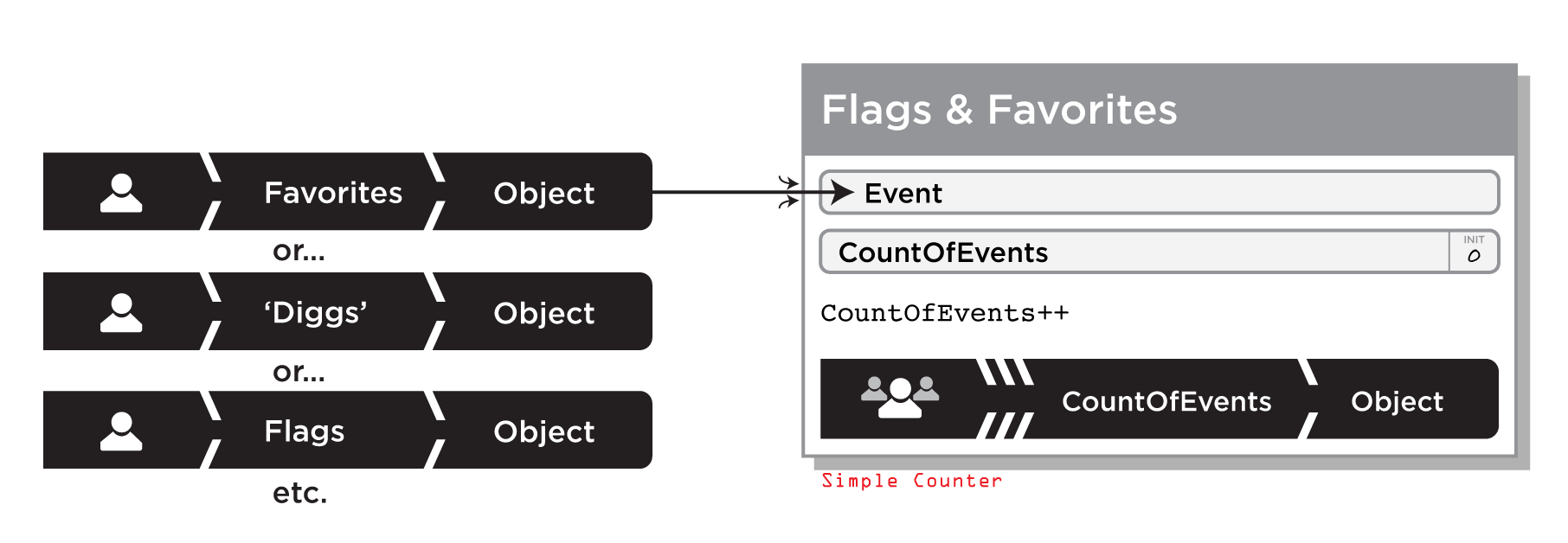

Favorites and Flags

This is a model that excels at identifying outliers in a collection of entities. These outliers may be exceptional either for their perceived quality or for their lack of it. The general idea is this: give your community some controls for identifying or calling attention to items of exceptional quality (or exceptionally low quality).

These controls may take the form of explicit votes for a reputable entity, or they may be more subtle implicit indicators of quality (such as a user bookmarking a piece of content or sending it to a friend). Keep a count of the number of times these controls are used: these events form the initial inputs into the system, and the entities' reputations are tabulated by counting them.

In their simplest form, Favorites & Flags reputation models can be implemented as simple counters. (When you start to combine them into more complex models, you'll probably need the additional flexibility of a reversible counter.)
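As a rough illustration of the two primitives mentioned above, here is a minimal Python sketch, assuming in-memory storage. The class names `SimpleCounter` and `ReversibleCounter` are hypothetical, not taken from any library. The reversible variant remembers which source contributed each event, so a single user's input can later be undone.

```python
from collections import defaultdict


class SimpleCounter:
    """Counts favorite or flag events per target; events cannot be undone."""

    def __init__(self):
        self.counts = defaultdict(int)

    def add(self, target_id):
        self.counts[target_id] += 1
        return self.counts[target_id]


class ReversibleCounter:
    """Counts events per target while remembering each event's source,
    so that one source's input can later be reversed."""

    def __init__(self):
        self.sources = defaultdict(set)  # target_id -> ids of contributing sources

    def add(self, source_id, target_id):
        # Using a set also means each source is counted at most once per target.
        self.sources[target_id].add(source_id)
        return len(self.sources[target_id])

    def remove(self, source_id, target_id):
        self.sources[target_id].discard(source_id)
        return len(self.sources[target_id])

    def count(self, target_id):
        return len(self.sources.get(target_id, ()))


favorites = ReversibleCounter()
favorites.add("alice", "photo-1")
favorites.add("bob", "photo-1")
favorites.remove("alice", "photo-1")  # alice un-favorites the photo
print(favorites.count("photo-1"))  # 1
```

The extra cost of the reversible version is storage: it keeps one entry per source rather than a single integer per target.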

Vote to Promote

This Favorites & Flags variant has been popularized by crowd-sourced news sites such as Digg.com, Reddit, and Yahoo! Buzz. In a vote-to-promote system, the user wants to promote a particular piece of content in a community pool of submissions. This promotion takes the form of a vote for that item, and items with more votes rise in the rankings to be displayed with more prominence.

Favorites

Counting the number of times that members of your community 'favorite' something can be a powerful method for tabulating content reputation. This method provides a primary value (see Chap_7-Sidebar_Provide_a_Primary_Value) to the user: favoriting an item gives the user persistent access to it and the ability to save and retrieve it later. And, of course, it provides a secondary value to the reputation system as well.

Report Abuse

You hate to have to deal with it, but, unfortunately, monitoring and policing abusive content will be a significant function of your reputation system. Practically speaking, 'Flagging' bad content is not that far removed from 'Favoriting' the good stuff. The most basic type of reputation model for abuse moderation involves keeping track of the number of times the community has flagged something as abusive. Typically, once a certain threshold is reached, either your application or human agents (staff) will act upon the content accordingly.
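The threshold behavior described above might look like the following sketch. The threshold value of 3 and the choice to hide content (rather than, say, queue it for staff review) are hypothetical; a real site would tune both from its own data.

```python
from collections import defaultdict

FLAG_THRESHOLD = 3  # hypothetical value; tune from real data


class AbuseReports:
    def __init__(self, threshold=FLAG_THRESHOLD):
        self.threshold = threshold
        self.reporters = defaultdict(set)  # content_id -> ids of users who flagged it
        self.hidden = set()                # content awaiting staff action

    def flag(self, reporter_id, content_id):
        """Record at most one report per user; hide content at the threshold."""
        self.reporters[content_id].add(reporter_id)
        if len(self.reporters[content_id]) >= self.threshold:
            self.hidden.add(content_id)
        return content_id in self.hidden


reports = AbuseReports()
reports.flag("u1", "post-9")
reports.flag("u1", "post-9")  # a duplicate report from the same user is ignored
reports.flag("u2", "post-9")
print(reports.flag("u3", "post-9"))  # True: third distinct reporter
```

Counting distinct reporters rather than raw clicks keeps one angry user from single-handedly triggering the threshold.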

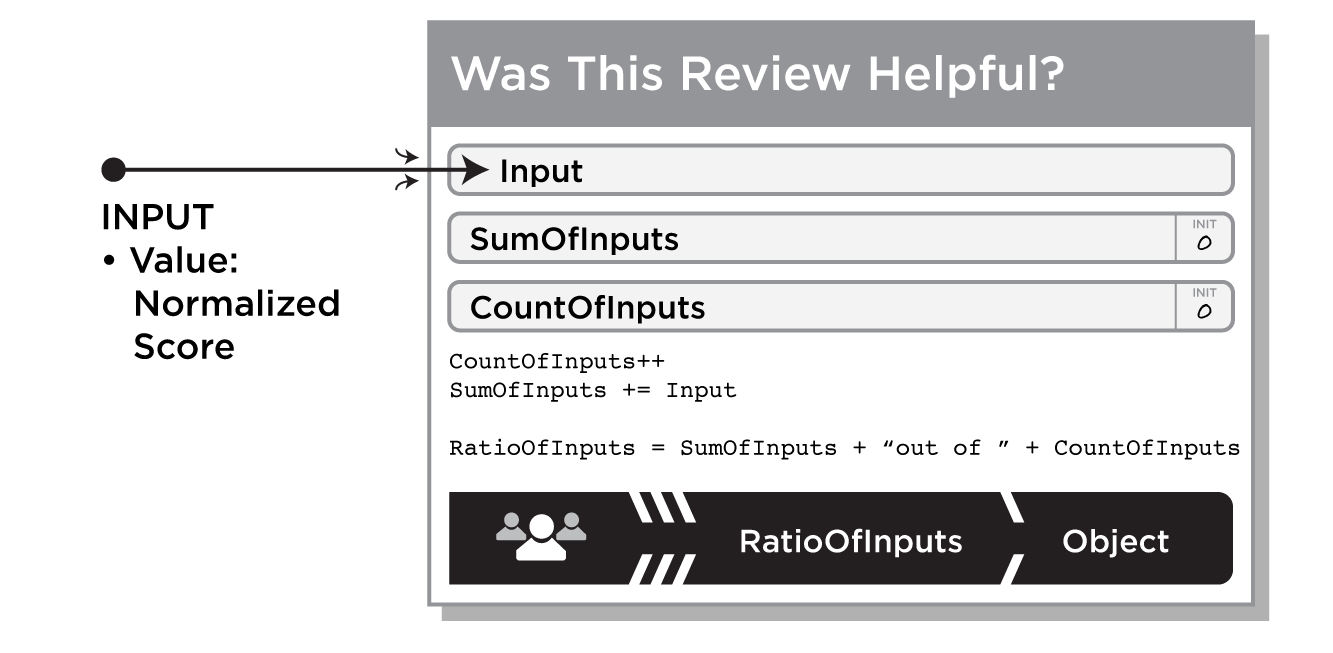

Voting

If you let your users choose one of several options or opinions to express about something, then you are giving them a vote. A very common use for this model (and you'll see it referenced throughout this book) is to let community members vote on something's usefulness. (Or accuracy. Or appeal.) In these cases, it is often more convenient to store that reputation statement as part of the reputable entity it applies to: this makes it easier to fetch and display a Review's 'Helpful Score', for instance. (See Figure_2-7.)
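A minimal sketch of storing vote tallies directly on the entity being voted on, as suggested above. The `Review` class and its field names are hypothetical illustrations, not a prescribed schema. Each voter's latest choice is remembered so that changing a vote does not double-count.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class Review:
    text: str
    # Tallies live on the review itself, so showing its "Helpful Score"
    # needs no separate lookup.
    votes: Counter = field(default_factory=Counter)
    voters: dict = field(default_factory=dict)  # voter_id -> option chosen

    def vote(self, voter_id, option):
        """Record or change one voter's choice among several options."""
        previous = self.voters.get(voter_id)
        if previous is not None:
            self.votes[previous] -= 1  # undo this voter's earlier choice
        self.voters[voter_id] = option
        self.votes[option] += 1


review = Review("Great battery life.")
review.vote("ann", "helpful")
review.vote("ben", "not helpful")
review.vote("ben", "helpful")  # ben changes his mind
print(review.votes["helpful"], review.votes["not helpful"])  # 2 0
```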

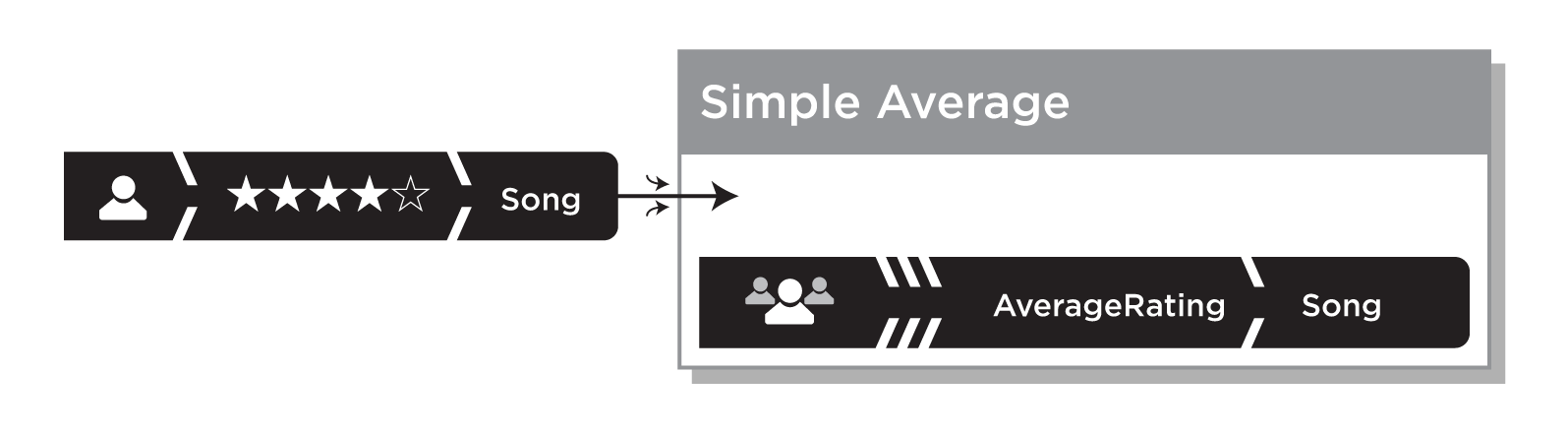

Ratings

When you give your users the ability to express an explicit opinion about the quality of something, you typically do so through a Ratings model. There are a number of different scalar-value ratings: Stars, Bars, HotOrNot, or a 10-point scale. (We'll discuss how to select the right one for your needs in Chap_7-Choosing_Your_Inputs.) Ratings are gathered from multiple individual users and rolled up into a Community Average score for that object.
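The Community Average roll-up can be sketched as a reversible average that keeps one rating per rater, so ratings can be revised or removed (for example, when a rater turns out to be a spammer). The class name `ReversibleAverage` is hypothetical. At scale you would more likely store a running sum and count per object, but keeping the individual ratings makes reversal easy to follow.

```python
class ReversibleAverage:
    """Community Average with at most one rating per rater, revisable and removable."""

    def __init__(self):
        self.ratings = {}  # rater_id -> rating value

    def rate(self, rater_id, value):
        self.ratings[rater_id] = value  # a new rating replaces the rater's old one

    def remove(self, rater_id):
        self.ratings.pop(rater_id, None)

    def average(self):
        if not self.ratings:
            return None  # no ratings yet; don't report a misleading 0
        return sum(self.ratings.values()) / len(self.ratings)


stars = ReversibleAverage()
stars.rate("ann", 5)
stars.rate("ben", 3)
stars.rate("spammer", 1)
stars.remove("spammer")
print(stars.average())  # 4.0
```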

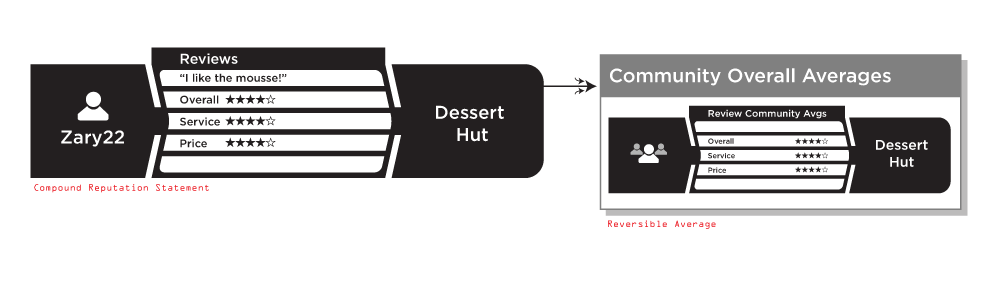

Reviews

Some ratings are most effective when they travel together. More complex reputable entities frequently require more nuanced reputation models, and the Ratings & Reviews model allows users to express a variety of reactions to an object. While each rated facet could be stored and evaluated as its own specific reputation, semantically that wouldn't make much sense: it is the Review, in toto, that is the primary unit of interest.

In the Reviews model, the user expresses a series of ratings & provides one or more free-form text opinions. Each individual facet of a review feeds into a Community Average.
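Here is a sketch of how each facet of a review might feed its own Community Average. The facet names (`overall`, `service`, `price`) and the rule that only `overall` is required are hypothetical choices for illustration. Because some facets are optional, each facet keeps its own count.

```python
from collections import defaultdict

REQUIRED_FACETS = {"overall"}  # hypothetical: other facets are optional


class FacetAverages:
    """Keeps a separate Community Average for each rated facet of a review."""

    def __init__(self):
        self.totals = defaultdict(float)
        self.counts = defaultdict(int)

    def add_review(self, ratings):
        missing = REQUIRED_FACETS - set(ratings)
        if missing:
            raise ValueError(f"missing required ratings: {sorted(missing)}")
        for facet, value in ratings.items():
            self.totals[facet] += value
            self.counts[facet] += 1  # optional facets end up with smaller counts

    def average(self, facet):
        n = self.counts.get(facet, 0)
        return self.totals[facet] / n if n else None


averages = FacetAverages()
averages.add_review({"overall": 4, "service": 5})
averages.add_review({"overall": 2})
print(averages.average("overall"), averages.average("service"))  # 3.0 5.0
```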

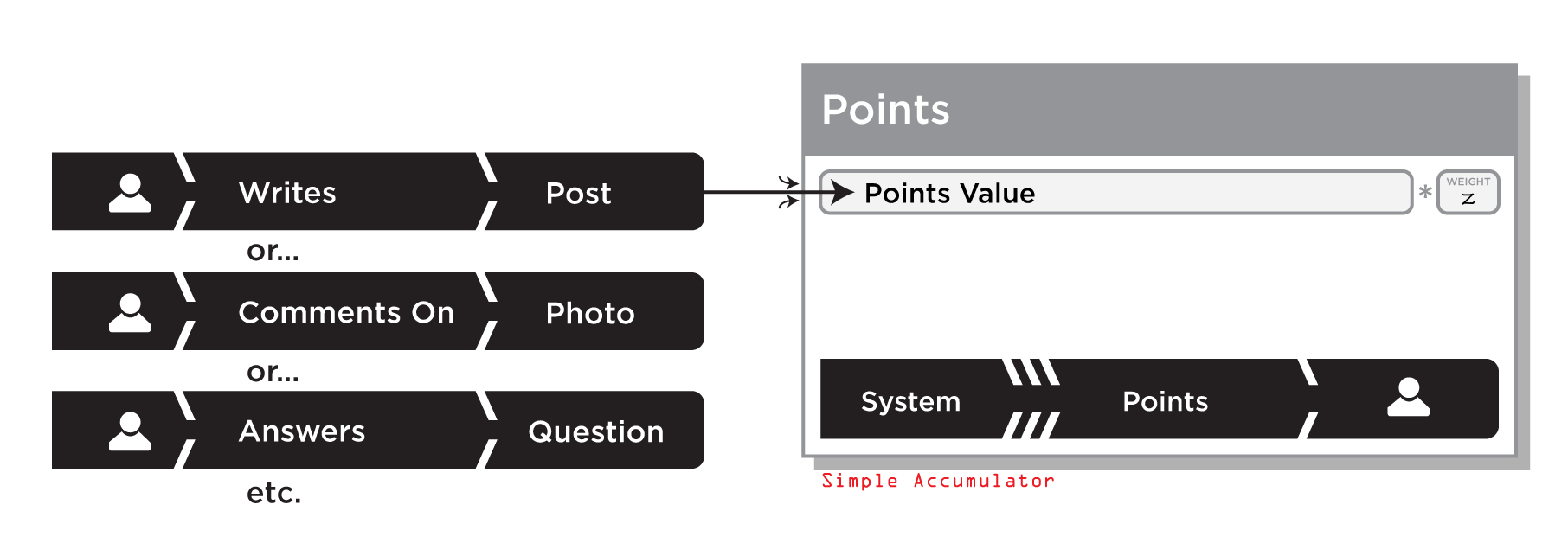

Points

There are times when you may want a very specific and granular accounting of user activity on your site. The Points model provides just such a facility. With points, your system counts up the hits, actions, and other activities that your users engage in, and keeps a running sum of the awards.
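A minimal points accumulator might look like this sketch. The action names and point values in `POINT_VALUES` are hypothetical. As the dangers below suggest, choosing these numbers is the hard part; the code is trivial.

```python
from collections import defaultdict

# Hypothetical point values per action; choosing them is a design decision.
POINT_VALUES = {"post": 10, "comment": 2, "rating": 1}


class PointsLedger:
    def __init__(self, point_values=POINT_VALUES):
        self.point_values = point_values
        self.totals = defaultdict(int)

    def record(self, user_id, action):
        """Award the fixed value for an action and return the user's running sum."""
        self.totals[user_id] += self.point_values.get(action, 0)
        return self.totals[user_id]


ledger = PointsLedger()
ledger.record("carol", "post")
ledger.record("carol", "comment")
print(ledger.totals["carol"])  # 12
```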

This is a tricky model to get right. In particular, you face two dangers:

- Tying inputs to point values almost forces a certain amount of transparency onto your system. It is hard to reward activities with points without also communicating to your users what those relative point values are. (See Chap_5-Keep_Your_Barn_Door_Closed .)

- You risk unduly influencing certain behaviors over others: users (some of them, at least) will almost certainly make points-based decisions about which actions they'll take.

Understand that there are significant differences between points awarded for reputation purposes, and monetary points that you may dole out to users as currency. The two are frequently confounded, but reputation points should not be spendable.

If users must actually surrender part of their own intrinsic value in order to obtain goods or services, then you will be punishing your best users, and your system will quickly lose track of people's relative 'worth.' Who are the users who are actually valued and valuable contributors? And who is just a good 'hoarder' who never spends the points allotted to them?

Far better to link the two systems but allow them to remain independent of each other: a currency system for your game or site should be orthogonal to your reputation system. Regardless of how much currency changes hands in your community, each user's underlying intrinsic karma should be allowed to grow or decay uninhibited by the demands of commerce.
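One way to keep the two systems linked but independent is to give each account two separate balances, as in this sketch. The `Account` class and its method names are hypothetical. A single action may feed both balances, but spending touches only the currency.

```python
from dataclasses import dataclass


@dataclass
class Account:
    karma: int = 0     # reputation points: earned, never spent
    currency: int = 0  # spendable balance, tracked independently

    def earn(self, karma_points, coins=0):
        # One action may feed both systems, but each keeps its own balance.
        self.karma += karma_points
        self.currency += coins

    def spend(self, coins):
        if coins > self.currency:
            raise ValueError("insufficient currency")
        self.currency -= coins  # spending never reduces karma


acct = Account()
acct.earn(karma_points=50, coins=50)
acct.spend(30)
print(acct.karma, acct.currency)  # 50 20
```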

Karma

Karma is reputation for users. In Chapter 2 (Chap_2-Mixing_Models_adding_Karma), we explained that karma is usually used in support of other reputation models to track or create incentives for user behavior. All of the complex examples later in this chapter (Chap_5-Combining_the_Simple_Models) generate and/or use karma to help calculate a quality score for other purposes, such as search ranking, content highlighting, or deciding who's the most reputable provider.

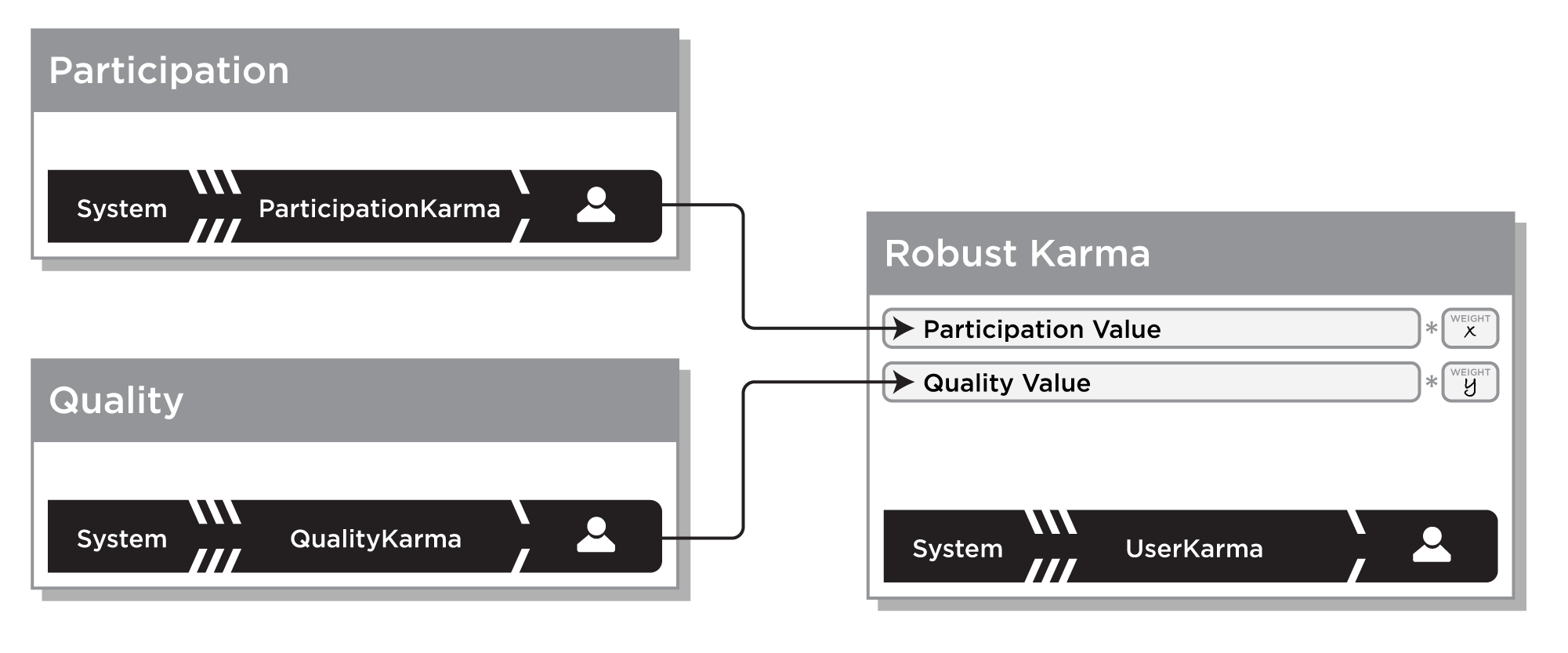

There are two primitive forms of karma: those that measure the amount of user Participation and those that measure the Quality of contributions. When these types of karma are combined, we refer to the model as robust: because it includes both types of measures, the highest-scoring users are both active and producing the best content.

Participation Karma

Counting socially and/or commercially significant events by content creators is probably the most commonly seen type of Participation Karma. Often this is implemented as a point system (Chap_5-Points): each action is worth a fixed number of points, and the points accumulate. The model for this karma looks exactly like Figure_5-5, where the input event represents the number of points for the action and the Source of the activity becomes the Target of the karma.

There is also Negative Participation Karma, which counts how many bad things a user does. Some people call this Strikes, in reference to the three-strikes rule of American baseball. Again, the model is the same; only the application's interpretation of a high score is inverted.
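A sketch of both kinds of Participation Karma side by side. The action names and their values are hypothetical, and the three-strike limit simply borrows the baseball analogy. Note that the event's source user becomes the karma's target, and that the application, not the model, decides what a high strike count means.

```python
from collections import defaultdict

# Hypothetical per-action values.
GOOD_ACTIONS = {"review_written": 5, "photo_uploaded": 3}
BAD_ACTIONS = {"post_removed_for_abuse": 1}


class ParticipationKarma:
    def __init__(self):
        self.participation = defaultdict(int)
        self.strikes = defaultdict(int)  # Negative Participation Karma

    def on_event(self, source_user, action):
        # The Source of the activity becomes the Target of the karma.
        if action in GOOD_ACTIONS:
            self.participation[source_user] += GOOD_ACTIONS[action]
        elif action in BAD_ACTIONS:
            self.strikes[source_user] += BAD_ACTIONS[action]

    def is_out(self, user, limit=3):
        # Same counting model; the application interprets a high count as bad.
        return self.strikes[user] >= limit


karma = ParticipationKarma()
karma.on_event("dana", "review_written")
karma.on_event("dana", "post_removed_for_abuse")
print(karma.participation["dana"], karma.strikes["dana"])  # 5 1
```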

Quality Karma

Quality Karma models, such as eBay's Merchant Feedback (Chap_5-eBay_Merchant_Feedback_Karma), are concerned solely with the quality of the user's contributions. In these cases, the number of contributions is meaningless without knowing whether they were good or bad for business. The best Quality Karma scores are always calculated as a side effect of other users evaluating the contributions of the target. In the eBay example, a successful auction bid is the subject of the evaluation, and the results roll up to the merchant. No transaction, no evaluation. For a detailed discussion of this requirement, see Karma is Complex, Chap_8-Displaying_Karma. Look ahead to Figure_5-W, the diagram of Ratings and Reviews with Karma, for an illustration of this model.

Robust Karma

When needed, Quality Karma and Participation Karma can be mixed into one score representing the combined value of a user's contributions. Each application decides how much weight each component gets in the final calculation. Often these combined karma scores are not displayed to users but are used only internally, for ranking, for highlighting or attention, and as search-ranking influence factors; see Chap_5-Keep_Your_Barn_Door_Closed later in this chapter for common reasons for this secrecy. But even when displayed, robust karma has the advantage of encouraging users both to contribute the best stuff (as evaluated by their peers) and to do it often.

When negative factors are mixed into robust karma, it is particularly useful for customer care staff - both to highlight users that have become abusive or are decreasing content value, and to potentially provide an increased level of service in the case of a service event. This karma helps find the best of the best and the worst of the worst.
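A weighted mix like the one described above can be sketched in a few lines. The weights are hypothetical (each application picks its own), and the sketch assumes every component has already been normalized to the range 0.0 to 1.0.

```python
def robust_karma(participation, quality, negative=0.0,
                 w_participation=0.3, w_quality=0.7, w_negative=1.0):
    """Weighted mix of karma components, clamped to [0, 1].

    Inputs are assumed pre-normalized to [0, 1]; all weights are hypothetical.
    """
    for value in (participation, quality, negative):
        if not 0.0 <= value <= 1.0:
            raise ValueError("components must be normalized to [0, 1]")
    score = w_participation * participation + w_quality * quality
    score -= w_negative * negative  # negative factors pull the score down
    return max(0.0, min(1.0, score))


print(round(robust_karma(participation=0.5, quality=0.5), 2))  # 0.5
print(round(robust_karma(participation=1.0, quality=0.2), 2))  # 0.44
print(round(robust_karma(participation=1.0, quality=1.0, negative=0.6), 2))  # 0.4
```

Weighting quality above participation, as here, keeps a prolific but mediocre contributor from outranking a less active but excellent one; the reverse choice is equally valid for a site that mainly wants to reward activity.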

Combining the Simple Models

The simple models earlier in this chapter are not enough on their own to demonstrate a typical deployed large-scale reputation system in action. Just as the Ratings and Reviews pattern is built by recombining the simple processes from Chapter_4, most reputation models combine multiple reputation patterns into one complex system.

We present these models for understanding, not for wholesale copying. If we impart one message from this book, we hope it is this: reputation is highly contextual, and what works well in one context will almost inevitably fail in many others. Trying to copy any one of these models (Flickr, eBay, or Yahoo! Movies) too closely would be stupid! Because…

- These models are inextricably linked to the applications that embed them. Every input is tied to a specific application feature (which itself depends on a specific object model and set of interactions.)

- So to truly copy these approaches, you'll need to wholesale-copy large parts of the interfaces and business logic from the applications that embed them. (Now that doesn't sound so bright, does it?)

- The entire second half of this book attempts to teach you how to design a system specific to your product and context. Trust us: you'll see better results for your application if you learn from these models and then set them aside.

User Reviews with Karma

Probably the most common reason a simple reputation model, such as Ratings and Reviews, eventually becomes more complex is success: as the application grows, it becomes obvious that some users are better than others at producing high-quality reviews, which significantly increase the value of the site to end users and to the company's bottom line. So the company wants to find ways to recognize these contributors, increase the search-rank value of their reviews, and generally provide incentives for this value-generating behavior. Adding a Karma reputation model to the system is a common approach.

The simplest way to introduce a quality karma score to a simple Ratings and Reviews reputation system is to leverage the “Was this Review helpful?” feedback already gathered on each review, and that is what we're illustrating here.

The example shown in Figure_5-W is for a hypothetical product reputation model, so the reviews focus on 5-star ratings in the categories of Overall, Service, and Price. These specifics are for illustration only and are not critical to the design. This model could just as well be used with thumb ratings and arbitrary categories such as Sound Quality or Texture.

The Ratings and Reviews with Karma model has two inputs, the compound Review and the Helpful Vote, and from these it generates the Community Rating Averages, the Was this Helpful? Ratio, and the Reviewer Quality Karma on the fly. Pay careful attention to the sources and targets of this model's inputs: they are not the same users, nor are they talking about the same entities.

- The Review is a compound reputation statement of claims related by a single source user (the reviewer) about a particular target entity, such as a business or a product:

- Each review contains a text-only Comment, typically of limited length, which is often required to pass simple quality tests, such as a minimum size and spell checking, before it can be submitted.

- The user must provide an Overall rating of the target, in this example in the form of a 5-star rating, though it could use any application-appropriate scale.

- Optional Service and/or Price ratings can be provided by users wishing to provide additional detail about the target. A reputation system designer might encourage the contribution of these optional scores by providing an increase in the user's Reviewer Quality Karma (not reflected in the diagram.)

- The last claim included in the compound Review reputation statement is the Was this Helpful? Ratio claim, which is initialized to 0 out of 0 and is never modified by the reviewer, only by the Helpful Votes of readers.

- The Helpful Vote is not input by the reviewer but by a user (the reader) who encounters the review much later. Readers evaluate the review itself, typically by clicking one of two icons, thumb-up or thumb-down, in response to the prompt “Did you find this review helpful?”, though other schemes such as the singular “I liked this!” are common as well.

This model has only three processes/outputs and is pretty straightforward. The only element that may be unfamiliar at this point is the split shown for the Helpful Vote, where the message is duplicated and sent both to the Was this Helpful? process and to the process that calculates Reviewer Quality Karma. This kind of split becomes common as reputation models grow more complex. Besides indicating that the same input is used in multiple places, a split also offers the opportunity for parallel and/or distributed processing: the two duplicate messages take separate paths and need not finish at the same time, or at all.

- The Community Rating Averages represent the average of all the component ratings in the reviews. The Overall, Service, and Price claims are averaged. Since some of these are optional, keep in mind that each claim type may have a different total count of submitted claim values.

Since users may need to revise their ratings, and the company may wish to cancel the effects of ratings by spammers and other abusive users, the effects of each review are reversible. Because this is a simple reversible average process, consider the effects of bias and liquidity when calculating and displaying these averages (see Chap_4-Craftsman_Tips).

- The Was this Review Helpful? process is a reversible ratio, keeping track of the total (T) number of votes and the count of positive (P) votes. It stores the output claim in the target review as the Was this Helpful? Ratio claim with the value “P out of T”.

Policies vary on what should happen when a reviewer is allowed to make significant changes to a review, such as turning a formerly glowing comment into a terse “This Sucks Now!” Many sites simply revert all of the Helpful Votes and reset the ratio. Even if your model doesn't permit edits to a review, this process still needs to be reversible for abuse-mitigation purposes.

- After a simple point accumulation model, our Reviewer Quality Karma is probably the simplest karma model possible: track the ratio of positive Helpful Votes across all of the reviews this user has written to the total number of votes those reviews received. We've labeled this a Custom Ratio because we assume that the application will want to include certain features in the calculation, such as requiring a minimum number of votes before displaying any karma for a user. Likewise, it is typical to create a non-linear scale when grouping users into karma display formats, such as badges like “Top 100 Reviewer”. See the Chap_5-eBay_Merchant_Feedback_Karma model and Chapter_8 for more on display patterns for karma.

Karma, especially public karma, is subject to massive abuse by users interested in personal status or commercial gain, so this process must be reversible. Now that we have a community-generated quality karma claim for each user (at least for those who have written reviews noteworthy enough to attract Helpful Votes), you might notice that this model doesn't use that score as an input or weight in calculating other scores. This is a reminder that reputation models all exist within an application context. Perhaps the site will use it as corporate karma, helping to determine which users should get escalated customer support. Perhaps the score will be public, displayed next to every one of the user's reviews as a status symbol for all to see. It might even be shared only with the reviewer in question, so they can see what the community thinks of their contributions overall. Each of these choices has different ramifications, which we discuss in detail in Chapter_7.
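The three processes described above can be sketched together: the Helpful Vote is split so that it updates both the review's “P out of T” ratio and the reviewer's karma, and every vote can be reversed in both places. The class and method names and the 10-vote display minimum are hypothetical, and this runs both branches of the split synchronously in one process, whereas a real deployment might process them separately.

```python
from collections import defaultdict

MIN_VOTES_FOR_KARMA = 10  # hypothetical display threshold


class ReviewReputation:
    def __init__(self):
        self.review_author = {}                # review_id -> reviewer_id
        self.review_votes = defaultdict(dict)  # review_id -> {reader_id: bool}
        self.karma_pos = defaultdict(int)      # reviewer_id -> helpful votes received
        self.karma_total = defaultdict(int)    # reviewer_id -> all votes received

    def add_review(self, review_id, reviewer_id):
        self.review_author[review_id] = reviewer_id

    def helpful_vote(self, reader_id, review_id, helpful):
        """Split: one vote updates the review's ratio and the reviewer's karma."""
        self.retract_vote(reader_id, review_id)  # one vote per reader; a revote replaces
        self.review_votes[review_id][reader_id] = helpful
        author = self.review_author[review_id]
        self.karma_total[author] += 1
        self.karma_pos[author] += int(helpful)

    def retract_vote(self, reader_id, review_id):
        """Reverse a single vote in both places (e.g. during abuse cleanup)."""
        old = self.review_votes[review_id].pop(reader_id, None)
        if old is not None:
            author = self.review_author[review_id]
            self.karma_total[author] -= 1
            self.karma_pos[author] -= int(old)

    def reset_review(self, review_id):
        """Revert all Helpful Votes on a review, e.g. after a significant edit."""
        for reader_id in list(self.review_votes[review_id]):
            self.retract_vote(reader_id, review_id)

    def helpful_ratio(self, review_id):
        votes = self.review_votes[review_id].values()
        return f"{sum(votes)} out of {len(votes)}"

    def reviewer_karma(self, reviewer_id):
        total = self.karma_total[reviewer_id]
        if total < MIN_VOTES_FOR_KARMA:
            return None  # too few votes to display any karma
        return self.karma_pos[reviewer_id] / total


rep = ReviewReputation()
rep.add_review("r1", "erin")
for i in range(12):
    rep.helpful_vote(f"reader{i}", "r1", helpful=(i % 4 != 0))
print(rep.helpful_ratio("r1"))     # 9 out of 12
print(rep.reviewer_karma("erin"))  # 0.75
rep.reset_review("r1")
print(rep.helpful_ratio("r1"), rep.reviewer_karma("erin"))  # 0 out of 0 None
```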

eBay Merchant Feedback Karma

eBay hosts the Internet's best-known and most-studied user reputation/karma system: Merchant Feedback. Its reputation model, like most others that are several years old, is complex and continuously adapting to new business goals, changing regulations, an improved understanding of its customers' needs, and the never-ending need to combat reputation manipulation through abuse. See the Appendix for a brief survey of relevant research papers about this system, and Chapter_11 for further discussion of the continuous evolution of reputation systems in general.

Rather than detail the entire Feedback Karma model here, we're going to focus on just the claims that come from the buyer and are about the seller. An important point about eBay feedback is that the buyer's claims exist in a specific context: a market transaction, that is, a successful bid at auction for an item listed by the seller. This leads to a generally higher-quality karma score for sellers than they would get if anyone could just walk up and rate a seller without demonstrating that they'd ever done business with them (see Chap_1-implicit_reputation).

This example reputation model was derived from the following eBay pages: http://pages.ebay.com/help/feedback/scores-reputation.html and http://pages.ebay.com/services/buyandsell/welcome.html, and was current as of July 2009. It has been simplified for illustration, specifically by omitting the processing needed to consider only the buyer feedback and DSR ratings provided over the previous 12 months when calculating the Positive Feedback Percentage, the DSR Community Averages, and, by extension, Power Seller status. Also, eBay reports user feedback counters for the last month and quarter, which we omit here for the sake of clarity. Abuse-mitigation features, which are not publicly available, are also excluded.

Figure_5-X illustrates the Merchant Feedback karma reputation model, which is made of typical model components: two compound buyer input claims, Seller Feedback and Detailed Seller Ratings, and several rollups of the merchant/seller's karma: Community Feedback Ratings (a counter), Feedback Level (a named level), Positive Feedback Percentage (a ratio), and the Power Seller Rating (a label).

The context for the buyer's claims is a transaction identifier - the buyer may not leave any feedback unless they have successfully placed a winning bid on an item listed by the seller in the auction market. Presumably the feedback will be primarily concerned with the quality of and delivery of the good(s) purchased. There are two different sets of complex claims a buyer may provide, and the limits on each vary:

- Typically, when a buyer wins an auction, the delivery phase of the transaction starts, and the seller is motivated to deliver the goods promptly and in as-advertised condition. After either a timer expires or the goods have been delivered, the buyer is encouraged to leave Seller Feedback, a compound claim consisting of a 3-level rating (Positive, Neutral, or Negative) and a short text-only comment about the seller and/or transaction. These ratings make up the main component of Merchant Feedback karma.

- Once per week that a buyer has a transaction with a seller, they may leave Detailed Seller Ratings, a compound claim of four separate 5-star ratings in these categories: Item as Described, Communications, Shipping Time, and Shipping & Handling Charges. Other than being aggregated for community averages, these ratings are used only as a component in deciding whether the seller qualifies as a Power Seller.

Though the context for the buyer's claims is a single transaction (or history of transactions), the context for the aggregate reputations being generated is trust in the eBay marketplace: if buyers can't trust sellers to deliver on their promises, eBay has no business. When considering the rollups, we transform the single-transaction claims into trust in the seller, and by extension, that trust rolls up into eBay. This chain of trust is why eBay must continuously update its interface and reputation systems.

eBay exposes a rather extensive set of karma scores for merchants, some of which may not be obvious, such as the amount of time the seller has been a member of eBay, along with color-coded stars, percentages, more than a dozen numbers, two tables, and lists of testimonial comments. In this model, almost every process box represents a claim that is displayed publicly to everyone as reputation, so we'll simply detail each output claim separately.

- The Feedback Score counts every Positive rating given by a buyer as part of Seller Feedback, a compound claim associated with a single transaction. This number is cumulative for the lifetime of the account and generally loses its significance over time: buyers tend to notice it only when it is low.

It is fairly common for a buyer to change this score, with some time limitations, so this effect must be reversible. Sellers spend a large amount of time and effort working to change Negative and Neutral ratings to Positive in order not to lose their Power Seller Rating.

When this score changes, it is then used to calculate the Feedback Level.

- The Feedback Level claim is a graphical representation of the Feedback Score as a colored star icon. Since this is most likely a simple data transformation and normalization process, we've represented it here as a mapping table, with only a small subset of the mappings illustrated. Presumably advanced users know whether a red shooting star is better than a purple star, but we have our doubts about the utility of this representation for buyers. Iconic scores such as these often mean more to their owners, and might represent only a slight incentive or reward for increasing activity in an environment where each successful interaction equals cash in your pocket.

- The Community Feedback Ratings is a compound claim containing the historical counts for each of the three possible Seller Feedback ratings (Positive, Neutral, and Negative) over the last 12 months, so that the totals can be presented in a table showing the results for the last month, 6 months, and year. Older ratings are decayed continuously, though eBay does not specify how often this data is updated if new ratings don't arrive. One performant possibility would be to update the data whenever the seller posts a new item for sale.

The Positive and Negative ratings are used to calculate the Positive Feedback Percentage.

- The Positive Feedback Percentage claim is calculated by dividing the number of Positive feedback ratings by the sum of the Positive and Negative feedback ratings over the last 12 months. Note that Neutral ratings are not included in the calculation. This is a recent change, reflecting some confidence by eBay that the reputation system updates deployed in the summer of 2008 to prevent bad merchants from leaving retaliatory ratings against buyers who were unhappy with a transaction (known as tit-for-tat negatives) have been successful. Initially this calculation included Neutrals, because eBay feared that negative feedback would turn into neutral ratings. It did not.

This score is a component of the Power Seller Rating, meaning that every individual positive and negative rating is important to almost every single reputation score at eBay.

- The DSR Community Averages are simple reversible averages for each of the four rating categories: Item as Described, Communications, Shipping Time, and Shipping & Handling Charges. There is a limit to how often a buyer may contribute DSRs. These categories were recently added to eBay as a new reputation model in an attempt to improve the quality of merchant and buyer feedback overall. Having all of these factors mixed into the overall Seller Feedback ratings, which affected everything else, was problematic. Sellers could end up in too much trouble just because of a bad shipping company or a remote delivery. Likewise, buyers were bidding low prices only to end up feeling gouged by shipping and handling charges. Fine-grained feedback allowed small, one-off problems to be averaged out into the DSR Community Averages instead of turning into red-star Negative scores that poisoned trust overall. The DSRs also give sellers detailed, actionable feedback about problems they are motivated to fix, since these scores make up half of the Power Seller Rating.

- The Power Seller Rating is a prestigious label that appears next to the seller's ID and signals the highest level of trust. It includes several factors external to this model, but two critical components are the Positive Feedback Percentage, which must be at least 98%, and the DSR Community Averages, each of which must be at least 4.5 stars (90% of the 5-star maximum). Interestingly, the DSR thresholds are more lenient than the feedback threshold, keeping more pressure on the overall evaluation of the transaction than on the related details.
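The rollups described in the list above can be sketched as a few pure functions over the stored feedback history. The 98% and 4.5-star thresholds come from the text, and the 12-month window is approximated as 365 days. The star thresholds in `FEEDBACK_LEVELS` are illustrative assumptions, not eBay's published table, and all names are hypothetical. Recomputing from the stored history makes the reversibility the text calls for straightforward: change or delete a rating and recompute.

```python
from dataclasses import dataclass
from datetime import date, timedelta

POSITIVE, NEUTRAL, NEGATIVE = "positive", "neutral", "negative"

# Illustrative subset of a Feedback Level mapping table; thresholds are assumptions.
FEEDBACK_LEVELS = [
    (1000, "red star"),
    (500, "purple star"),
    (100, "turquoise star"),
    (50, "blue star"),
    (10, "yellow star"),
]


@dataclass
class SellerFeedback:
    rating: str  # POSITIVE, NEUTRAL, or NEGATIVE
    left_on: date


def feedback_score(history):
    """Lifetime count of Positive ratings, as described in the text."""
    return sum(1 for f in history if f.rating == POSITIVE)


def feedback_level(score):
    """Map the Feedback Score to a named star level (None below the lowest)."""
    for threshold, label in FEEDBACK_LEVELS:
        if score >= threshold:
            return label
    return None


def positive_feedback_percentage(history, today, window_days=365):
    """Positive / (Positive + Negative) within the window; Neutrals are ignored."""
    cutoff = today - timedelta(days=window_days)
    recent = [f for f in history if f.left_on > cutoff]
    positives = sum(1 for f in recent if f.rating == POSITIVE)
    negatives = sum(1 for f in recent if f.rating == NEGATIVE)
    if positives + negatives == 0:
        return None
    return 100.0 * positives / (positives + negatives)


def meets_power_seller_reputation(pfp, dsr_averages):
    """Checks only this model's two components; eBay also uses external factors."""
    return (pfp is not None and pfp >= 98.0
            and all(avg >= 4.5 for avg in dsr_averages.values()))


today = date(2009, 7, 1)
history = [SellerFeedback(POSITIVE, today - timedelta(days=d)) for d in range(1, 100)]
history.append(SellerFeedback(NEUTRAL, today - timedelta(days=5)))
history.append(SellerFeedback(NEGATIVE, today - timedelta(days=10)))
history.append(SellerFeedback(NEGATIVE, today - timedelta(days=400)))  # outside window

pfp = positive_feedback_percentage(history, today)
dsrs = {"item_as_described": 4.8, "communications": 4.9,
        "shipping_time": 4.6, "shipping_charges": 4.5}
print(feedback_score(history), feedback_level(feedback_score(history)))  # 99 blue star
print(pfp, meets_power_seller_reputation(pfp, dsrs))  # 99.0 True
```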

Flickr Interestingness Scores for Content Quality

The popular online photo service Flickr uses reputation to qualify new user submissions, to track user behavior that violates its Terms of Service, and, most notably, to identify the highest-quality photographs among the millions uploaded every week, using a completely custom reputation model called Interestingness. This reputation score is used to rank photos by user and in searches by tag. But fans of the site know that Interestingness is the key to Flickr's Explore page, which displays a calendar of the most Interesting photos of the day; users can use this graphical calendar to look back at the worthy photographs from any previous day. It's like a daily leaderboard for newly uploaded content.

The version of Flickr Interestingness we present here is an abstraction based on patent application number US 2006/0242139 A1, feedback that Flickr staff have given on their own message boards, observations by power users, and the authors' own experiences building such reputation systems. As with all the models presented here, some liberties have been taken to simplify the model for presentation. Specifically, the patent mentions various weights and ceilings for the calculations without prescribing any particular values for these factors. Likewise, we leave the specific calculations out, though when building such systems we have two pieces of advice: there is no substitute for gathering historical data when deciding how to clip and weight your calculations, and even if you get your initial settings correct, you will need to adjust them over time to adapt to the use patterns that will emerge as the direct result of implementing reputation!

Figure_5-Y has two primary outputs, Photo Interestingness and Interesting Photographer Karma, and everything else feeds into those two critical claims. Of special note in this model is the existence of a feedback loop, represented by a [dashed-pipe?]: a user's reputation score is used to weight his opinions of another user's work more highly than those of a user who has no Interesting photos. Judging by current usage patterns, each day Flickr generates and stores a list of the top 500 Interestingness-scoring photos for the Explore page, and it also updates each photo's current Interestingness score each time one of the input events occurs. Here we illustrate a real-time model for the latter, though it isn't at all clear that Flickr actually does these calculations in real time, and there are several good reasons to consider delaying them (see Chap_5-Keep_Your_Barn_Door_Closed later in this chapter).
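The feedback loop described above can be sketched as follows. This is not Flickr's actual algorithm (the patent gives no values); the base weight, karma bonus, and karma ceiling are hypothetical, as is the choice to let each photo's gain also feed its owner's karma. It runs in real time for simplicity, even though delaying these updates may be wiser.

```python
from collections import defaultdict

BASE_WEIGHT = 1.0   # hypothetical weight for a user with no Interesting photos
KARMA_BONUS = 2.0   # hypothetical extra weight at maximum karma
MAX_KARMA = 100.0   # hypothetical ceiling


class InterestingnessLoop:
    def __init__(self):
        self.photo_owner = {}
        self.photo_score = defaultdict(float)  # Photo Interestingness
        self.karma = defaultdict(float)        # Interesting Photographer Karma

    def add_photo(self, photo_id, owner_id):
        self.photo_owner[photo_id] = owner_id

    def weight_for(self, actor_id):
        # Feedback loop: the actor's own karma scales the weight of their opinion.
        return BASE_WEIGHT + KARMA_BONUS * self.karma[actor_id] / MAX_KARMA

    def on_activity(self, actor_id, photo_id):
        delta = self.weight_for(actor_id)
        self.photo_score[photo_id] += delta
        owner = self.photo_owner[photo_id]
        # The photo's gain also raises its owner's karma, capped at the ceiling.
        self.karma[owner] = min(MAX_KARMA, self.karma[owner] + delta)


model = InterestingnessLoop()
model.add_photo("p1", "fay")
model.add_photo("p2", "gus")
model.karma["fay"] = 50.0  # fay already has Interesting photos
model.on_activity("fay", "p2")  # weight 1 + 2 * 50 / 100 = 2.0
model.on_activity("hal", "p1")  # newcomer: weight 1.0
print(model.photo_score["p2"], model.photo_score["p1"])  # 2.0 1.0
```

The ceiling matters: without it, a loop like this lets already-popular photographers accumulate influence without bound.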

Since there are four main paths through the model, we've grouped all the inputs by the kind of reputation feedback they represent: Viewer Activities, Tagging, Flagging, and Republishing. Each of these paths provides a different kind of input into the final reputations.

- Viewer Activities represent actions that the viewing user takes when interacting with the photo and are presumed to primarily express personal interest in the photo's content. Each of these is considered a significant endorsement of the photo, because it requires a deliberate action by the user. We assume all of these have equal weight, but that is not a requirement of the model.

- A viewer can Add a Note to the image in the form of adding a rectangle over a region of the photo and adding a short notation.

- When a viewer Comments On an image, they leave a text message (limited HTML formatting is supported, such as thumbnails of other Flickr photos) for all other viewers to see. The first comment is usually the most important, as it encourages other viewers to join the conversation. It is not known if Flickr weights the first comment more highly than others.

- When a viewer clicks the Add to Favorites icon, they are not only endorsing the photo, they are sharing that endorsement via their My Favorites page that is a part of their profile.

- Downloads of the various sizes of the photo are also counted as viewer activities. It would probably be wise to count multiple downloads of a single photo by the same user, even in different sizes, as only a single viewer action.

- Lastly, the viewer can Send to Friend, which creates an email with a link to the photo for others to enjoy. The addressee(s) of this message could be a mailing list or multiple users. In that case the action should really be considered Republishing. The software generally cannot determine whether the target is a list, however, so for reputation purposes we assume it is always an individual.

- Tagging is the action of adding short text strings that describe the photo for categorization. Flickr tags serve the same purpose as pre-generated categories, but they exist in a folksonomy: whatever the users tag a photo with is what it is about. Common tags include 2009, me, Randy, Bryce, Fluffy, and cameraphone. They also include the expected descriptive categories of wedding, dog, tree, landscape, purple, tall, and irony (which sometimes means “made of iron”!). Tagging gets special treatment in the reputation model for two reasons. It takes extra effort for users to tag objects, and determining whether one tag is more likely to be accurate than another requires some complicated computation. Likewise, certain tags, though popular, should not be considered for reputation purposes at all.

Tags make their own quantitative contribution to Interestingness, but they are also considered Viewer Activities, so the input is split between both paths.

- Sadly, many popular photographs turn out to be pornographic or otherwise in violation of Flickr's Terms of Service. On many sites, porn tends to quickly generate a high quality reputation score if left untended. Flagging is the process of users marking content as inappropriate for the service. This is a negative reputation vote, the user saying “This doesn't belong here.” Such votes should decrease the Interestingness score faster than the other inputs can raise it.

- Republishing actions occur when a user decides to increase the audience for this photo. The user can do this by Adding it to a Flickr Group or by Embedding it on a web page, either with the blog publishing tools provided or by cutting and pasting the HTML snippet provided. The patent doesn't specifically say that these two actions are treated similarly, but it seems reasonable to do so.
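Counting repeat downloads only once is easy to get subtly wrong, so here is a minimal Python sketch of that rule. It assumes viewer events arrive as simple records. `ViewerEvent` and `count_viewer_actions` are hypothetical names, not part of any Flickr API.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class ViewerEvent:
    """Hypothetical viewer-activity record (not a real Flickr structure)."""
    user: str
    photo: str
    action: str                 # e.g. "note", "comment", "favorite", "download", "send"
    size: Optional[str] = None  # only meaningful for downloads


def count_viewer_actions(events: Iterable[ViewerEvent]) -> int:
    """Count viewer actions, treating all downloads of one photo by one user as one action."""
    seen_downloads = set()
    count = 0
    for event in events:
        if event.action == "download":
            key = (event.user, event.photo)
            if key in seen_downloads:
                continue  # a repeat download, in any size, adds nothing
            seen_downloads.add(key)
        count += 1
    return count


events = [
    ViewerEvent("ann", "p1", "download", "small"),
    ViewerEvent("ann", "p1", "download", "large"),
    ViewerEvent("ann", "p1", "favorite"),
    ViewerEvent("bob", "p1", "download", "small"),
]
print(count_viewer_actions(events))  # 3
```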

Four reputation contribution rollups get mixed into Photo Interestingness, represented by the four parallel paths in Figure_5-Y:

- The Viewer Activity Score represents the effect of viewers taking a specific action on a photo.
- Tag Relatedness represents how closely this tag relates to the tags on other photos.
- The Negative Feedback adjustment reflects reasons to downgrade or disqualify the photo.
- Group Weighting has an early positive effect on reputation with the first few events.

- The events coming into the Karma Weighting process are assumed to have a normalized value of 0.5, since this process is likely to increase it. The process reads the Interesting Photographer Karma of the user who is taking the action (not of the person who owns the photo). It then increases the viewer activity value by some weighting amount before passing it on to the next process. For a simple example, we'll suggest a maximum increase of 0.25. A viewer with no karma gets no increase, and the full 0.25 goes to a hypothetical awesome user whose every photo is beloved by one and all. The resulting score will be in the range 0.5 to 0.75. We assume this interim value is not stored in a reputation statement, for performance reasons.

- Next, the Relationship Weighting process takes the input score (range 0.5 to 0.75) and determines the strength of the relationship between the viewer and the photographer. The patent indicates that a stronger relationship should grant a higher weight to any viewer activity. Again, for our simple example, we'll add up to 0.25 for a mutual first-degree relationship between the users. Lower values can be added for one-way (follower) relationships or even for being members of the same Flickr Groups. The result is now in the range 0.5 to 1.0 and is ready to be added into the historical contributions for this photo.

- The Viewer Activity Score is a simple accumulator and custom denormalizer that sums all of the weighted, normalized event scores. In our example these arrive in the range 0.5 to 1.0. This score is probably the primary basis for Interestingness. The patent indicates that each sum is marked with a timestamp to track changes in the Viewer Activity Score over time.

The sum is then scaled against the range from 0.5 to the maximum known Viewer Activity Score, producing an output from 0.0 to 1.0. This normalized accumulated score is stored in the reputation system so that it can be used to recalculate Photo Interestingness as needed.

- Unlike most of the reputation messages we've considered so far, the incoming message to the Tagging process path does not include any numeric value at all. It contains only the text tag that the viewer is adding to the photo. The tag first passes through the Tag Blacklist process, a Simple Evaluator that decides whether a tag is on a list of forbidden words. If it is, the flow terminates for this event, and this tag makes no contribution to Photo Interestingness. Flickr would likely also want to negatively affect the viewer's Karma score, but that is not shown here. If the tag is not blacklisted, it is considered worthy of further reputation consideration and is sent on to Tag Relatedness. A record of this process is likely to be saved for future reference only when the tag was on the blacklist.

- The non-blacklisted tag then undergoes a process of Tag Relatedness, which is a custom computation of reputation based on cluster analysis described in the patent in this way:

Flickr Tag Relatedness

[0032] As part of the relatedness computation, the statistics engine may employ a statistical clustering analysis known in the art to determine the statistical proximity between metadata (e.g., tags), and to group the metadata and associated media objects according to corresponding cluster. For example, out of 10,000 images tagged with the word “Vancouver,” one statistical cluster within a threshold proximity level may include images also tagged with “Canada” and “British Columbia.” Another statistical cluster within the threshold proximity may instead be tagged with “Washington” and “space needle” along with “Vancouver.” Clustering analysis allows the statistics engine to associate “Vancouver” with both the “Vancouver-Canada” cluster and the “Vancouver-Washington” cluster. The media server may provide for display to the user the two sets of related tags to indicate they belong to different clusters corresponding to different subject matter areas, for example.

This is a good example of a black-box process that may be calculated outside of the formal reputation system. Such processes are often housed on optimized machines or run continuously over data samples in order to give best-effort results in real time. For our model, we assume that the output will be a normalized score from 0.0 (no confidence) to 1.0 (high confidence), representing how likely it is that the tag relates to the content. The simple average of all the scores for the tags on this photo is stored in the reputation system so that it can be used to recalculate Photo Interestingness as needed.

- The Negative Feedback process path considers the effects of Flagging events generated for a photo. Flickr documentation on this topic is nearly non-existent. Still, it seems reasonable that a small number of Negative Feedback events should be enough to neutralize most, if not all, of a high Photo Interestingness score. For illustration, let's say that five events of this type do the most damage possible. Each is then worth 0.2, summed in a reversible accumulator with a maximum value of 1.0. Note that this model doesn't account for abuse by users ganging up on a photo and flagging it as abusive when it is not. That requires a different reputation model, an example of which we illustrate in detail in Chapter_12: Yahoo! Answers Community Abuse Reporting.

- The last component comes via the Republishing path. There, the Group Weighting process adds reputation to the photo by considering how much more exposure it is getting through other channels such as blogs and groups. Official Flickr forum posts indicate that over the first five or so actions, this value quickly rises to its maximum (1.0 in our system). After that it stabilizes, so this process is also a simple accumulator, adding 0.2 for every event and capping at 1.0.

- Photo Interestingness is a simple mixer. All of its inputs are normalized scores from 0.0 to 1.0, and each has either a positive effect on the claim (Viewer Activity Score, Tag Relatedness, Group Weighting) or a negative one (Negative Feedback). The exact formulation for this calculation is not detailed in any documentation. Nor is it clear that anyone who doesn't work for Flickr understands all of its subtleties. We propose a drastically simplified illustrative formulation: Photo Interestingness is made up of 20% each of Group Weighting and Tag Relatedness, plus 60% of Viewer Activity Score, minus Negative Feedback. A common early modification to a formulation like this is to increase the positive percentages enough that no minor component is required for a high score. For example, you could increase the 60% above to 80%, then cap the result at 1.0 before applying any negative effects.

A copy of this claim value is stored in the same high-performance database as the rest of the search-related metadata for the target photo.

- The Interesting Photographer Karma is recalculated each time the Photo Interestingness reputation of one of the photographer's photos changes. A reversible liquidity-compensated average seems sufficient here. This score is used only for Karma Weighting the photographer's own activities when they act as a viewer of other users' photos.
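The simplified Interestingness model above fits in a few dozen lines of Python. This is a minimal illustrative sketch, not Flickr's implementation. The constants are the made-up example values from the text: a 0.5 base, 0.25 boosts, 0.2 per flag or republish, and the 20/20/60 mix. The blacklist contents and all class and function names are hypothetical. Tag Relatedness is passed in as a precomputed black-box score. Flooring the final score at 0.0 is our own assumption. `photographer_karma` uses a simple damping term as an assumed stand-in for a liquidity-compensated average.

```python
from statistics import mean

# Illustrative constants from the text's example, not Flickr's real values.
BASE_EVENT_VALUE = 0.5
MAX_KARMA_BOOST = 0.25
MAX_RELATIONSHIP_BOOST = 0.25
PER_EVENT = 0.2                # flags and republishes: five events reach 1.0
TAG_BLACKLIST = {"forbidden"}  # hypothetical Tag Blacklist contents


def karma_weighting(viewer_karma: float) -> float:
    """0.5 plus up to 0.25, scaled by the *viewer's* karma in [0.0, 1.0]."""
    return BASE_EVENT_VALUE + MAX_KARMA_BOOST * viewer_karma


def relationship_weighting(score: float, relationship: float) -> float:
    """Add up to 0.25; relationship 1.0 stands for a mutual first-degree contact."""
    return score + MAX_RELATIONSHIP_BOOST * relationship


class Photo:
    def __init__(self) -> None:
        self.activity_sum = 0.0            # Viewer Activity Score accumulator
        self.tag_scores: list[float] = []  # Tag Relatedness result per accepted tag
        self.flags = 0                     # Negative Feedback events
        self.republishes = 0               # Group Weighting events

    def viewer_activity(self, viewer_karma: float, relationship: float) -> None:
        # Each weighted event lands in [0.5, 1.0] and is summed.
        self.activity_sum += relationship_weighting(karma_weighting(viewer_karma), relationship)

    def add_tag(self, text: str, relatedness: float) -> bool:
        """Blacklisted tags end the flow; otherwise keep the black-box relatedness score.
        (Per the text, tagging also counts as a viewer activity; callers record that separately.)"""
        if text.lower() in TAG_BLACKLIST:
            return False
        self.tag_scores.append(relatedness)
        return True

    def flag(self, undo: bool = False) -> None:
        # Reversible accumulator: a retracted flag is subtracted again.
        self.flags = max(0, self.flags + (-1 if undo else 1))

    def republish(self) -> None:
        self.republishes += 1

    def viewer_activity_score(self, max_known_sum: float) -> float:
        """Scale the sum from [0.5, max_known_sum] onto [0.0, 1.0]."""
        if max_known_sum <= BASE_EVENT_VALUE:
            return 0.0
        scaled = (self.activity_sum - BASE_EVENT_VALUE) / (max_known_sum - BASE_EVENT_VALUE)
        return min(1.0, max(0.0, scaled))

    def tag_relatedness(self) -> float:
        return mean(self.tag_scores) if self.tag_scores else 0.0

    def negative_feedback(self) -> float:
        return min(1.0, PER_EVENT * self.flags)

    def group_weighting(self) -> float:
        return min(1.0, PER_EVENT * self.republishes)

    def interestingness(self, max_known_sum: float, activity_weight: float = 0.6) -> float:
        """20% Group Weighting + 20% Tag Relatedness + activity_weight * Viewer Activity,
        capped at 1.0, minus Negative Feedback (floored at 0.0 by assumption)."""
        positive = (0.2 * self.group_weighting() + 0.2 * self.tag_relatedness()
                    + activity_weight * self.viewer_activity_score(max_known_sum))
        return max(0.0, min(1.0, positive) - self.negative_feedback())


def photographer_karma(photo_scores: dict[str, float], damping: float = 5.0) -> float:
    """Assumed stand-in for a reversible, liquidity-compensated average: the plain
    average is pulled toward 0.0 while there are few photos. Re-scoring a photo
    replaces its dict entry, which keeps the average reversible."""
    return sum(photo_scores.values()) / (len(photo_scores) + damping)


p = Photo()
for _ in range(3):
    p.viewer_activity(viewer_karma=1.0, relationship=1.0)  # each event worth 1.0
p.add_tag("sunset", relatedness=0.8)
p.republish()
print(round(p.interestingness(max_known_sum=5.5), 2))  # 0.5
p.flag()
print(round(p.interestingness(max_known_sum=5.5), 2))  # 0.3
```

Passing `activity_weight=0.8` gives the modified formulation mentioned above. Under that variant the 1.0 cap actually matters, because the positive weights then sum to more than 1.0.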

The Flickr model is pretty complex and has generated a lot of discussion and mythology amongst the site's photographer community. It's important to reinforce the point that all that work serves a limited context. It influences a photo's search rank on the site, its display order on profiles, and whether it is featured on the Explore page. That last context introduces one more important reputation mechanic: Randomization.

Each day's Photo Interestingness calculations produce a ranked list of photos. If that list were always 100% deterministic, the Explore page could get boring, with the same photos by the same photographers always at the top (see Chap_4-First_Mover_Effects). Flickr combats this by adding a random factor to the photos selected: each day, the top 500 photos have their order randomized. In effect, the photo with the 500th-ranked Photo Interestingness score could be shown first, and the highest-ranked photo could be shown last. The next day, if both are still on the top 500 list, they could both be mixed into the middle.

This has two wonderful effects:

- A more diverse set of high-quality photos and photographers get featured, encouraging more participation by the users producing the best content.

- It mitigates abuse. The Photo Interestingness claim value is not displayed, and the display order is randomized, so the value cannot be deduced simply by observation. This makes it nearly impossible to reverse-engineer the specifics of the reputation model. There is simply too much noise in the system to be certain of the effects of smaller contributions to the score.
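The daily top-500 shuffle described above takes only a few lines. This is a minimal sketch, assuming all Photo Interestingness scores fit in memory as a dict. `explore_page` is a hypothetical name. The optional seed is our own addition: seeding with, say, the date would make a given day's order reproducible.

```python
import random
from typing import Optional


def explore_page(scores: dict[str, float], top_n: int = 500,
                 seed: Optional[int] = None) -> list[str]:
    """Pick the top_n photos by Photo Interestingness, then shuffle their display order."""
    top = sorted(scores, key=scores.get, reverse=True)[:top_n]
    rng = random.Random(seed)  # seeded so one day's order can be reproduced
    rng.shuffle(top)           # the 500th-ranked photo may now appear first
    return top


scores = {f"photo{i}": i / 1000 for i in range(1000)}
page = explore_page(scores, seed=2009)
print(len(page))  # 500
```

Selection still depends on the score, so quality is preserved. Only the order within the selected set is random, which is what hides the exact scores from observers.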

When and why simple models fail: The joy and curse of social collaboration

Probably the greatest thing about social media is that the users themselves create the media from which you, the site operator, capture value. But this is a double-edged sword: it means that the quality of your site is directly correlated with the quality of the content those users create. Sure, the content is cheap. But you get what you pay for, and you will probably need to pay more in order to improve the quality. And that's before you even consider the fact that some people have a different set of incentives than you'd like.

We offer design advice to mitigate these problems, as well as suggestions for specific non-technical solutions.

The User: Your friend, your enemy

As the real-life models above illustrate, reputation can successfully motivate users to contribute to your application in large volume, with high quality, or both. At the very least, it can deliver critical money-saving value to your customer care department. Users can flag bad content that needs attention, and likewise identify power users and content worth featuring or giving other special support.

But mechanical reputation systems are, of necessity, always subject to unwanted or unanticipated manipulation. They are only algorithms, and they lack the subtlety to understand that people have many, sometimes conflicting, motivations for their behavior in the application. One of the strongest motivations, and one that invades reputation systems with a vengeance, is commercial. Spam invaded email. Marketing firms invade movie review and social media sites. And dropshippers are omnipresent on eBay:

eBay dropshippers put the middleman back into the online market: they are people who re-sell items that they don't even own. It works roughly like this:

- Develop a good reputation, a Merchant Feedback Karma of at least 25, by selling items you personally own.

- Buy some dropshipping software, which helps locate items offered cheaply on eBay and elsewhere, and join an online dropshipping service that provides the software and presents the items in a web interface.

- Find cheap items to sell. List them on eBay for more than you can buy them for, but less than other eBay users are selling them for. Be sure to include an average to above-average shipping and handling charge.

- Sell the item to a buyer and receive payment. Then send the order on to the dropshipper (D), along with the dropshipper's smaller price, and the dropshipper delivers the item directly to the buyer.

The eBay Merchant Feedback Karma model does not anticipate this way of doing business; it accounts only for buyers and sellers. Dropshippers are a third party in what was assumed to be a two-party transaction, and they cause the reputation model to break in the following way:

- The dropshippers sometimes fail to deliver goods to the buyer as promised. The buyer gets mad and leaves negative feedback: the dreaded Red Star. That would be fair enough, except that the mark of shame goes to the seller, who never saw or handled the goods, not to the actual shipping party.

- This is a big problem for the seller, who cannot afford the negative feedback if they are going to continue selling on eBay. Having the defective goods returned to them is useless. Trying to unwind the shipment usually takes too long for the buyer, who expects immediate recompense. Unwinding means taking the item back, returning it to the dropshipper if that is even possible, purchasing or waiting for a replacement item, and shipping it.

- In effect, the seller can't make the order right with the customer without refunding the purchase price in a timely manner. This leaves them out of pocket for the price of the goods, along with the hassle of trying to recover it from the dropshipper.

- Sometimes even that isn't enough for the buyer. This is especially likely if the seller first tried to save a few bucks by attempting, unsuccessfully, to deliver alternate goods within the angry customer's time window.

- Negative feedback is seen as so costly to sellers that people who do dropshipping have been known to not only eat the price of the goods, but additionally pay a cash bounty of up to $20.00 to get buyers to flip a red star to green.

What is the cost to clear negative feedback for dropshipped goods? The cost of the item + $20.00 + the overhead of negotiating with the buyer. This is the cost reputation imposes on dropshipping on eBay.

The lesson here is that a reputation model will be re-interpreted by your users as they find new ways to utilize your application - operators need to keep a wary eye on the specific behavior patterns they see emerging and adapt accordingly. Chapter_10 provides more detail and specific recommendations for prospective reputation modelers.

Keep Your Barn Door Closed (But Expect Peeking)

At some point, you will have to decide how open to be (or not be) about the details of your reputation system. Exactly how much of your models' inner workings should you reveal to the community? Users will inevitably want to know:

- What reputations is the system keeping? (Remember, not all reputations will be visible ones; see Chap_8-Corporate_Reputations.)

- What are the inputs that feed into those reputations?

- How are they weighted? (Put another way: “What are the important inputs?”)

This decision is not a trivial one. If you err on the side of extreme secrecy, you risk damaging your community's trust in the system you've provided. Your users may come to question its fairness, or, if the inner workings remain too opaque, they may flat-out doubt its accuracy.

Most reputation-intensive sites today attempt to satisfy at least some of the community's curiosity about how content and user reputations are earned. In any case, you can't keep your system a complete secret.

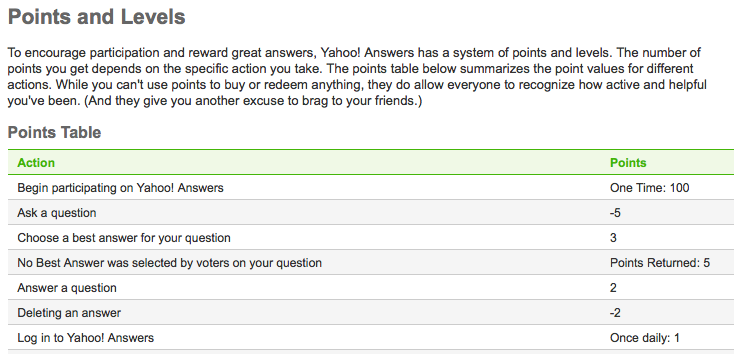

Divulging too much detail about your reputation system to the community, however, is equally bad. More site designers probably make this mistake, especially in the early stages of deploying the system and growing the community. Consider Yahoo! Answers' highly specific breakdown of actions on the site, and the points awarded for each (see Figure_5-Z).

Why might this be a mistake? For a number of reasons. Assigning overt point values to specific actions goes beyond enhancing the user experience and starts to directly influence it. Arguably, it may tip right over into the realm of dictating user behavior. This is generally frowned upon.

It also arms the malcontents in your community with exactly the information they need to deconstruct your model. They won't even need to guess at things like the relative weightings of inputs into the system: the relative value of each input is right there on the site, writ large! Instead, try to use language that is clear and truthful without necessarily being comprehensive and exhaustive.

Some language both understandably clear and appropriately vague

The exact formula that determines medal-achievement will not be made public (and is subject to change) but, in general, it may be influenced by the following factors: community response to your messages (how highly others rate your messages); the amount of (quality) contributions that you make to the boards; and how often and accurately you rate others' messages.

This does not mean, of course, that some in your community won't continue to wonder, speculate and talk amongst themselves about the specifics of your reputation system. Algorithm-gossip has become something of a minor sport on collaborative sites like Digg and YouTube.

This is not surprising. For some participants, guessing at and gaming reputations like 'Highest Rated' or 'Most Popular' is probably just that: an entertaining game and nothing more. Others, however, care only about the benefits any insight into the system's inner workings might bring them. These include greater visibility for themselves and their content, more influence within the community, and the greater currency that follows both. (See Egocentric Incentives, Chap_6-Egocentric_Incentives.)

Here are some helpful strategies for masking the inner workings of your reputation models and algorithms.

Decay and Delay

Time is on your side, or it can be, in one of a couple of ways. First, consider using time-based decay in your models: recent actions “count for” more than actions in the distant past, and the effects of older actions decay (lessen) over time. Incorporating time-based decay has several benefits:

- Reputation-leaders can't “coast” on their laurels. When reputations decay from non-use, they have to be constantly earned. This encourages your community to stay active and engage with your site frequently.

- Decay is an effective counter to the natural stagnancy that comes from network effects (see Chap_4-First_Mover_Effects). Older, more established participants will not tend to linger at the tops of rankings quite as much.

- Those who probe the system to gain an unfair advantage will not reap long-term benefits from doing so unless they keep at it, within the constraints imposed by the decay. (This profile of behavior makes it easier to spot, and correct for, gamers.)
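The text does not prescribe a decay curve. Exponential decay with a half-life is one common choice, and it is the one assumed in this minimal sketch. `decayed_score` and the 30-day half-life are hypothetical.

```python
from typing import Iterable, Tuple


def decayed_score(events: Iterable[Tuple[float, float]], now: float, half_life: float) -> float:
    """Sum (timestamp, value) events, halving each event's weight every `half_life` time units."""
    return sum(value * 0.5 ** ((now - t) / half_life) for t, value in events)


# Two identical inputs, 30 days apart, with a hypothetical 30-day half-life:
print(decayed_score([(0.0, 1.0), (30.0, 1.0)], now=30.0, half_life=30.0))  # 1.5
```

This version computes the decay at read time from timestamped inputs. A leader who stops participating therefore slides down the rankings without any batch job having to run.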

Delaying the results of newly triggered inputs is also beneficial. Suppose there is a reasonable window of time between the triggering of an input (Favoriting a Photo, for instance) and its effect on that object's reputation (moving the photo higher in a visible ranking). That window confounds a gamer's ability to do easy 'what if' comparisons, particularly if the length of the delay is itself unpredictable.

When the reputation effects of various actions are instantaneous, you've given gamers a powerful analytic tool for reverse-engineering your models.
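An unpredictable delay can be approximated by holding each input in a queue until a randomly chosen due time. This is a minimal sketch under our own assumptions: a uniform random delay between hypothetical bounds, and a `tick` method that some scheduler calls periodically. `DelayedApplier` is a hypothetical name.

```python
import heapq
import random
from typing import Optional


class DelayedApplier:
    """Hold reputation inputs for a random delay before they affect any score."""

    def __init__(self, min_delay: float, max_delay: float, seed: Optional[int] = None) -> None:
        self.min_delay = min_delay
        self.max_delay = max_delay
        self.rng = random.Random(seed)
        self.pending: list[tuple[float, int, str, float]] = []  # heap of (due, seq, target, value)
        self.seq = 0  # tie-breaker so equal due times keep submission order
        self.scores: dict[str, float] = {}

    def submit(self, now: float, target: str, value: float) -> None:
        due = now + self.rng.uniform(self.min_delay, self.max_delay)
        heapq.heappush(self.pending, (due, self.seq, target, value))
        self.seq += 1

    def tick(self, now: float) -> None:
        # Apply every input whose due time has passed; later ones stay hidden.
        while self.pending and self.pending[0][0] <= now:
            _, _, target, value = heapq.heappop(self.pending)
            self.scores[target] = self.scores.get(target, 0.0) + value


d = DelayedApplier(min_delay=60, max_delay=3600, seed=1)
d.submit(now=0, target="photo1", value=1.0)
d.tick(now=59)
print(d.scores)  # {}
```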

Provide a Moving Target

We've already cautioned you to keep your system flexible (see Ch03-Plan_For_Change). That is good advice not just from a technical standpoint, but from a social and strategic one as well. Put simply, leave yourself enough 'wiggle room' to adjust the impact of different inputs into the system. You might add new inputs, change their relative weightings, or eliminate ones that were previously included. This gives you an effective tool for confounding gaming of the system. If you suspect that a particular input is being exploited, you at least have the option of tweaking the model to compensate for the abuse. You will also want the flexibility to introduce new types of reputations to your site, or to retire ones that are no longer serving a purpose.

It is tricky, however, to enact changes like these without impacting the social contract you've established with the community. Once you've codified a certain set of desired behaviors on your site, some people will (understandably) be upset if the rules seemingly change out from under them. This is yet another argument for 'staying vague' about the mechanics of the system, or downplaying the importance of your reputation system.
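One way to keep that wiggle room is to store input weights as data rather than in code. This is a minimal sketch of that idea. The JSON layout, the weight values, and the `load_weights`/`score` names are all hypothetical.

```python
import json

# Hypothetical weights file, kept outside the code so that weights can be retuned,
# new inputs added, or old ones retired without a redeploy.
WEIGHTS_JSON = """
{
  "version": 3,
  "weights": {"favorite": 1.0, "comment": 1.0, "download": 0.5}
}
"""


def load_weights(text: str) -> dict[str, float]:
    return json.loads(text)["weights"]


def score(events: list[str], weights: dict[str, float]) -> float:
    # Inputs with no entry in the table (unknown or retired) contribute nothing.
    return sum(weights.get(event, 0.0) for event in events)


weights = load_weights(WEIGHTS_JSON)
print(score(["favorite", "download", "send"], weights))  # 1.5
# Suspect downloads are being exploited? Zero them out in the config:
weights["download"] = 0.0
print(score(["favorite", "download", "send"], weights))  # 1.0
```

The version field lets you record which weighting produced a given stored score. That record helps when a change to the rules upsets the community.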

Reputation from Theory to Practice

The first half of this book, up through this chapter, has focused on theory. We've built up an understanding of reputation systems in several steps. We defined the key concepts and created a visual grammar for how they work. We provided a technical description of an actual execution environment and defined a set of key building blocks, which we used to construct the most common simple models. Finally, we presented some detailed models of popular, complex reputation systems in the wild.

Along the way, we sprinkled in practitioners' tips about important lessons learned from operational reputation systems. These prepare you for the second half: applying the theory to a specific application. Yours!