Building Blocks and Reputation Tips

Extending the Grammar - Building Blocks

By this point, you should be feeling fairly conversant in the lingua of reputation systems, and you've had some exposure to their constituent bits and pieces. We've detailed reputation statements, messages, and processes somewhat and you've even seen some fairly rudimentary (but serviceable!) models. In this chapter, we'll “Level Up” a bit and explore reputation claims in greater detail (and describe a taxonomy of claim types.)

We'll also explore reputation Roll-ups: these are the actual computations we'll perform on incoming messages to produce a particular output. Different types of roll-ups yield functionally very different types of reputations, so, of course, we'll provide guidance along the way for which to use when.

Finally, we'll end with a smattering of very practical advice, a section of Craftsman's Tricks. Once you begin to think through the architecture and design of your own system, we've found that you will inevitably have to wrestle with the issues addressed in this section. (Or, perhaps more regrettably, you'll fail to wrestle with them in the design phase and end up with a band-aided approach down the road in production.)

The Data: Claim Types

Remember, a fundamental component of a Reputation Statement is the claim: an assertion of quality that a source makes about a target. In Chap_1-The_Reputation_Statement , we discussed how claims can be either explicit (a direct statement of quality, intended by the statement's source to act as such) or implicit (an activity related to an object, from which we infer the source's interest in the target object). These fundamentally different approaches are important because it's the combination of the two, implicit and explicit claims, that can yield some very nuanced and robust reputation models. We should pay attention to what people say, but give equal weight to what they do, to determine where the community's interest is focused in a pool of content.

Claims can also have different types. It is helpful to distinguish between Qualitative claims (claims that attempt to describe one or more qualities of a reputable entity, but may or may not be easily measured) and Quantitative claims: claims that can be measured (and, in fact, are largely generated, communicated and read back as numbers of some kind).

Reputation statements have Claim Values: you can generally think of these as “what you get back when you ask for a reputation's current state.” So, for instance, we can always query the system for Movies.Review.Overall.Average and get back a normalized score within the range of 0-1.

Note that the format of a claim does not always map exactly to the format that you may wish to display (or, for that matter, gather) that claim in. It's more likely that you'd want to show your users a scalar number (“3 out of 5 stars”) for a movie's average review.

Qualitative Claim Types

Qualitative claims attempt to describe some quality of a reputable object. This quality may be as general as the object's overall “Quality” (“This is an excellent restaurant!”) or as specific as some particular dimension or aspect of the entity (“The cinematography was stunning!”). Generally, qualitative claim types are fuzzier than hard quantitative claims, so, quite often, qualitative claims end up being useful implicit claims.

This is not to say, however, that qualitative claims can't have a quantitative value when considered en masse: almost any claim type can at least be counted, and displayed as some form of a simple cumulative score (or 'Aggregator'; we discuss the various reputation Roll-ups below in Chap_4-Roll-Ups ). So while we can't necessarily assign an evaluative score to a user-contributed text comment, for instance (at least, not without the rest of the community involved), it's quite common on the Web to see a count of the number of comments left about an entity, as a crude indicator of that item's popularity or interest level.

So what are some common, useful qualitative claims?

User-contributed comments are perhaps the most common, defining feature of the social Web. Though the debate rages back and forth about the value of said comments (and, let's be fair, that value differs from site to site, and community to community), no one denies that the ability to leave text comments about a piece of content, be it a blog entry, an article or a YouTube video, is a wildly popular form of expression on the web.

Comments are typically provided to users as a free-form means of expression: a little white box that the commenter can populate with whatever they choose. Better social sites will attempt to direct these expressions, however, and provide guidelines or suggestions for what is considered on- or off-topic.

It would be a shame not to tap into these comments for the purposes of establishing and maintaining an entity's reputation.

Comments are usually free-form (unstructured) textual data provided by the user. They are typically character-constrained in some way, though these constraints will vary from context-to-context: the character allowance for a message board posting will inevitably be much greater than Twitter's famous 140-character limit.

Comments may or may not accept rich-text entry and display, and there may be certain content-filters applied to comments upfront (you may, for instance, choose to prohibit profanity in comments, or disallow inclusion of fully-formed URLs.)

Comments are often but one component of a larger compound reputation statement. Movie reviews, for instance, are a combination of 5-star quantitative claims (and perhaps different ones for particular aspects of the film) and one or more free-form comment type claims.

Comments are powerful reputation claims when interpreted by humans, but may not be quite as easy for automated systems to evaluate.

Evaluating text comments will vary slightly by context. If a comment is but one sub-component of a user review, then the comment can contribute to a 'completeness' score for that review: reviews with comments are deemed more complete than those without (and, in fact, the comment field may be required for the review to be accepted at all).

In the case of comments directed at another contributor's content (user comments about a photo album, or message board replies to a thread) consider evaluating comments as a measure of interest or activity around that reputable entity.

- Flickr's Interestingness algorithm (likely) accounts for the amount and velocity of commenting activity around a photo to determine its quality.

- On Yahoo! Local it's possible to give an establishment a full Review (with Star Ratings, free-form comments, and bar-ratings for sub-facets of your experience.) Or you can leave just a quick Rating of 1-5 stars. (This option is to encourage quick engagement.) If Local keeps an internal, corporate reputation tracking the value of these reviews, you can imagine that there's greater business value (and utility to the community) in full reviews with well-written text comments.

In our research at Yahoo! we often probed notions of 'authenticity' in an attempt to determine how a reader interprets the veracity of a claim or evaluates the authority or competence of a claimant.

We wanted to know: when people read reviews online (or blog entries, or tweets) what are the specific cues that make them more likely to accept what they're reading as accurate? Is there something about the presentation of the material that makes it more trustworthy? Or perhaps it's the presentation and attribution of the content author? (Does an 'Expert' badge convince anyone?)

Overwhelmingly, time and again, we found that it's the content itself (the review, entry or comment being evaluated) that makes the decision in readers' minds. If an argument is well-stated, seems reasonable, and if the reader can find some aspect of it that they agree with, then readers are more likely to trust the content, no matter what meta-embellishment and framing you provide around it.

Conversely, poorly-written reviews, with typos or shoddy logic are not seen as legitimate and trustworthy reviews. People really do pay attention to the content of what is written.

There are other types of metadata (besides free-form text) that we can derive reputation value from. Any time a user uploads some media, either in response to another piece of content or as a sub-component of the primary contribution itself, that activity is worth noting.

We're separating these types of metadata from text for two reasons:

- Text comments or responses are typically entered in context (right there in the browser as a visitor interacts with your site) whereas media uploads usually require a slightly deeper level of commitment and planning. Perhaps they require an external device and editing of some kind before submission. Therefore…

- You may want to weight these types of contributions differently than text comments. (Or not. It depends on the context.)

Media uploads are generally any discrete piece of metadata that is not textual in nature.

- Video

- Images

- Audio

- Collections of any of the above

When the media object is in response to another piece of content, then consider it as an input into the level of activity or interest for that item.

When the upload is an integral part of a content submission, then factor its presence or absence as a part of the quality for that entity.

Examples of media uploads include:

- YouTube's video responses

- A restaurant review site may attribute greater value to a review that features uploaded pictures of the reviewers' meal: it makes for a compelling display and gives a more-rounded view of that reviewer's dining experience.

A sometimes-useful claim is the presence or absence of inputs external to the reputation system altogether. Reputation-based search relevance algorithms (yes, yes, we already indicated that these lie outside the scope of this book) like Google PageRank rely on these types of claims.

A common format for this is a URL to an externally-reachable and verifiable piece of supporting data for this claim. This includes embedding web 3.0 media widgets into other claim types, such as text comments.

When the external object is provided by a community member in response to another piece of content, then consider it as an input into the level of activity or interest for that item.

When the external reference is provided by the content author as an integral part of a submission, then factor its presence or absence as a part of the quality (or level of completion) for that entity.

- Some shopping review sites encourage cross-linking to other products or off-site resources as an indicator of review completeness: it demonstrates that the review author has done their homework and fully considered all options.

- In the blogging world, the TrackBack originally had some value as an externally-verifiable indicator of a post's quality or interest level. (Sadly, though, TrackBacks have been a highly-gamed spam mechanism for years.)

Quantitative Claim Types

Now these claims are the nuts & bolts of modern reputation systems, and they're probably what you think of first when you think of methods for assessing or expressing an opinion about the quality of an item. Quantitative claims can be measured (in fact, by their very nature, they are measurements.) Therefore, computationally and conceptually, they are easier to deal with in our reputation systems.

Normalized Value

This is the most common type of claim in a reputation system, and it always has a value within a range from 0.0-1.0.

The strength of this claim type is its general flexibility: it's the easiest of all quantitative types to perform math operations on; it's the only quantitative claim type that is finitely bounded; and reputation inputs gathered in a number of different formats can be normalized with ease (and then de-normalized back to a display-specific form suitable for the context you want to display in.)

Another strength of normalized values is the general utility of the format: normalizing the data is the only way to perform cross-object & cross-reputation comparisons with any sense of certainty. (Will your application want to display “5 star restaurants” in a consistent fashion alongside “4-star hotels?” Then you'd better be normalizing those scores somewhere!)

Also valuable is the readability of the format: because the bounds of a normalized score are already known, they are very easy (for you, the system architect, or others with specific access to the data) to read at a glance. With normalized scores, you do not need to understand the context of a score to be able to understand its value.

- Normalized values are always floating point numbers within a range from 0.0 to 1.0.

- You will rarely (never?) display a normalized value to your end-users. Generally, these values are de-normalized into an appropriate display format for your application context. (Converted back to stars, for instance.)

Very little interpretation is needed with a normalized claim. Within the range of 0.0 to 1.0, closer to 0 is worse, closer to 1 is better.
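To make the cross-scale comparison concrete, here is a minimal sketch in Python. It assumes a simple proportional mapping (the rating divided by the top of its scale); the function name `normalize` and the sample ratings are illustrative only and do not come from any particular reputation framework.

```python
def normalize(value: float, scale_max: float) -> float:
    """Map a rating on a 0..scale_max scale onto the range 0.0..1.0.

    Assumption: a plain proportional mapping. A real system might use
    a lookup table or a weighted transform instead.
    """
    if not 0 <= value <= scale_max:
        raise ValueError(f"{value} is outside the range 0..{scale_max}")
    return value / scale_max


# A restaurant rated 4 on a 5-star scale and a hotel rated 3 on a 4-star scale.
restaurant_score = normalize(4, scale_max=5)
hotel_score = normalize(3, scale_max=4)

# Once both scores are normalized they can be compared directly,
# without knowing which scale each one was gathered on.
restaurant_is_better = restaurant_score > hotel_score
```

The raw ratings (4 and 3) say little on their own; only after normalization does the comparison between the two become meaningful.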

Rank Value

A positive integer, unique and limited to the number of targets in a bounded set of targets. So, for a data set of “100 Movies from the Summer of 2009”, it is possible to have a ranked list wherein each film has one and exactly one value within that set.

Rank values can be a useful optimization for slicing-and-dicing large collections of reputable entities: perhaps you want to quickly construct a list of only the Top 10, 20 or 100 objects in a set.

A rank value is a positive integer, no larger than the count of the object-set that contains the reputable entity.

- Use items' ranks for quick one-to-one comparisons of like items

- Build a ranked list of objects within a collection

Examples of rank values in the wild:

- Amazon Sales Rank

- Leaderboards
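As a rough illustration, rank values can be derived from any comparable score. The sketch below assumes each target already has a normalized score and breaks ties alphabetically so that every rank stays unique; the names `rank_targets` and `top_n` and the tie-breaking rule are assumptions made for this example.

```python
def rank_targets(scores: dict[str, float]) -> dict[str, int]:
    """Give each target a unique rank from 1 (best) to len(scores)."""
    # Sort by descending score; ties are broken by name (an assumption)
    # so that no two targets share a rank.
    ordered = sorted(scores, key=lambda name: (-scores[name], name))
    return {name: position for position, name in enumerate(ordered, start=1)}


def top_n(ranks: dict[str, int], n: int) -> list[str]:
    """Return the names of the n best-ranked targets, best first."""
    by_rank = sorted(ranks.items(), key=lambda item: item[1])
    return [name for name, rank in by_rank if rank <= n]


movie_scores = {"Movie A": 0.9, "Movie B": 0.4, "Movie C": 0.9}
movie_ranks = rank_targets(movie_scores)
best_two = top_n(movie_ranks, 2)
```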

Scalar Value

When you think of scalar rating systems, we'd be surprised if, in your mind, you're not seeing 'Stars.' 3-, 4- and 5-star rating systems abound on the Web and have achieved a certain level of semi-permanence in these types of systems, perhaps because of the ease with which users can engage with Star Ratings (they're a nice way to express an opinion beyond simple like or dislike).

More generally, Scalar Ratings are a type of reputation claim that gives a value to 'grade' an entity somewhere along a bounded spectrum. The spectrum may be finely delineated and allow for many gradations of opinion (10-Star ratings are not unheard of) or it may be binary in nature (Thumbs-up/Thumbs-down, for instance).

- Star Ratings (3-, 4- and 5-stars are common)

- Letter-Grade (A, B, C, D, F)

- Novelty-type themes (“4 out of 5 cupcakes!”)

Yahoo! Movies features letter-grades for reviews comprised of a combination of professional reviewers' scores (these are transformed from a whole host of different claim types, from the NYTimes letter-grade style to the classic Siskel & Ebert Thumbs-Up/Thumbs Down.) Yahoo! user reviews, which are gathered on a 5-star system, also inform the overall grade for a Movie.

Processes: Computing Reputation

Every reputation model is made up of inputs, processes, and outputs. The processes themselves perform different tasks: rollups do calculation and storage of interim results, transformers change data from one format to another, and routers handle input and output as well as the decision making that directs traffic between processes. Note that these individual process descriptions are represented as discrete process boxes, but in practice the implementation of a process in an operational system will often combine multiple roles. For example, a single process may take input, do a weighted mixer calculation, send the result as a message to another process, and also return the value to the calling application and terminate that branch of the reputation model.

Rollups: Counters, Accumulators, Averages, Mixers, and Ratios

Rollup processes are the heart of any reputation system: they are where the primary calculation and storage of reputation statements is performed. There are several generic kinds of rollups which serve as abstract templates for the actual customized versions in operational reputation systems. Each type (Counter, Accumulator, Average, Mixer, and Ratio) represents the most common simple computational unit in a model; practical implementations almost always add additional computation to these simple patterns.

All processes receive one or more inputs that include a reputation source, target, contextual claim name, and a claim value. Unless otherwise stated, the input claim value is a normalized score. All processes that generate a new claim value, such as rollups and transformations, are assumed to be able to forward the new claim value to another process, even if this is not indicated on the diagram. By default in rollups, the resulting computed claim value is stored in a reputation statement whose source is the aggregate source, whose target is the input's target, and whose claim name is the claim context plus the rollup name. For example, Movies_Acting_Rating becomes Movies_Acting_Average.

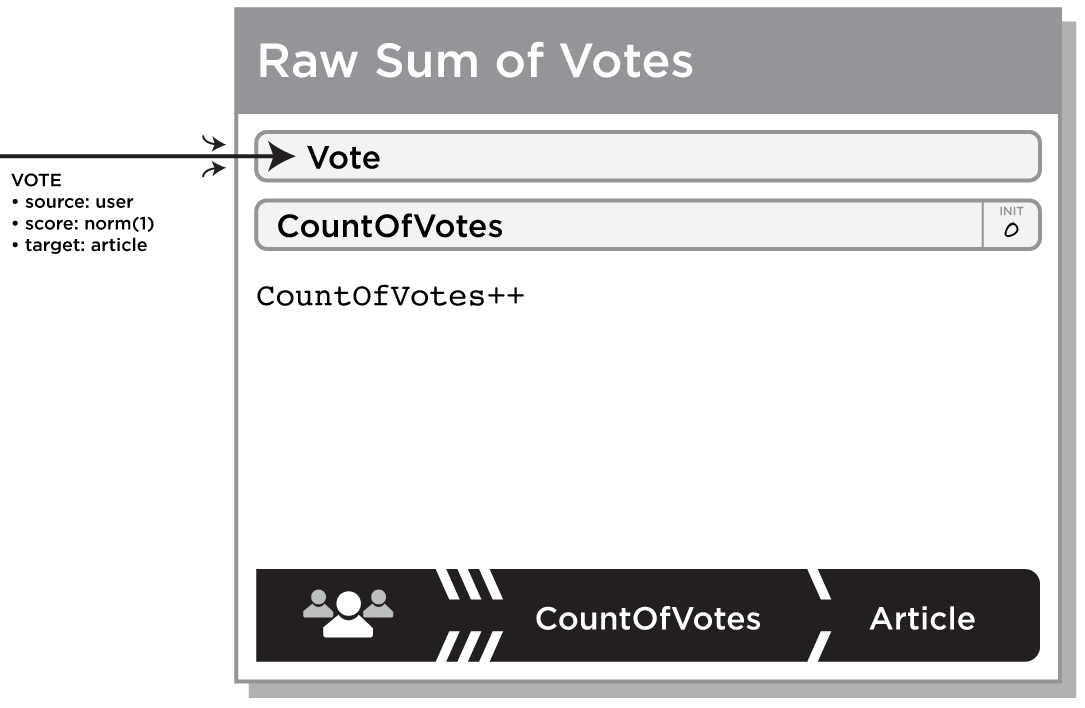

Simple Counter

Increments a stored numeric claim representing all the times it received any input.

Simple Counter, like all processes, requires an input message to activate, but it ignores any supplied claim value. Once it receives the input message, it reads (or creates) and increments the CountOfInputs which is stored as the claim value for this process.

| Pros | Cons |
| --- | --- |
| Counters are simple to maintain and can be easily optimized for high performance. | No way to recover from abuse. If this is needed, see Chap_4-Reversible_Counter . Counters tend to continuously increase over time, tending to deflate the value of individual contributions. See Chap_4-Bias_Freshness_and_Decay . Counters, especially when used in public reputation scores and leaderboards, are the most subject to Chap_4-First_Mover_Effects . |
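A Simple Counter could be sketched roughly as follows. The class name, the `on_input` method, and the in-memory dictionary standing in for stored reputation statements are all assumptions made for illustration; only `CountOfInputs` comes from the description above.

```python
from typing import Optional


class SimpleCounter:
    """Counts every input received for a target, ignoring the claim value."""

    def __init__(self) -> None:
        # target -> CountOfInputs; a stand-in for persistent storage.
        self.count_of_inputs: dict[str, int] = {}

    def on_input(self, source: str, target: str,
                 claim_value: Optional[float] = None) -> int:
        # The claim value is deliberately ignored: any input counts once.
        # The count is created on first use, then incremented.
        self.count_of_inputs[target] = self.count_of_inputs.get(target, 0) + 1
        return self.count_of_inputs[target]


views = SimpleCounter()
views.on_input("alice", "photo-1")
views.on_input("bob", "photo-1", claim_value=0.2)
views.on_input("alice", "photo-1")  # repeat inputs are counted again
```

Note that nothing ties a count back to its source, which is why this pattern cannot undo abusive input.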

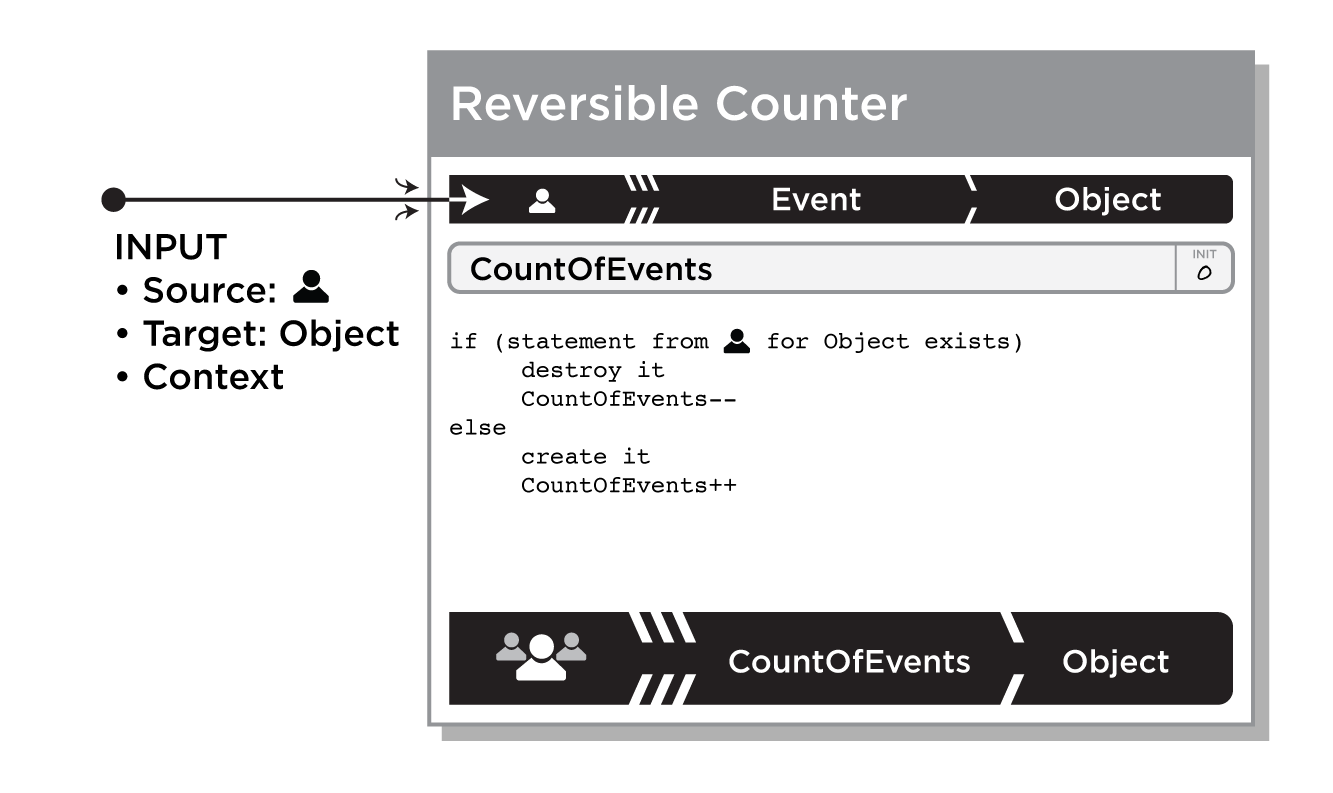

Reversible Counter

A Reversible Counter, like all processes, requires an input message to activate, but it ignores any supplied claim value. Once it receives the input message, it either increments or decrements a stored numeric claim depending on whether or not there is already a stored claim for this source and target.

Reversible counters are useful when there is a high probability of abuse (because of commercial incentive benefits such as contests - See Chap_6-Commercial_Incentives ) or when you anticipate the need to rescind inputs by users or the application for other reasons.

| Pros | Cons |
| --- | --- |
| Counters are easy to understand. Individual contributions can be automatically undone, allowing correction for abusive input and for bugs. Allows for individual inspection of source activity across targets. | Scales with database transaction rate: at least twice as expensive as Chap_4-Simple_Counter . The equivalent of keeping a log file of every event. Counters tend to continuously increase over time, tending to deflate the value of individual contributions. See Chap_4-Bias_Freshness_and_Decay . Counters, especially when used in public reputation scores and leaderboards, are the most subject to Chap_4-First_Mover_Effects . |
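The increment-or-decrement behavior of a Reversible Counter might look like the sketch below. It assumes a set of (source, target) pairs as the stored per-source statements; the class and attribute names are hypothetical.

```python
from typing import Optional


class ReversibleCounter:
    """Counts one input per source and target; a repeat input undoes it."""

    def __init__(self) -> None:
        self.count: dict[str, int] = {}              # target -> current count
        self.statements: set[tuple[str, str]] = set()  # stored (source, target)

    def on_input(self, source: str, target: str,
                 claim_value: Optional[float] = None) -> int:
        key = (source, target)
        if key in self.statements:
            # A stored claim already exists: reverse the earlier increment.
            self.statements.remove(key)
            self.count[target] -= 1
        else:
            # First input from this source: remember it and count it.
            self.statements.add(key)
            self.count[target] = self.count.get(target, 0) + 1
        return self.count[target]


favorites = ReversibleCounter()
favorites.on_input("alice", "photo-1")
favorites.on_input("bob", "photo-1")
after_undo = favorites.on_input("alice", "photo-1")  # alice rescinds her input
```

The extra set is what makes the process more expensive than a Simple Counter: every input requires a lookup and a write for the per-source statement in addition to the count itself.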

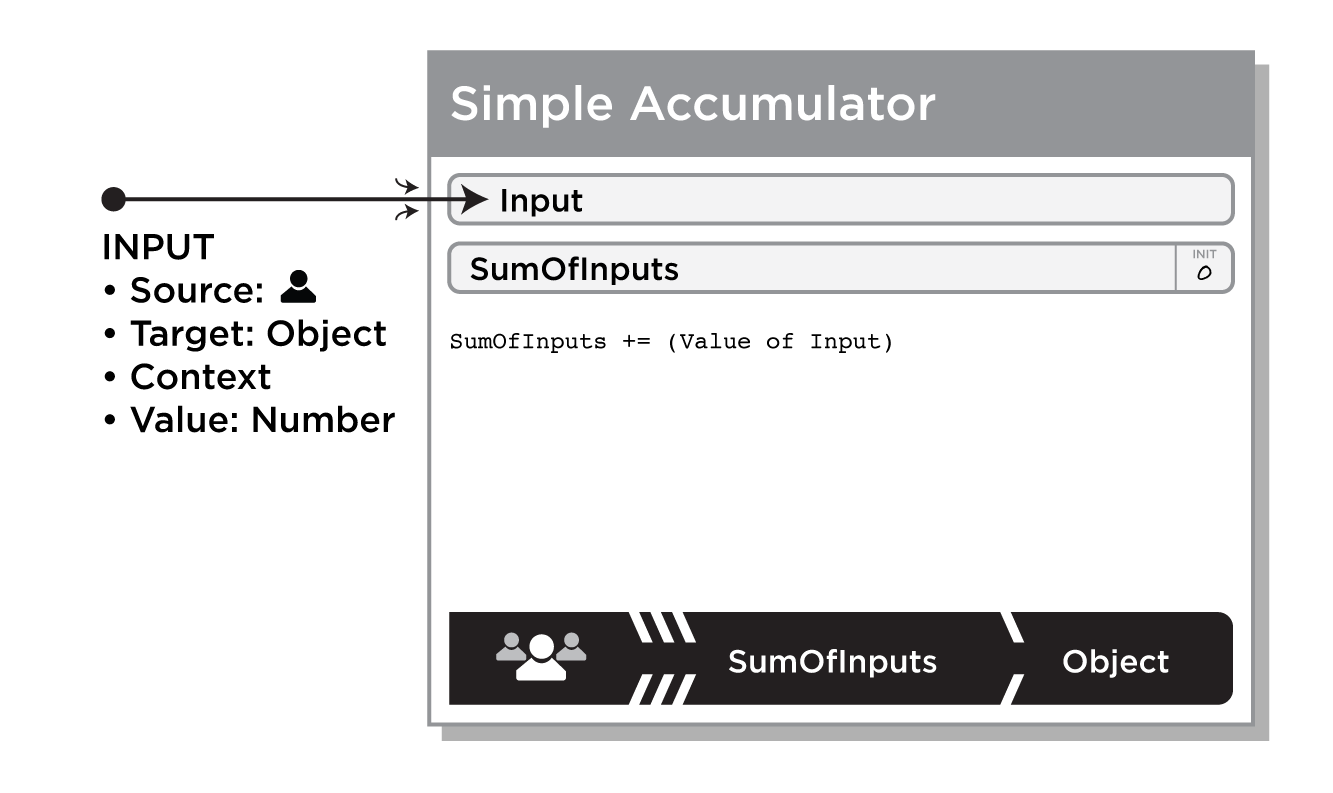

Simple Accumulator

Adds a single numeric input value to a running sum that is stored in a reputation statement.

| Pros | Cons |
| --- | --- |
| As simple as it gets: the sums of related targets can be mathematically compared for ranking. Low storage overhead for simple claim types: the system need not store each user's inputs. | Older inputs can have disproportionate value. No way to recover from abuse. If this is needed, see Chap_4-Reversible_Accumulator . If both positive and negative values are allowed, it becomes difficult to have any confidence in the meaning of the comparison of the sums. |
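A Simple Accumulator is little more than a running sum per target. The sketch below uses a hypothetical class name and an in-memory dictionary in place of stored reputation statements.

```python
class SimpleAccumulator:
    """Adds each numeric input to a running sum kept per target."""

    def __init__(self) -> None:
        # target -> running sum; individual inputs are not retained.
        self.sum_of_inputs: dict[str, float] = {}

    def on_input(self, target: str, claim_value: float) -> float:
        self.sum_of_inputs[target] = (
            self.sum_of_inputs.get(target, 0.0) + claim_value
        )
        return self.sum_of_inputs[target]


karma = SimpleAccumulator()
karma.on_input("user-42", 0.5)
total = karma.on_input("user-42", 0.25)
```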

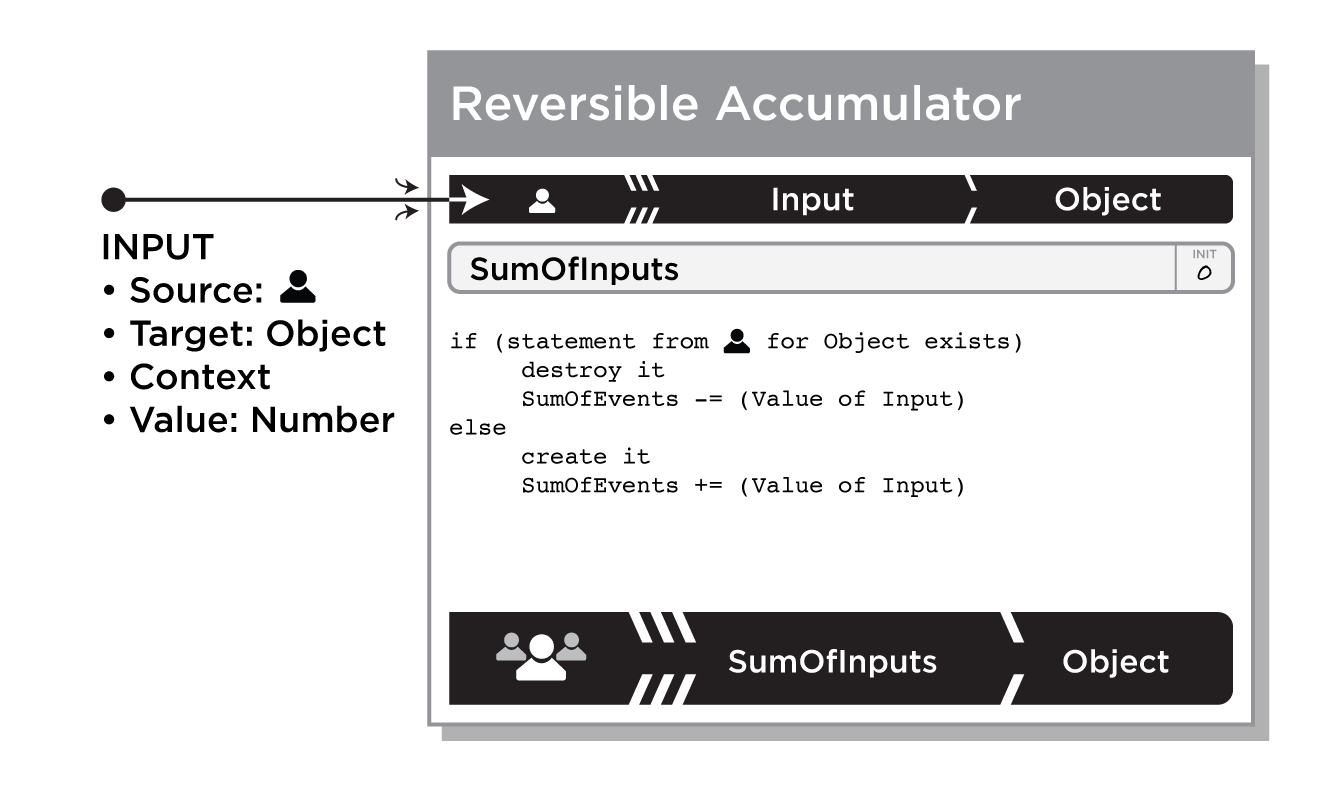

Reversible Accumulator

This process either 1) stores and adds a new input value to a running sum, or 2) removes the effects of a previous addition. Any application that would otherwise use a Chap_4-Simple_Accumulator , but wants the option either to review how individual sources are contributing to the sum or to undo the effects of buggy software or abusive user behavior, should consider using Chap_4-Reversible_Accumulator instead. The reason to prefer Chap_4-Simple_Accumulator is scale: storing a reputation statement for every contribution can be prohibitively database intensive if you expect a very large amount of traffic.

| Pros | Cons |
| --- | --- |
| Individual contributions can be automatically undone, allowing correction for abusive input and for bugs. Allows for individual inspection of source activity across targets. | Scales with database transaction rate: twice as expensive as Chap_4-Simple_Accumulator . Older inputs can have disproportionate value. If both positive and negative values are allowed, it becomes difficult to have any confidence in the meaning of the comparison of the sums. |
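One way to sketch a Reversible Accumulator is to keep each source's contribution next to the running sum. This example assumes a single target and treats a second input from the same source as the request to reverse; that convention and all names are assumptions for illustration.

```python
class ReversibleAccumulator:
    """Running sum for one target that can undo each source's contribution."""

    def __init__(self) -> None:
        self.running_sum = 0.0
        # source -> stored claim value, kept so it can be inspected or undone.
        self.statements: dict[str, float] = {}

    def on_input(self, source: str, claim_value: float) -> float:
        if source in self.statements:
            # Remove the effect of this source's previous addition.
            self.running_sum -= self.statements.pop(source)
        else:
            # Store the contribution, then add it to the sum.
            self.statements[source] = claim_value
            self.running_sum += claim_value
        return self.running_sum


points = ReversibleAccumulator()
points.on_input("alice", 0.5)
points.on_input("bob", 0.25)
after_reversal = points.on_input("alice", 0.5)  # undo alice's contribution
```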

Simple Average

Calculates and stores a running average including new input.

The Simple Average process is probably the most common reputation score. The process calculates the mathematical mean of the history of inputs. Its components are a SumOfInputs, a CountOfInputs, and the process claim value: AvgOfInputs.

| Pros | Cons |
| --- | --- |
| Easy for users to understand. | Older inputs can have disproportionate value to the average. See Chap_4-First_Mover_Effects . No way to recover from abuse. If this is needed, see Chap_4-Reversible_Average . Most contexts that use simple averages to compare ratings can suffer from Chap_4-Ratings_Bias_Effects and don't have even rating distributions. When used to compare ratings, simple averages don't accurately reflect group sentiment when there are very few components in the average. See Chap_4-Low_Liqudity_Effects . |
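The three components of a Simple Average map directly onto three stored values. The following sketch handles a single target; the class and method names are assumptions, while the attribute names mirror SumOfInputs, CountOfInputs, and AvgOfInputs.

```python
class SimpleAverage:
    """Running mean of all inputs received for a single target."""

    def __init__(self) -> None:
        self.sum_of_inputs = 0.0
        self.count_of_inputs = 0
        self.avg_of_inputs = 0.0  # the process claim value

    def on_input(self, claim_value: float) -> float:
        # Only the sum and count are kept; individual inputs are discarded,
        # which is why a simple average cannot undo a specific rating.
        self.sum_of_inputs += claim_value
        self.count_of_inputs += 1
        self.avg_of_inputs = self.sum_of_inputs / self.count_of_inputs
        return self.avg_of_inputs


acting_rating = SimpleAverage()
acting_rating.on_input(1.0)
current_average = acting_rating.on_input(0.5)
```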

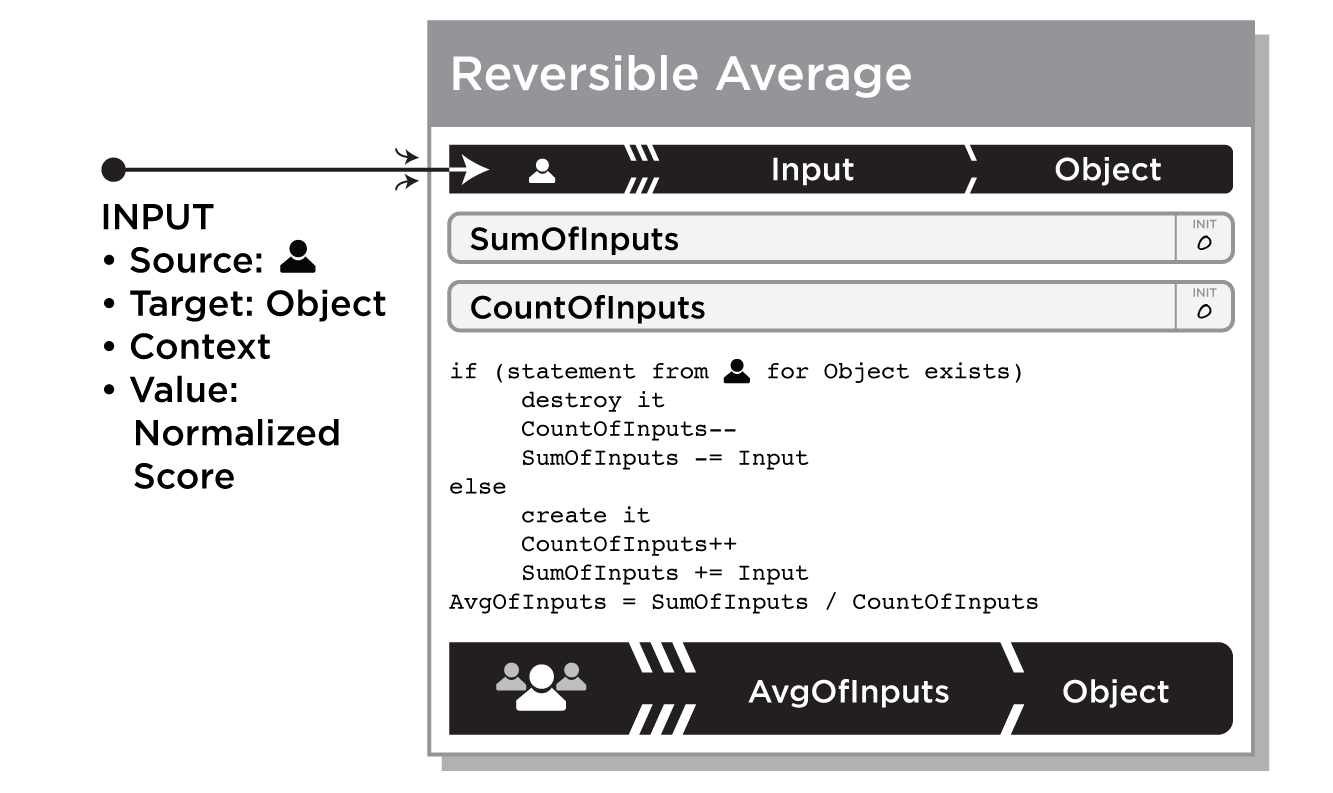

Reversible Average

A reversible version of Simple Average that keeps a reputation statement for each input and optionally uses it to reverse the effects if desired.

If a previous input exists for this context, the operation is a reversal: the previously stored claim value is removed from the AvgOfInputs, the CountOfInputs is decremented, and the source's reputation statement is destroyed. If there is no previous input for this context, compute a Simple Average (see Chap_4-Simple_Average ) and store the input claim value in a reputation statement by this source for the target with this context.

| Pros | Cons |
| --- | --- |
| Easy for users to understand. Individual contributions can be automatically undone, allowing correction for abusive input and for bugs. Allows for individual inspection of source activity across targets. | Scales with database transaction rate: twice as expensive as Chap_4-Simple_Average . Older inputs can have disproportionate value to the average. See Chap_4-First_Mover_Effects . Most contexts that use simple averages to compare ratings can suffer from Chap_4-Ratings_Bias_Effects and don't have even rating distributions. When used to compare ratings, simple averages don't accurately reflect group sentiment when there are very few components in the average. See Chap_4-Low_Liqudity_Effects . |
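The reversal logic of a Reversible Average can be sketched as below for a single target and context. Subtracting the stored value from the running sum is this example's way of removing it from the average; the names and the in-memory storage are assumptions.

```python
from typing import Optional


class ReversibleAverage:
    """Running mean that can remove a source's earlier input."""

    def __init__(self) -> None:
        self.sum_of_inputs = 0.0
        self.count_of_inputs = 0
        # source -> stored claim value (one reputation statement per source).
        self.statements: dict[str, float] = {}

    @property
    def avg_of_inputs(self) -> Optional[float]:
        if self.count_of_inputs == 0:
            return None  # no inputs left, so there is no average to report
        return self.sum_of_inputs / self.count_of_inputs

    def on_input(self, source: str, claim_value: float) -> Optional[float]:
        if source in self.statements:
            # Reversal: remove the stored value, decrement the count,
            # and destroy the source's statement.
            self.sum_of_inputs -= self.statements.pop(source)
            self.count_of_inputs -= 1
        else:
            # New input: store it and fold it into the average.
            self.statements[source] = claim_value
            self.sum_of_inputs += claim_value
            self.count_of_inputs += 1
        return self.avg_of_inputs


rating = ReversibleAverage()
rating.on_input("alice", 1.0)
before = rating.on_input("bob", 0.5)
after = rating.on_input("alice", 1.0)  # alice's rating is reversed
```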

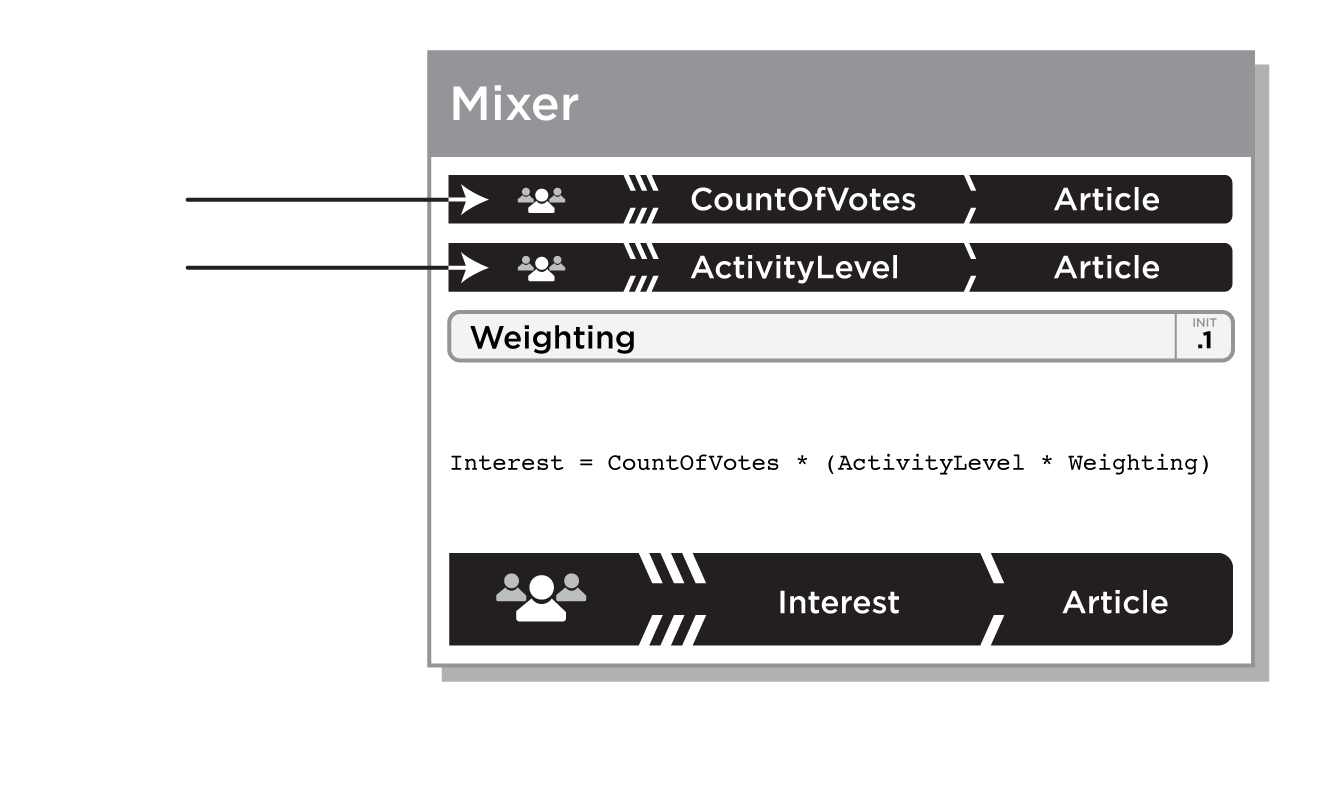

Mixer

Combines two or more inputs or read values according to a weighting or mixing formula into a single score. Preferably, the input and output values are normalized, but this is not a requirement. Mixers perform most of the custom calculations in complex reputation models.
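A weighted average is one common mixing formula, though by no means the only one. The sketch below assumes normalized inputs and hypothetical weights; dividing by the total weight keeps the output normalized as well.

```python
def weighted_mix(inputs: dict[str, float], weights: dict[str, float]) -> float:
    """Combine named input scores into one score using per-input weights."""
    total_weight = sum(weights[name] for name in inputs)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    weighted_sum = sum(inputs[name] * weights[name] for name in inputs)
    # Dividing by the total weight keeps normalized inputs within 0.0..1.0.
    return weighted_sum / total_weight


# Hypothetical example: acting counts three times as much as soundtrack.
movie_inputs = {"acting": 1.0, "soundtrack": 0.5}
movie_weights = {"acting": 3.0, "soundtrack": 1.0}
overall = weighted_mix(movie_inputs, movie_weights)
```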

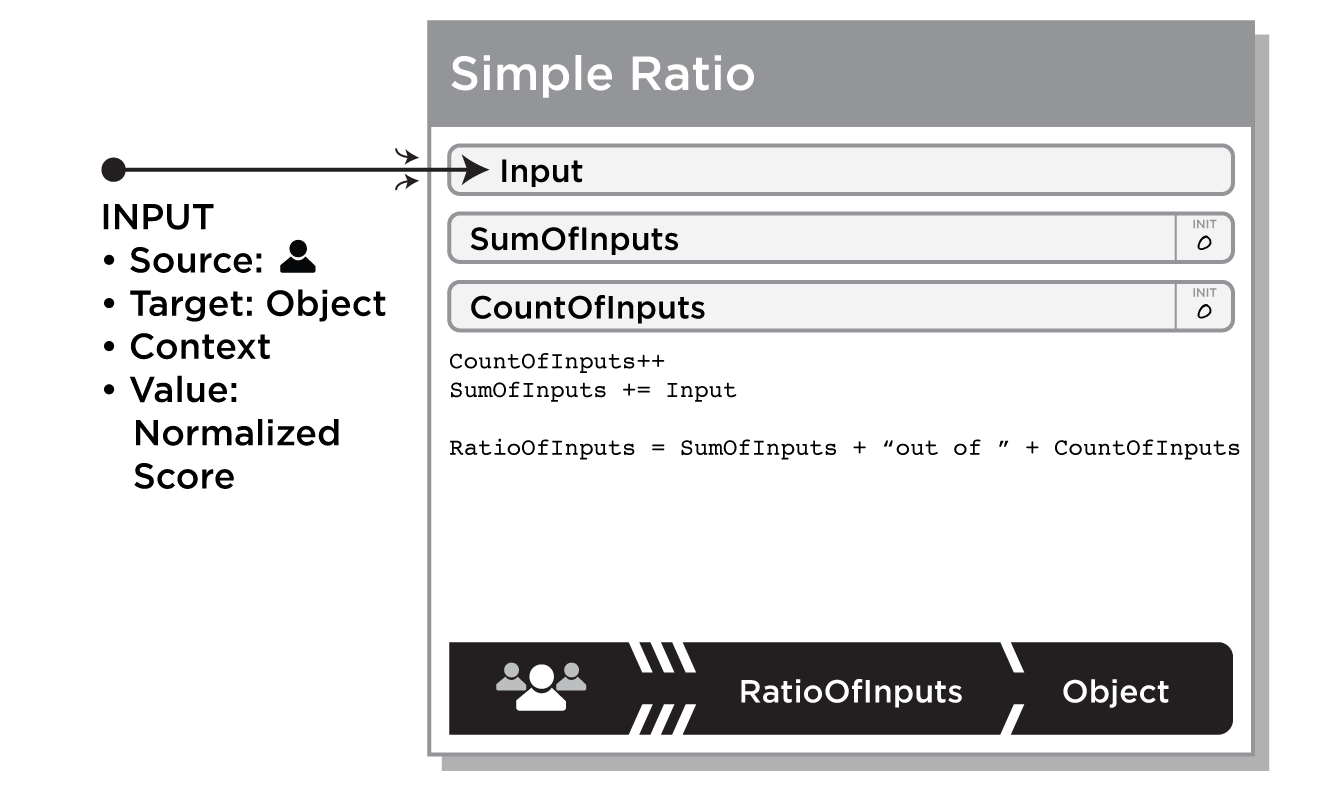

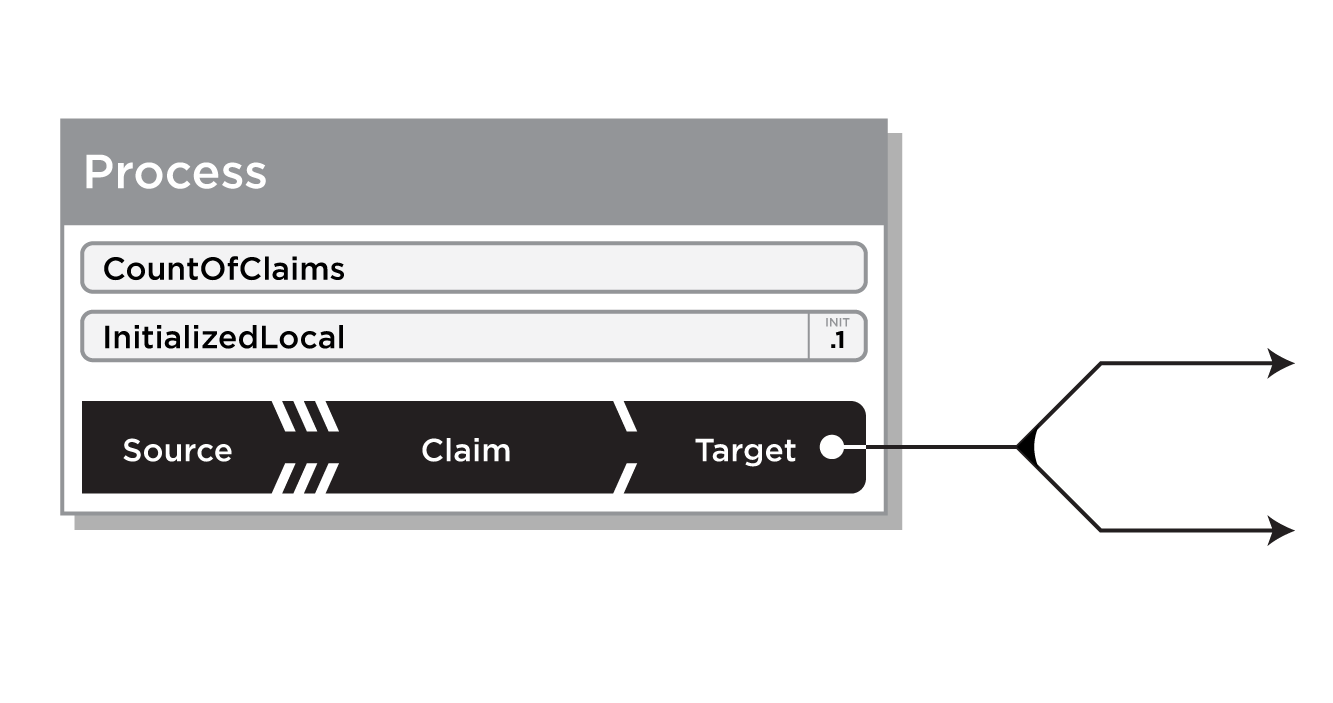

Simple Ratio

Counts the total number of inputs (total) and separately counts the number of times the input has the exact value 1.0 (hits), and stores the result as a text claim value of “(hits) out of (total)”.
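A minimal sketch of this ratio process for a single target follows; the class name is an assumption, while `hits`, `total`, and the text format come from the description above.

```python
class SimpleRatio:
    """Tracks how many inputs were exactly 1.0 out of all inputs received."""

    def __init__(self) -> None:
        self.hits = 0
        self.total = 0

    def on_input(self, claim_value: float) -> str:
        self.total += 1
        if claim_value == 1.0:  # only the exact value 1.0 counts as a hit
            self.hits += 1
        return f"{self.hits} out of {self.total}"


helpful_votes = SimpleRatio()
helpful_votes.on_input(1.0)
helpful_votes.on_input(0.0)
claim_text = helpful_votes.on_input(1.0)
```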

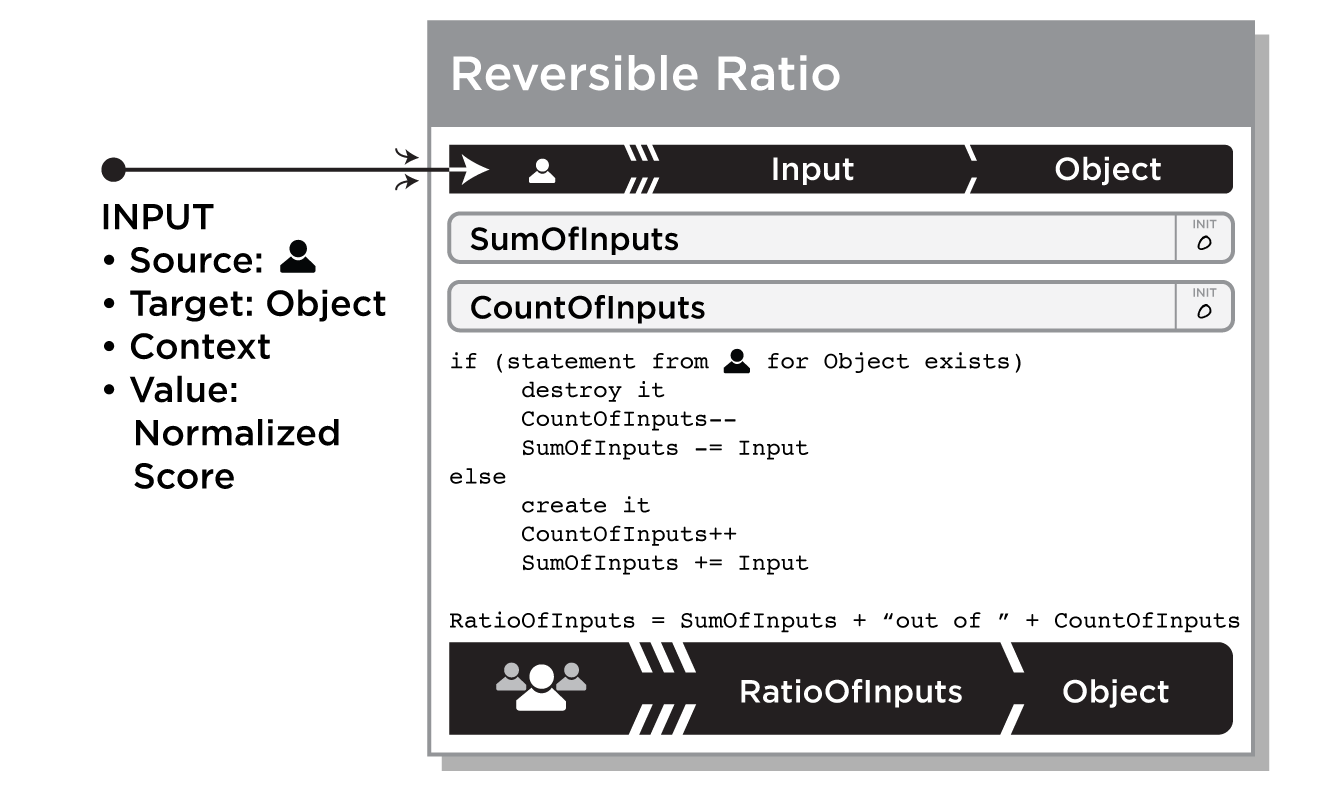

Reversible Ratio

If the source already has a stored input value for this target, reverses the effect of the previous input. Otherwise, counts the total number of inputs (total), separately counts the number of times the input has the exact value 1.0 (hits), stores the result as a text claim value of “(hits) out of (total)”, and also stores the source's input value as a reputation statement for this target for possible reversal and retrieval.
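The reversible variant needs the per-source value in order to know whether a reversal should also take back a hit. The sketch below covers a single target, and its names are assumptions.

```python
class ReversibleRatio:
    """Ratio of hits to total inputs that can undo a source's earlier input."""

    def __init__(self) -> None:
        self.hits = 0
        self.total = 0
        self.statements: dict[str, float] = {}  # source -> stored input value

    def on_input(self, source: str, claim_value: float) -> str:
        if source in self.statements:
            # Reverse the previous input, including its hit if it was one.
            previous = self.statements.pop(source)
            self.total -= 1
            if previous == 1.0:
                self.hits -= 1
        else:
            self.statements[source] = claim_value
            self.total += 1
            if claim_value == 1.0:
                self.hits += 1
        return f"{self.hits} out of {self.total}"


votes = ReversibleRatio()
votes.on_input("alice", 1.0)
before_undo = votes.on_input("bob", 0.0)
after_undo = votes.on_input("alice", 1.0)  # alice's hit is reversed
```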

Transformers: Data Normalization

Data transformation is essential in complex reputation systems, where information is coming into the model in many different forms. For example, consider an IP-address reputation model that wants to consider this-email-is-spam votes from users alongside incoming traffic rates to the mail server and a historical karma score for the user submitting the vote. Each value must be transformed to a common numerical range before being combined. When the result is determined, it might be useful to represent it as a discrete Spammer-DoNotKnow-NotSpammer category. Transformation processes do both the normalization and the denormalization in this example. Why bother with all this transformation? Why not just deal directly with votes and stars and ratings? See Chap_4-Power_and_Danger_of_Normalization .

Simple Normalization (and Weighted Transform)

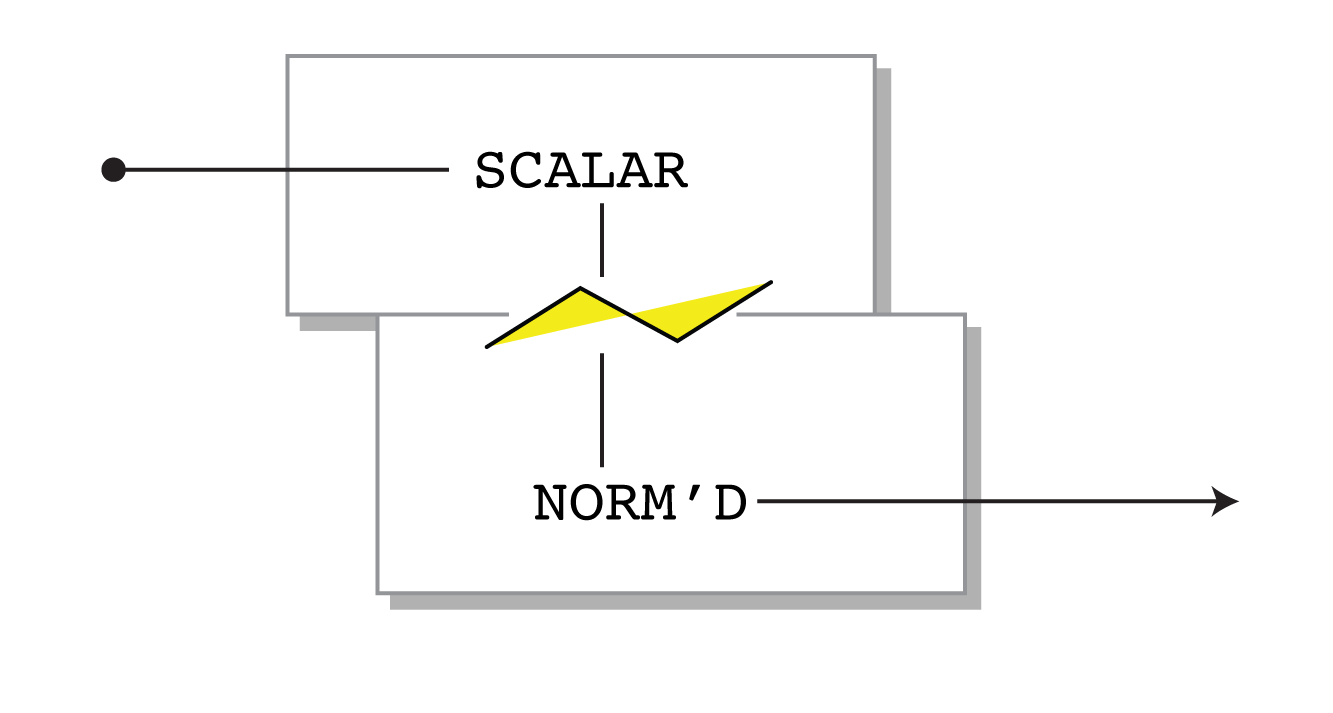

Converting from a (usually scalar) score to the normalized range of 0.0 to 1.0. Often custom built, and typically accomplished with functions and tables.
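The "functions and tables" approach might look like the following sketch. The letter-grade values in the table are hypothetical; a real system would choose them to suit its own context.

```python
# Table-based transform: each letter grade maps to a normalized score.
# These particular values are an assumption made for illustration.
LETTER_GRADE_TABLE = {"A": 1.0, "B": 0.75, "C": 0.5, "D": 0.25, "F": 0.0}


def normalize_letter_grade(grade: str) -> float:
    """Look up the normalized score for a letter grade such as 'B'."""
    return LETTER_GRADE_TABLE[grade.strip().upper()]


def normalize_thumb(thumbs_up: bool) -> float:
    """Function-based transform: thumbs-up becomes 1.0, thumbs-down 0.0."""
    return 1.0 if thumbs_up else 0.0


critic_score = normalize_letter_grade("b")
user_score = normalize_thumb(True)
```

Once both inputs are on the same 0.0 to 1.0 range they can be fed to the same rollup process, whatever form they were gathered in.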

Scalar Denormalization

Converting (usually normalized) input values into a regular scale, such as Bronze, Silver, Gold, number of stars, or rounded percentage. Often custom built, and typically accomplished with functions and tables.
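As one illustration, a threshold table can turn a normalized score into a Bronze, Silver, or Gold level. The cut-off values below are assumptions chosen for the example.

```python
from bisect import bisect_right

# Hypothetical thresholds: below 0.33 is Bronze, below 0.66 is Silver,
# and everything from 0.66 upward is Gold.
THRESHOLDS = [0.33, 0.66]
LEVELS = ["Bronze", "Silver", "Gold"]


def denormalize_to_level(score: float) -> str:
    """Map a normalized score onto a named level using the threshold table."""
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"{score} is not a normalized score")
    # bisect_right counts how many thresholds the score has reached.
    return LEVELS[bisect_right(THRESHOLDS, score)]


new_member = denormalize_to_level(0.2)
regular = denormalize_to_level(0.5)
veteran = denormalize_to_level(0.9)
```

Keeping the thresholds in a table rather than in code makes it easy to retune the levels later without touching stored scores.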

External Data Transform

Accessing a foreign database and converting its data into a locally interpretable score, usually normalized. The example McAfee™ transformation seen in Figure_2-8 shows a table-based transformation from external data to a reputation statement with a normalized score. What makes an external data transform unique is that retrieving the original value is often a network- or computationally expensive operation, so it may be executed implicitly on demand, periodically, or only when explicitly requested by a process.

Routers: Messages, Decisions, and Termination

Besides calculating the values in a reputation model, there is important meaning in the way a reputation system is wired together, both to itself and to the calling application: connecting the inputs to the transformers to the rollups to the processes that decide who gets notified of whatever side effects are indicated by the calculation.

Common Decision Process Patterns

Process types are described here as pure primitives, but we don't mean to imply that your reputation processes can't or shouldn't combine the various types. It is completely normal to have a Simple Accumulator that applies Mixer semantics.

Simple Terminator

Any process can choose not to send any message and optionally return or signal its result to the application environment.

Simple Evaluator

The basic “If..then..” statement of reputation models. It usually compares two inputs and sends a message on to one or more other processes. It is important to remember that the inputs may arrive asynchronously and separately, so the evaluator may need to have its own state.
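Because the two inputs may arrive at different times, an evaluator has to remember the most recent value of each. The sketch below assumes two named inputs, "score" and "threshold", and a callback that stands in for sending a message to the next process; all of these names are hypothetical.

```python
from typing import Callable


class SimpleEvaluator:
    """Forwards the score once it is known to meet the threshold."""

    def __init__(self, forward: Callable[[float], None]) -> None:
        self.forward = forward              # stand-in for messaging a process
        self.latest: dict[str, float] = {}  # state kept between inputs

    def on_input(self, name: str, value: float) -> None:
        self.latest[name] = value
        # Evaluate only once both inputs have arrived, in either order.
        if "score" in self.latest and "threshold" in self.latest:
            if self.latest["score"] >= self.latest["threshold"]:
                self.forward(self.latest["score"])


forwarded: list[float] = []
evaluator = SimpleEvaluator(forwarded.append)
evaluator.on_input("score", 0.9)      # nothing forwarded yet: no threshold
evaluator.on_input("threshold", 0.8)  # both present and the condition holds
```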

Terminating Evaluator

This evaluator ends the execution path started by the initial input, usually by returning or sending a signal to the application when some special condition or threshold has been met.

Message Splitter

Replicates a message and forwards it to more than one model event process. This starts multiple simultaneous execution paths for one reputation model, depending on the specific characteristics of the reputation sandbox implementation. See Chapter_3 .

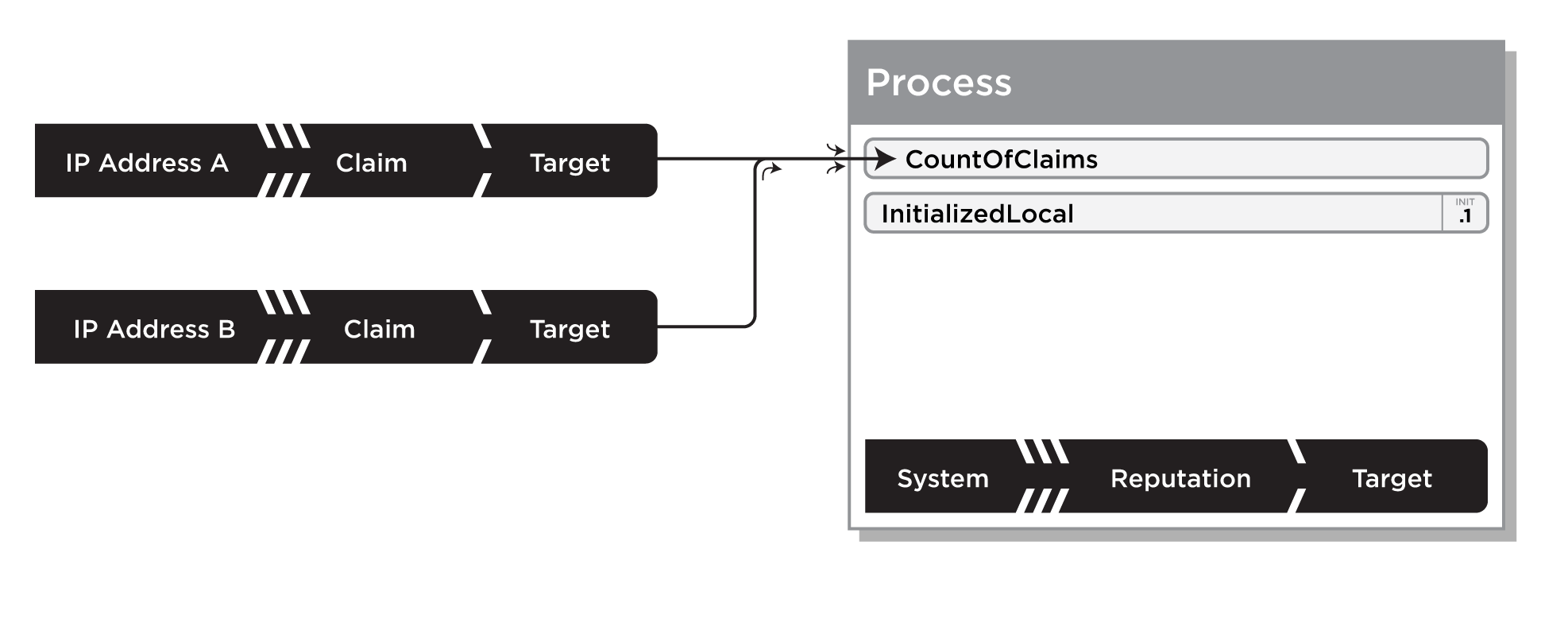

Conjoint Message Delivery

When messages arrive from multiple different input sources with the exact same context, they are treated as if they have the exact same meaning as each other. For example, in a very large scale system, multiple servers may send reputation input messages to a shared reputation system environment reporting on user actions: it doesn't matter which server sent the message, they are all treated the same by the reputation model.

Input

All reputation models are presumed to be dormant unless they are activated by a specific input arriving as a message to the model. Input gets the ball rolling…

Reputation Statements as input

Our diagramming convention shows that reputation statements can be inputs. This isn't strictly true; it is just a notational shorthand for the application creating a reputation statement itself and passing its context, source, claim, and target in a message to the model. Be careful not to confuse this with the case when a reputation statement is the target of an input message, which is covered in detail in Chap_2-Reputation_Targets .

Typical Inputs

Normally, several attributes are required by every message to a reputation process: the source, the target, and an input value. Often, the contextual claim name and other values, such as a timestamp and a reputation process ID, are also required in order for the reputation system to properly initialize, calculate and store the required state.

Periodic Inputs

Sometimes reputation models will need to be activated based on a non-reputation based input, such as a timer that will perform an external data transform. Presently, we provide no explicit mechanism for reputation models to spontaneously wake-up and begin executing, and this has an effect on mechanisms such as those detailed in Chap_4-Decay .

Output

Many reputation models terminate without explicitly returning a value to the application at all - instead the output is stored asynchronously in reputation statements. The application then retrieves the results as reputation statements as they are needed - always getting the best possible result, even if it was generated as the result of some other user on some other server in another country.

Return Values

Simple reputation environments, where the whole model is executed serially, in-line with the actual input actions, tend to use call-return semantics: the reputation model runs for exactly one input at a time and runs until it terminates by returning a copy of the rollup value it calculated. Large-scale, asynchronous reputation sandboxes, such as the one described in Chapter_3 , don't return results in this manner; they terminate silently and sometimes send Chap_4-Signals .

Signals: Breaking Out of the Reputation Sandbox

When a reputation model wants to notify the application environment that something significant has happened and special handling is required, it sends a signal: a message that breaks out of the reputation sandbox. The specific mechanism of this signalling is specific to the sandbox implementation, but is represented consistently using the arrow-out-of-box image in our diagramming grammar.

Logging

Stores a copy of the current score in an external store with an asynchronous write. This is usually the result of an Evaluator deciding a significant event requires special output. For example, a user karma score has reached a new threshold and the hosting application should be notified to send a congratulatory message to the user.

Craftsman's tips: Reputation is Tricky

When describing reputation models and systems using our visual grammar, it is tempting to just take all the elements and plug them together in the simplest possible combinations to create an Amazon-like ratings and review system or a Digg-like voting model or even a points-based karma incentive model as seen on StackOverflow - in practice the simple implementation of these systems is fraught with peril. This section will describe several pitfalls that need to be addressed when considering the design of reputation models as the get deployed in the wild, where people with myriad personal incentives interact with them both as sources of reputation and as consumers.

The Power and Costs of Normalization

We make much of normalization in this book. Indeed, for almost all the reputation models we outline, the calculations are done with numbers that range from 0.0 to 1.0, even when normalization and denormalization might seem like extraneous steps. Here are the reasons normalization is such a powerful tool for reputation:

- Normalized Values are Easy to Understand

- Normalized claim values are always in a fixed, well-understood range. When applications read your claim values from the reputation database, they know that 0.5 means the middle of the range. Without normalization, reading a claim value of 5 is ambiguous: it could mean 5 out of 5 stars, 5 on a 10-point scale, 5 thumbs up, 5 votes out of 50, or 5 points.

- Normalized Values are Portable (Messages and Data Sharing)

- Probably the most compelling reason to normalize the claim values in your reputation statements and messages is that the data becomes portable across the various display contexts (see Chapter_8 ), and any rollup process code in your reputation sandbox that accepts and outputs normalized values can be reused. Other applications will not require special understanding of your claim values in order to interpret them.

- Normalized Values are Easy To Transform (Denormalize)

- Converting a normalized score to a percentage is as simple as multiplying it by 100. It can be converted to any scalar value by using a table or performing a simple multiplication. For example, converting to a 5-star rating could be as simple as multiplying the normalized score by 5 (equivalently, dividing the percentage by 20). Also, this allows the input to be of one type, such as a thumb-up (1) or thumb-down (0), while the normalized aggregate result may end up being represented as a percentage (0 - 100%) or turned into a three-point scale of thumb-up (0.66 - 1.0), thumb-down (0.0 - 0.33), or thumb-to-side (0.33 - 0.66). Using a normalized score allows this conversion to take place at display time without committing the converted value to the database. Also, the exact same values might be denormalized by different applications with completely different needs.
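As a minimal sketch of these conversions, the Python below normalizes star and thumb inputs and denormalizes them at display time. The function names are hypothetical, and the way the boundary values 0.33 and 0.66 are assigned to a thumb bucket is an assumption, since the ranges given above meet at those points.

```python
from statistics import mean


def normalize_stars(stars: float, max_stars: int = 5) -> float:
    """Map a star rating onto 0.0-1.0 (for example, 4 of 5 stars -> 0.8)."""
    return stars / max_stars


def to_percent(score: float) -> float:
    """Denormalize a 0.0-1.0 score to a percentage."""
    return score * 100.0


def to_stars(score: float, max_stars: int = 5) -> float:
    """Denormalize a 0.0-1.0 score to a star rating."""
    return score * max_stars


def to_thumb(score: float) -> str:
    """Denormalize to a three-point scale.

    Assumption: scores of exactly 0.66 count as thumb-up and scores of
    exactly 0.33 count as thumb-down.
    """
    if score >= 0.66:
        return "thumb-up"
    if score <= 0.33:
        return "thumb-down"
    return "thumb-to-side"


# Thumb votes arrive as 1 (up) or 0 (down); the aggregate is a simple mean.
votes = [1, 1, 1, 0]
aggregate = mean(votes)          # 0.75, stored in normalized form
display = to_thumb(aggregate)    # converted only at display time
```

The stored value stays normalized; each application chooses its own denormalization when it renders the score.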

As with all things, the power of normalization comes with some costs:

- Combining Normalized Scalar Values Introduces Bias

- Using different normalized numbers in large reputation systems can cause unexpected biases when the original claim types were scalars with slightly different ranges. Averaging normalized 4-star-maximum ratings (25% per star) with 5-star-maximum ratings (20% per star) causes the scores to clump together, due to rounding errors, when the average is denormalized back to 5 stars. See the table below.

| Scale | 1 Star norm'd | 2 Stars norm'd | 3 Stars norm'd | 4 Stars norm'd | 5 Stars norm'd |
| --- | --- | --- | --- | --- | --- |
| 4-Stars | 25 | 50 | 51-75 | 76-100 | N/A |
| 5-Stars | 20 | 40 | 41-60 | 61-80 | 81-100 |
| Averaged Range / Denorm'd | 0-22 / | 23-45 / | 46-67 / | 68-90 / | 78-100 / |

Liquidity: You won't get enough input

A question of liquidity -

When is 4.0 > 5.0? When enough people say it is!

Consider the following problem with simple averages: it is mathematically unreasonable to compare two similar targets with averages made from significantly different numbers of inputs. For the first target, say there are only three (3) ratings averaging 4.667 stars, and you compare its rounded display to that of a target with a much greater number of inputs, say five hundred (500), averaging 4.4523 stars, which displays as a lower rounded score. The second target better reflects the true consensus of the inputs. In fact, the target with the lower average is most likely the better of the two, since there just isn't enough information on the first target to be sure of anything. Most simple-average displays that have too few inputs shift this problem onto the users by displaying the simple average and also providing the number of inputs, usually in parentheses, next to the score, like this: (142).

But fobbing the interpretation of averages off on the user doesn't help when attempting to rank targets based on averages: a lone 5-star rating on a brand-new item will put it at the top of any ranked results it appears in. This is inappropriate, and you need to compensate for this effect.

The following liquidity compensation algorithm is provided as a method for offsetting this variability for very small sample sizes. It is used in several places at Yahoo! where a large number of new targets are added daily and there are therefore often very few ratings applied to each one.

Implement a score that will adjust the ranking of an entity based on the quantity of ratings. Ideally, this score should be calculated on the fly and should not require additional storage.

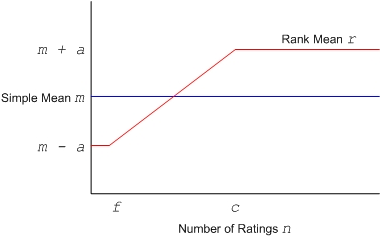

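$$\text{RankMean}_r = \text{SimpleMean}_m - \text{AdjustmentFactor}_a + \text{LiquidityWeight}_l \times \text{AdjustmentFactor}_a$$

$$\text{LiquidityWeight}_l = \min\left(\max\left(\frac{\text{NumRatings}_n - \text{LiquidityFloor}_f}{\text{LiquidityCeiling}_c},\ 0\right),\ 1\right) \times 2$$

Or, more compactly:

$$r = m - a + \min\left(\max\left(\frac{n - f}{c},\ 0\right),\ 1\right) \times 2 \times a$$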

This produces the curve seen in Figure_4-1 . Though one might imagine that a more mathematically continuous curve would be appropriate, this linear approximation can be done with simple non-recursive calculations and requires no knowledge of previous individual inputs.

Suggested initial values for a, c, and f (assuming normalized inputs):

AdjustmentFactor: a = 0.10

This is 10% of a normalized input score. For many applications, such as 5-star ratings, it is within integer rounding error. In this example, if the AdjustmentFactor is set much higher, it will cause confusion when lots of four-star entities are ranked before five-star ones. If it is set much lower, it may not have the desired effect.

LiquidityFloor: f = 10

This is the threshold for the number of inputs required before the rank is positively affected. In an ideal environment this number should be between five (5) and ten (10), and based on our experience with large systems, it should never be set lower than three (3). Higher values help with abuse mitigation and give a better representation of the consensus of opinion.

LiquidityCeiling: c = 60

This is the threshold after which additional inputs will not get a weighting bonus. In short, we trust the average to be representative of the optimum score. This number must not be lower than 30, which in statistics is the customary minimum sample size for a t-score. Note that the t-score cutoff is 30 for data that is assumed to be un-manipulated (read: random).

You are encouraged to consider other values for a, c, and f, especially based on any data you have about the characteristics of your sources and their inputs.
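The liquidity compensation formula translates directly into code. The sketch below is one possible Python rendering using the suggested starting values; the function and parameter names are ours, not part of any Yahoo! implementation, and the inputs are assumed to be normalized means.

```python
def liquidity_weight(num_ratings: int, floor: float = 10,
                     ceiling: float = 60) -> float:
    """l = min(max((n - f) / c, 0), 1) * 2, so l ranges from 0.0 to 2.0."""
    return min(max((num_ratings - floor) / ceiling, 0.0), 1.0) * 2.0


def rank_mean(simple_mean: float, num_ratings: int,
              adjustment: float = 0.10, floor: float = 10,
              ceiling: float = 60) -> float:
    """r = m - a + l * a, computed on the fly from the mean and the count."""
    weight = liquidity_weight(num_ratings, floor, ceiling)
    return simple_mean - adjustment + weight * adjustment


# The two targets from the example, normalized from a 5-star scale.
sparse = rank_mean(4.667 / 5, 3)      # three ratings: full penalty applied
liquid = rank_mean(4.4523 / 5, 500)   # 500 ratings: full bonus applied
```

With these defaults, a target with ten or fewer ratings is ranked a full adjustment (0.10) below its simple mean, and one with 70 or more (floor plus ceiling) is ranked 0.10 above it, so the 500-rating target sorts ahead of the 3-rating target even though its simple mean is lower.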

Bias, Freshness and Decay

When computing reputation values from user-generated ratings, there are several common psychological and chronological issues that will likely appear in your data. Often, the data will be biased because of the cultural mores of your audience or simply because of the way you are gathering and sharing the reputations, such as always favoring the display of previously highly rated items. It may also be stale, because the nature of the target being evaluated is no longer relevant. For example, the ratings for the features of a specific model of digital camera, such as the number of pixels in each image, may be irrelevant in just a few months' time as the technology advances. There are numerous solutions and workarounds for these problems, one of which is to implement a method to decay old contributions to your reputations. Read on for an explication of these problems and what you can do about them.

Ratings Bias Effects

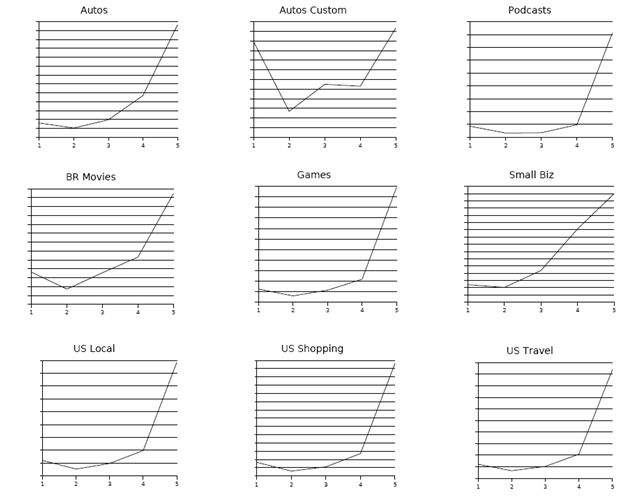

Figure_4-2 shows the graphs of 5-star ratings from nine different Yahoo! sites with all the volume numbers redacted. We don't need them, since we only want to talk about the shapes of the curves.

Eight of these graphs have what is known to reputation system aficionados as J-curves, where the far-right point (5 stars) has the very highest count, 4 stars the next, and 1 star a little more than the rest. Generally, a J-curve is considered less than ideal for several reasons: the average aggregate scores all clump together between 4.5 and 4.7, and therefore they all display as 4 or 5 stars and are not so useful for visually sorting between options. Also, this sort of curve begs the question: Why use a 5-point scale at all? Wouldn't you get the same effect with a simpler thumbs-up/down scale, or maybe even just a super-simple favorite pattern?

The outlier amongst the graphs is Yahoo! Autos Custom (which is now shut down), where users were rating the car-profile pages created by other users. It has a W-curve: lots of 1-, 3-, and 5-star ratings and a healthy share of 4- and 2-star ratings as well. This is a healthy distribution and suggests that “a 5-point scale is good for this community”.

But why were Autos Custom's ratings so very different from those of Shopping, Local, Movies, and Travel?

The biggest difference is most likely that Autos Custom users were rating each other's content. The other sites had users evaluating static, unchanging, or feed-based content in which they don't have a vested interest.

In fact, if you look at the curves for Shopping and Local, they are practically identical and have the flattest J hook, giving the lowest share of 1-stars. This is a direct result of the overwhelming use pattern for those sites: users come to find a great place to eat or a vacuum to buy. They search, and the results with the highest ratings appear first. If the user has experienced that object, they may well also rate it, if it is easy to do so, and most likely will give it 5 stars (see Chap_4-First_Mover_Effects ). If they see an object that isn't rated but that they like, they may also rate and/or review it, usually giving 5 stars, so that others may share in their discovery; otherwise, why bother? People don't think that mediocre objects are worth the bother of seeking out and rating on the internet. So the curves are the direct result of the product design intersecting with the users' goals. This pattern (I'm looking for good things, so I'll help others find good things) is a prevalent form of ratings bias. An even stronger example happens when users are asked to rate episodes of TV shows: every episode is rated 4.5 stars plus or minus 0.5 stars, because only the fans bother to rate the episodes, and no fan is ever going to rate an episode below a 3. Look at any popular running TV show on Yahoo! TV or [another site].

Looking more closely at how Autos Custom ratings worked and how the content was being evaluated showed why 1-stars were given out so often: users were providing feedback to other users in order to get them to change their behavior. Specifically, you would get one star if you 1) didn't upload a picture of your ride, or 2) uploaded a dealer stock photo of your ride. The site is Autos Custom, after all! The 5-star ratings were reserved for the best of the best. Two through four stars were actually used to evaluate the quality and completeness of the car's profile. Unlike on all the other sites graphed here, the 5-star scale truly represented a broad sentiment, and people worked to improve their scores.

There is one ratings curve not shown here, the U-curve, where 1 and 5 stars are disproportionately selected. Some highly controversial objects on Amazon see this rating curve. Yahoo!'s now-defunct personal music service also saw this kind of curve when introducing new music to established users: 1 star came to mean “Never play this song again” and 5 meant “More like this one, please”. If you are seeing U-curves, consider that 1) the users are telling you that something other than what you wanted to measure is important, and/or 2) you might need a different rating scale.

First Mover Effects

When working with quantitative measures based on user input, whether it be ratings or measuring participation by counting the number of contributions to a site, there are several issues that arise as the result of bootstrapping a community that we group together under the name first mover effects:

- Early Behavior Modeling and Early Ratings Bias

- The first people to contribute to an application have a disproportionate effect on the character and future contributions of others. After all, this is social media, and usually people are trying to fit into any new environment. For example, if the tone of comments is negative, new contributors will also tend to be negative, which will also lead to bias in any user-generated ratings. See Chap_4-Ratings_Bias_Effects .

When introducing user generated content and associated reputation systems, it is important for the operator to take explicit special steps to model behavior for the earliest users in order to set the pattern for those who follow.

- Discouraging New Contributors

- Special care must be taken with systems that contain leaderboards (see Chap_8-Leaderboard ), whether used for content or for users. Items displayed on leaderboards tend to stay on the leaderboards, because more people see those items and therefore click on, rate, and comment on them disproportionately, creating a self-sustaining feedback loop. This not only keeps newer items and users from breaking into the leaderboards, it also discourages new users from even making the effort to participate, thinking they are too late to influence the result in any significant way. Though this is true for all reputation scores, even for a digital camera, the problem is particularly acute in the case of simple point-based karma systems, where users are given ever-increasing points for activity and the leaders, over years of feverish activity, amass literally millions of points, making it mathematically impossible for new users to ever catch up.

Freshness and Decay

Time leeches value from reputation: Chap_4-First_Mover_Effects discussed how simple reputation systems cause early contributions to be disproportionately valued over time, but there's also the simple problem that ratings become stale over time as their target reputable entities change or become unfashionable: businesses change ownership, technology becomes obsolete, cultural mores shift.

The key insight for dealing with this problem is to remember the expression: “What did you do for me this week?” When considering how reputation is displayed and used indirectly to modify the user's experience, remember to account for time-value. A common method for compensating for time in reputation values is to apply a decay function to them: have the older reputations lose value as time goes on, at a rate that is appropriate to the context. For example, digital camera ratings for resolution should probably lose half their weight every year, whereas a restaurant review may only lose ten percent of its value in the same interval.
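To make the camera and restaurant rates concrete, here is a minimal Python sketch of a decay function. It assumes the decay compounds (a rating that loses half its weight every year retains one quarter after two years); the function names and the representation of ratings as (score, age) pairs are illustrative choices, not a prescribed design.

```python
from typing import Iterable, Optional, Tuple


def decay_weight(age_years: float, retained_per_year: float) -> float:
    """Weight of a rating after age_years, assuming compounding decay.

    retained_per_year is the fraction of weight kept each year:
    0.5 for the camera example, 0.9 for the restaurant example.
    """
    return retained_per_year ** age_years


def decayed_average(ratings: Iterable[Tuple[float, float]],
                    retained_per_year: float) -> Optional[float]:
    """Weighted mean of (normalized_score, age_years) pairs."""
    total_weight = 0.0
    weighted_sum = 0.0
    for score, age_years in ratings:
        weight = decay_weight(age_years, retained_per_year)
        total_weight += weight
        weighted_sum += score * weight
    return weighted_sum / total_weight if total_weight else None


# A two-year-old rating under each rate.
camera_weight = decay_weight(2, 0.5)       # 0.25 of its original weight
restaurant_weight = decay_weight(2, 0.9)   # about 0.81 of its original weight
```

The same old rating counts for far less in the camera context than in the restaurant context, which is the point of choosing a rate appropriate to each.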

Here are some specific algorithms for decaying a reputation score over time:

- Linear Aggregate Decay

- Every score in the corpus is decreased by a fixed percentage per unit of time elapsed whenever it is recalculated. This performs well, but rarely updated reputations will have disproportionately high values. To compensate, a timer input can perform the decay process at regular intervals.

- Dynamic Decay Recalculation

- Every time a score is added to the aggregate, recalculate the value of every contributing score. This provides a smoother curve, but tends to become computationally expensive (O(n²)) over time.

- Window-based Decay Recalculation

- Yahoo! Spammer IP reputation used a time-window-based decay calculation: a fixed-time or fixed-size window of previous contributing claim values is kept with the reputation for dynamic recalculation when needed. New values push old values out of the window, and the aggregate reputation is recalculated from those that remain. This method produces a score with the most recent information available, but the information for low-liquidity aggregates may still be old.

- Time Limited Recalculation

- This is the de facto method that most engineers use to present any information in an application: retrieve all of the ratings in a time range from the database and compute the score just in time. This is the most costly method, as it involves always hitting the database to consider an aggregate reputation (say, for a ranked list of hotels), when 99% of the time the value is exactly the same as it was the last time it was calculated. This method also may throw away still contextually valid reputation. We recommend trying some of the more performant suggestions above.
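Of the approaches above, the window-based one is the easiest to sketch. The Python below shows only the fixed-size variant, with a simple mean as the aggregate; the class name is hypothetical and this is not the Yahoo! implementation, just an illustration of new values pushing old ones out of the window.

```python
from collections import deque
from typing import Deque, Optional


class WindowedReputation:
    """Fixed-size window of the most recent normalized claim values."""

    def __init__(self, window_size: int) -> None:
        # A deque with maxlen silently drops the oldest value when full.
        self._window: Deque[float] = deque(maxlen=window_size)

    def add(self, claim_value: float) -> Optional[float]:
        """Record a new claim and return the recalculated aggregate."""
        self._window.append(claim_value)
        return self.score()

    def score(self) -> Optional[float]:
        """Mean of the values still in the window, or None if empty."""
        if not self._window:
            return None
        return sum(self._window) / len(self._window)


# Two old negative claims are pushed out by three newer positive ones.
reputation = WindowedReputation(window_size=3)
for value in [0.0, 0.0, 1.0, 1.0, 1.0]:
    latest = reputation.add(value)
```

A fixed-time variant would store a timestamp with each value and discard entries older than the cutoff before recalculating. Either way, a target that receives few inputs keeps old values in its window, which is the low-liquidity caveat noted above.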

Implementer's notes

Most of the computational building blocks, such as the Accumulator, Sum, and even Rolling Average, were implemented both in the reputation model execution environment and in the database layer, because keeping the read-modify-write code for stateful values as close to the data store as possible provided important performance improvements. For small systems, it may be reasonable to keep the entire reputation system in memory at once, thus avoiding this complication. But be careful! If your site is as successful as you hope it might someday be, an all-memory design may well come back to bite you, hard!