A Grammar for Reputation

A Graphical Grammar

The expression reputation system describes a wide array of practices, technologies, and user interface elements. To help you make sense of them, this chapter provides a comprehensive lexicon of attributes, processes, and presentation that we will use going forward to describe current systems and to define new ones.

Much of the terminology surrounding reputation in the current marketplace is inconsistent, confusing, and even contradictory, depending on what site you visit or what expert you read. Over more than 30 years of evaluating and developing scores of online and offline reputation systems, the authors have identified many concepts and attributes that these systems share: enough similarity to justify proposing a common lexicon and graphical grammar as a shared foundation of understanding. We hope this work will raise the bar of quality for all future reputation systems and help prevent foreseeable errors that might sabotage otherwise successful deployments.

Many terms are used to describe and analyze the relations between concepts and attributes in a specific domain of knowledge, including modeling languages, meta-modeling, ontologies, taxonomies, grammars, and patterns. Though we like to call ours a graphical grammar, this excerpt from the Wikipedia entry for meta-modeling describes what we hope to accomplish with this section, and indeed this entire book: a consistent and standard framework for describing reputation models and systems.

Meta-modeling

… a formalized specification of domain-specific notations … following a strict rule set.

When describing this reputation systems grammar - the common concepts, attributes, and methods involved - we will borrow metaphors from basic chemistry: atoms [reputation statements] and their constituent particles [sources, claims, targets] are bound with forces [messages and processes] to make up molecules [reputation models], which comprise the core useful substances in the universe. Sometimes these molecules are mixed with others in solutions [reputation systems] to create stronger, lighter or otherwise more useful compounds than would normally occur randomly.

This metaphor will only be used in this chapter as a kind of intellectual scaffolding as we introduce the grammar. We opted to use it because we're not going to start at the top or the bottom of the conceptual space, but somewhere in the middle.

We will start with our atom, the reputation statement, and describe a grammar sufficient for understanding and diagramming the common reputation systems deployed today, as well as for designing your own.

<note>The reputation systems graphical grammar is constantly undergoing change, so be sure to visit this book's companion wiki, http://buildingreputation.com, for up-to-date information and to participate in its evolution.

</WRAP>

Atoms and Particles: The Reputation Statement and its Components

When attempting to deconstruct the known universe of online reputation systems, we observed that a single common element was always the result of their calculations: the reputation statement. As we proceed with diagramming, you will notice that these systems compute many different reputation values that turn out to possess this same elemental form. In practice, many of the most prevalent inputs share this format as well. Just as matter is made up of atoms, reputation is made up of reputation statements.

These atoms are always of the same basic shape, but vary in their specific details. Some are about people, some are about products. Some are numeric, some are votes, some are comments. Many are created directly by users, but a surprising share are created by software.

In chemistry, every atom is made of the same kinds of particles (electrons, protons, and neutrons), but in different configurations and numbers depending on its element. The exact configuration of particles in an element gives rise to specific properties when the element is observed en masse in nature: it may be stable or volatile, gaseous or solid, radioactive or inert, among many other properties. But every object with mass is made of these atoms.

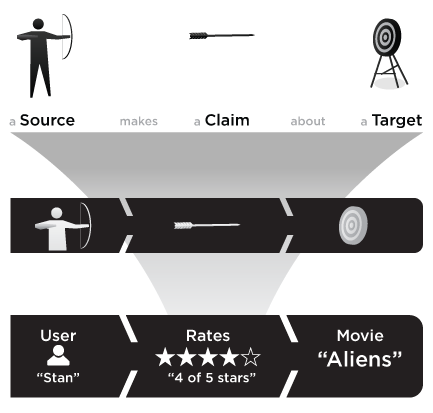

The reputation statement is like an atom in that it, too, has constituent particles: a source, a claim, and a target (see Figure_2-1). The exact characteristics (type and value) of each of these particles determine the statement's element type and its utility to your application.

Reputation Sources: Who [or what] is making a claim?

Every reputation statement is made by someone or something; otherwise, it is impossible to evaluate the claim. “Some people say product X is great.” is meaningless, or at least it should be. Who are “some people”? Are they like me? Do they work for the company that makes product X? Without knowing something about who or what made a claim, it has little use.

- Entity

- An entity is any object that can be the source or target of reputation claims. An entity must always have a unique identifier, which is often a key from an external database.

- Source

- The source is the entity that made a reputation claim. Though sources are often users, there are several other common sources: input from other reputation models, customer care agents, log crawlers, anti-spam filters, page scrapers, third-party feeds, recommendation engines, and roll-ups (see Chap_2-Roll-ups).

- User [as Source]

- Users are probably the best-known source of reputation statements. A user represents the digital personification of a single person's interactions with a reputation system. Users are always formal entities, and they may have reputations attached to them, either as the source of those statements or as their target (see Chap_2-User_as_Target).

Reputation Claims: What is the target's value to the source? On what scale?

The claim is the value that was assigned in a statement by the source to the target. Each claim is of a particular score class and has a score value. Figure_2-1 shows a 5-star rating as a score class, and this particular reputation statement has the score value of 3 (stars).
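As a minimal sketch, assuming Python and field names of our own choosing (nothing here is a prescribed schema), the three particles of a reputation statement can be modeled as an immutable record. The identifiers `user:1234` and `restaurant:5678` are hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class Claim:
    score_class: str                 # how to interpret the value, e.g. a 5-star rating
    value: Union[int, float, str]    # numeric score, or text for qualitative claims


@dataclass(frozen=True)
class ReputationStatement:
    source: str    # unique identifier of the entity making the claim
    claim: Claim   # what the source asserts about the target
    target: str    # unique identifier of the entity the claim is about


# The statement from Figure_2-1: a source gives a target 3 of 5 stars.
# Both identifiers are hypothetical placeholders.
statement = ReputationStatement(
    source="user:1234",
    claim=Claim(score_class="5-star rating", value=3),
    target="restaurant:5678",
)
```

Making the record frozen reflects the idea that a statement records what a source once claimed; a change of opinion would be a new statement rather than an in-place edit.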

- Score Class

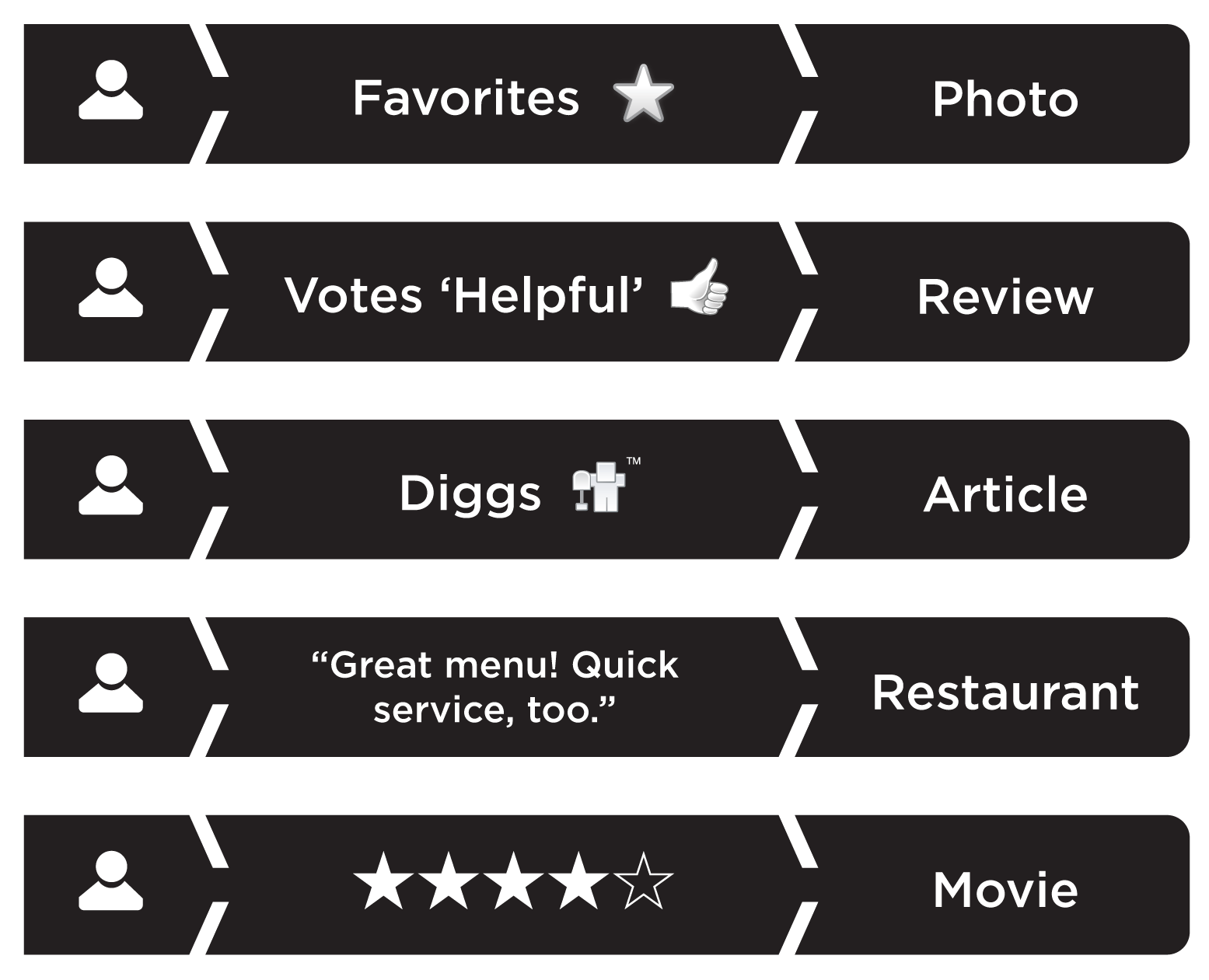

- What kind of score is this claim? Is it quantitative (numeric) or qualitative (free-form)? How should it be interpreted? What code class will be used to normalize, evaluate, store, and display this score? For a list, see Chap_2-Score_Classes and Figure_2-2.

- Quantitative or Numeric Claims

- Numeric or quantitative scores are what most people think of as reputation, even when they are displayed as letter grades, thumbs, stars, or percentage bars. Computers understand numbers, so most of a reputation system's complexity, and most of the detail in this book, surrounds managing these score classes. Examples of common numeric score classes are accumulators, votes, segmented scores (e.g., stars), enumerations, and roll-ups (see Chap_2-Roll-ups).

- Qualitative Claims

- Any reputation information that can't be readily parsed by software is called qualitative, yet it plays a critical role in helping people determine the value of the target. On a typical ratings & reviews site, for example, the text comment and the demographics of the review's author set important context for understanding the accompanying 5-star rating. Common qualitative scores include blocks of text, videos, URLs, photos, and author attributes.

- Raw Score

- The score is stored in raw form, as the source created it. Since normalization may cause some loss of precision, it is often desirable to keep the original value for computation.

- Normalized Score

- Numeric scores should be converted to a normalized scale, such as 0.0-1.0, in order to mix their values with others in a reputation model and to support de-normalizing them into another display score type. A normalized score is also often easier to read, since we're trained from our youth to understand the 0-100 range, onto which a 0.0-1.0 score maps directly as a percentage.
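A minimal sketch of normalizing and de-normalizing follows. It assumes a simple linear (min-max) mapping and a 1-to-5 star scale; both are illustrative choices, since some systems treat zero stars as a valid rating and would map 3 stars to 0.6 instead. The raw value is kept alongside the normalized one, as the Raw Score definition recommends.

```python
def normalize(raw: float, low: float, high: float) -> float:
    """Linearly map a raw score on the scale [low, high] onto 0.0-1.0."""
    if high <= low:
        raise ValueError("high must be greater than low")
    return (raw - low) / (high - low)


def denormalize(normalized: float, low: float, high: float) -> float:
    """Map a 0.0-1.0 score back onto a display scale [low, high]."""
    return low + normalized * (high - low)


raw_stars = 3                                   # keep the raw value as the source created it
normalized = normalize(raw_stars, 1, 5)         # 0.5 on the shared 0.0-1.0 scale
as_percent = round(normalized * 100)            # 50, the familiar 0-100 reading
as_ten_point = denormalize(normalized, 1, 10)   # 5.5 on a hypothetical 1-10 display scale
```

Because every numeric input lands on the same 0.0-1.0 range, a 3-star rating and, say, a thumbs-up encoded as 1.0 can be combined in one model and then re-displayed in whatever form the interface needs.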

Reputation Targets: What (or who) is the focus of a claim?

Reputation statements are always focused on some unique, identifiable entity: the target of the claim. When the reputation database is queried, the target identifier is usually specified when the detail for the target entity in question, say a new eatery, is displayed: “Yahoo! users rated Chipotle Restaurant 4 out of 5 stars for service.” The target is left unspecified (or only partially specified) in database requests based on claims or sources: “What is the best Mexican restaurant near here?” or “What are the ratings that Lara gave for restaurants?”
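The two query styles can be sketched over a plain in-memory list, assuming a hypothetical flat `Statement` record and a `query` helper of our own invention; a real reputation database would index these fields rather than scan a list. The location part of “near here” is omitted.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Statement:
    source: str
    score_class: str
    value: float
    target: str


def query(db: List[Statement], *, source: Optional[str] = None,
          target: Optional[str] = None,
          score_class: Optional[str] = None) -> List[Statement]:
    """Return the statements matching every criterion that was supplied."""
    return [s for s in db
            if (source is None or s.source == source)
            and (target is None or s.target == target)
            and (score_class is None or s.score_class == score_class)]


# Hypothetical sample data.
db = [
    Statement("lara", "service-stars", 4, "chipotle"),
    Statement("lara", "service-stars", 2, "taqueria"),
    Statement("sam", "service-stars", 5, "chipotle"),
]

# Target specified: the detail page for one eatery.
chipotle = query(db, target="chipotle")
# Target left unspecified: "What are the ratings that Lara gave?"
laras = query(db, source="lara")
```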

- Target aka Reputable Entity

- Any entity that is the target of reputation claims. Examples of reputable entities include users, movies, products, blog posts, videos, tags, guilds, companies, and IP addresses. Even other reputation statements, such as movie reviews, can be reputable entities when people evaluate them as helpful or not.

- User as Target aka Karma

- When a user is the reputable entity targeted by a claim, we call that karma. Care must be taken when generating karma, as there is a real, living, breathing, feeling, and thinking human being at the business end of these scores. Karma has many uses; most are corporate and simple, but the better-known ones, like eBay feedback scores, are public and complex. See Chap_8-Displaying_Karma.

- Reputation Statement as Target

- Reputation statements themselves are commonly the target of other reputation statements that refer to them explicitly. See Chap_2-Containers_and_Targets for a full discussion.

Molecules: Constructing Reputation Models using Messages and Processes

Just as molecules are often made up of many different atoms in various combinations to produce materials with unique and valuable qualities, reputation models draw their power from aggregating reputation statements from many sources, often of differing types. Instead of concerning ourselves with valence and Van der Waals forces, in reputation models we bind the atomic units, the reputation statements, together with messages and processes. Messages are represented by arrows and flow in the direction indicated. The boxes contain descriptions of the processes that interpret the activating message to update a reputation statement and/or send one or more messages on to other processes. As in chemistry, the entire process is simultaneous: messages can arrive at any time and may take different paths through a complex reputation model at the same time.

- Reputation Model

- A reputation model describes all of the reputation statements, events, and processes for a particular context. Usually, this is for a single type of reputable entity.

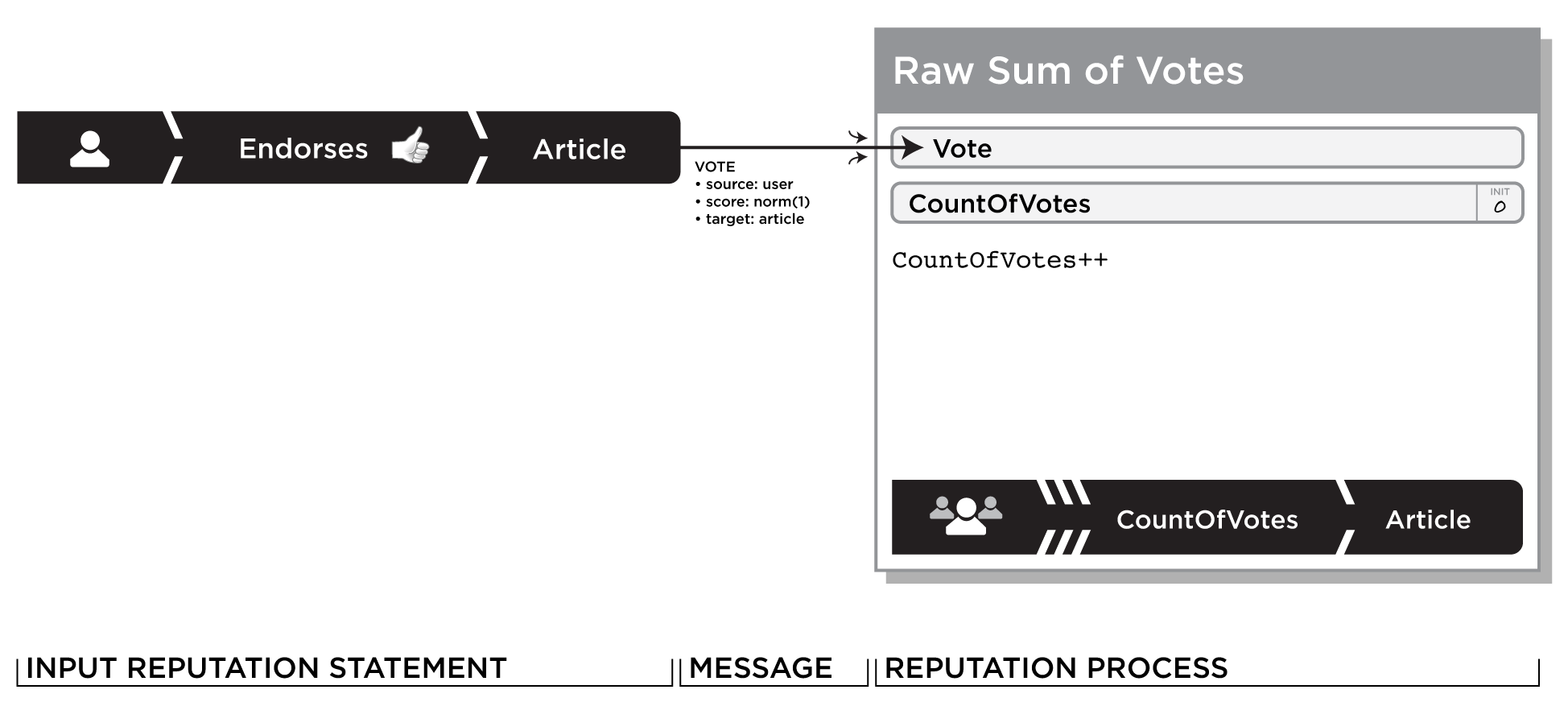

Yahoo! Local, Travel, Movies, TV, etc. are all examples of Ratings and Reviews reputation models. eBay's seller feedback model, in which users rate transactions and those ratings are reflected on the seller's profile, is a Karma reputation model. The example in Figure_2-3 is one of the simplest models possible and was inspired by the digg it vote-to-promote reputation model (see Chapter_7) made popular at Digg.com.

- Reputation Context

- The reputation context is the category of relevance for a specific reputation. By definition, this limits the reuse of the reputation to related contexts. A high ranking in Yahoo! Chess doesn't really tell you anything about whether you should buy something from that user on eBay, but it might tell you something about how committed they are to board game tournaments. See Chap_1-Always_Contextual and Chap_1-FICO for a deeper consideration of the limiting effects of context.

Messages & Processes

- Reputation Message

- Represented by the flow lines in our reputation model diagrams, reputation messages carry information to a reputation process for some sort of computational action. To encourage process reuse, these are often in the form of a reputation statement (a source, a normalized claim score, and a target identifier), but that is not a requirement, and sometimes supplemental information is needed. These messages may come from other processes, explicit user action, or external autonomous software. Don't confuse the message's sender with the reputation source; they are most often unrelated.

- Input Event [a reputation message]

- As a matter of convention, the initial messages, those that start the execution flow of a reputation model, are called input events and are shown at the start of the model diagram: on the left side of landscape-oriented diagrams or at the top of portrait-oriented ones. Input events are said to be transient when there is no need for the reputation message to be undone or referenced in the future. Transient input events need not be stored. If an input event may need to be displayed or reversed in the future, as is often the case when users abuse a reputation model, it must be stored, either in an external file such as a log or as a stored reputation value. Most user-generated rating and review models do this anyway, but very large-scale systems, such as email spammer reputation systems, can't afford to store a separate input event for every received email.

- Reputation Process

- Messages are delivered to one or more reputation processes, which are represented by the large boxes in reputation model diagrams. These processes normalize, transform, store, decide how to route new messages, or, most often, calculate something using the message parameters. In short, they contain the bulk of the code and are what make the model unique. See Chapter_3 for more detail on code modularity in an actual implementation.

- Stored Reputation Value

- Many reputation processes use message input to transform a reputation statement. In our example in Figure_2-3, when a user clicks on “I Digg this URL”, the application sends the input event to a reputation process that is a simple counter. The counter's stored reputation value, in this case in the form of a reputation statement, is loaded, incremented by one, and stored again. At this point, the reputation database can be queried by the target identifier (URL) to get the score.

- Roll-ups

- This book talks about a specific kind of stored reputation value we call the roll-up: the generic term for any aggregated reputation score that takes multiple inputs over time or from multiple processes. Simple Average and Simple Accumulator are examples of roll-ups.
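To make the difference between transient and stored input events concrete, here is a minimal sketch of a Simple Average roll-up that keeps each source's latest input. The class, its method names, and the rule that a re-rating replaces the earlier one are our own assumptions. Keeping the inputs costs storage, but it is what makes reversing an abusive contribution possible.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class SimpleAverage:
    """Roll-up that averages normalized inputs about a single target.

    Each source's latest input event is kept (not transient), so a
    contribution can later be reversed, for example after abuse.
    """
    total: float = 0.0
    count: int = 0
    inputs: Dict[str, float] = field(default_factory=dict)  # source -> value

    @property
    def score(self) -> float:
        return self.total / self.count if self.count else 0.0

    def add(self, source: str, value: float) -> None:
        if source in self.inputs:      # assumption: a re-rating replaces the old one
            self.remove(source)
        self.inputs[source] = value
        self.total += value
        self.count += 1

    def remove(self, source: str) -> None:
        value = self.inputs.pop(source)  # raises KeyError if the source never rated
        self.total -= value
        self.count -= 1


avg = SimpleAverage()
avg.add("ann", 1.0)
avg.add("bo", 0.5)
avg.add("spammer", 0.0)
print(avg.score)       # 0.5
avg.remove("spammer")  # reversal is possible only because the input was stored
print(avg.score)       # 0.75
```

A system that treated these inputs as transient would keep only `total` and `count`, which is cheaper but leaves no way to tell which source contributed what.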

Figure_2-3 is the first of many reputation model diagrams that appear in this book. Each will have an accompanying descriptive section explaining each element in detail.

This model is called the Simple Accumulator: it counts votes for a target object. It could be counting click-throughs, thumbs-ups, or the number of times an item was marked as a favorite.

Though most models have multiple input messages, this one has only one, in the form of a single reputation statement:

- As users take actions, they cause Votes to be recorded and start the reputation model by sending them as messages (represented by the arrows) to the Raw Sum of Votes process.

Likewise, while models typically have many processes, this example has only one:

- Raw Sum of Votes: When Vote messages arrive, the CountOfVotes counter is incremented and stored in a reputation statement with the claim type Counter, set to the value of CountOfVotes, and with the same target as the originating Vote. The source for this statement is said to be aggregate, because it is a roll-up: the product of many inputs from many different sources.
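The Raw Sum of Votes process can be sketched as a load, increment, store cycle. This assumes a hypothetical in-memory dictionary standing in for the reputation database and calls the process directly rather than through a message-routing layer.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class RollUp:
    target: str                  # same target as the originating Vote
    claim_type: str = "Counter"
    value: int = 0               # CountOfVotes
    source: str = "aggregate"    # a roll-up is the product of many sources


# Hypothetical in-memory stand-in for the reputation database, keyed by target.
store: Dict[str, RollUp] = {}


def raw_sum_of_votes(voter: str, target: str) -> RollUp:
    """Handle one Vote message: load, increment, and store CountOfVotes.

    The voter is the source of the input Vote, but not of the roll-up,
    whose source is "aggregate".
    """
    statement = store.get(target, RollUp(target))  # load (or create) the roll-up
    statement.value += 1                           # increment by one
    store[target] = statement                      # store it again
    return statement


for voter in ["ann", "bo", "cy"]:
    raw_sum_of_votes(voter, "http://example.com/story")
print(store["http://example.com/story"].value)  # 3
```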

See Chapter_4 for a detailed list of common reputation process patterns and Chapter_7 and Chapter_8 for UI effects of various score classes.

Building on the Simplest Model

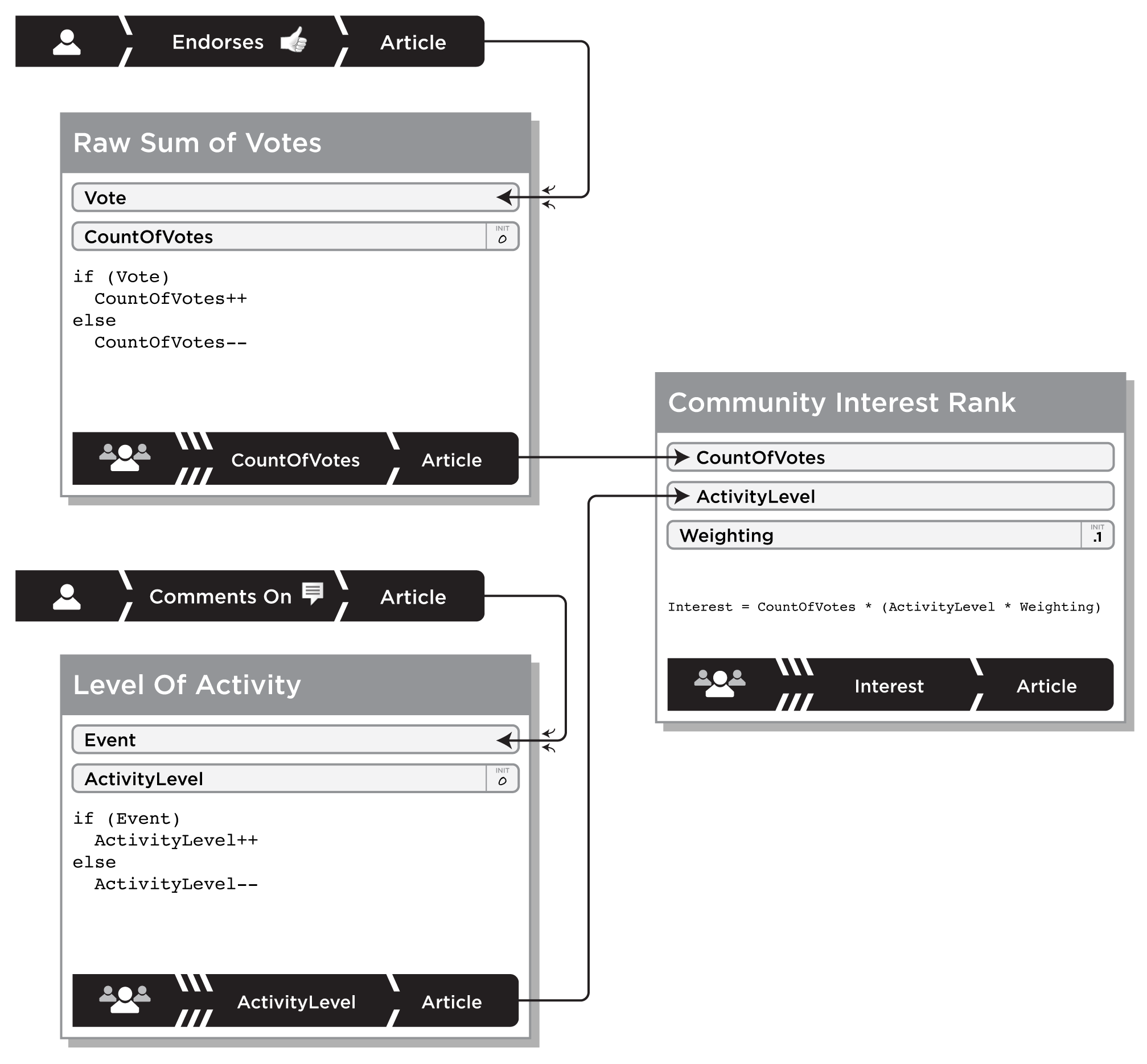

Figure_2-4 shows a fuller representation of a Digg.com-like vote-to-promote reputation model. This example determines community interest in an article by tabulating the number of votes it receives, along with its level of activity as measured by the number of comments users leave about it.

The input messages are in the form of two reputation statements:

- A User Endorses an Article: a thumbs-up vote, represented as a 1.0 score if issued and a 0.0 score if withdrawn. This message is sent to the Raw Sum of Votes process.

- A User Comments On an Article: an activity indicator, represented as a 1.0 score if the user added a comment or a 0.0 score if they deleted one. This message is sent to the Level of Activity process.

The reputation processes in this model are:

- Raw Sum of Votes: This process either increments (if the input is 1.0) or decrements a roll-up reputation statement containing a simple accumulator called CountOfVotes. It stores the new value back into the statement and sends it in a message to the Community Interest Rank process.

- Level of Activity: This process either increments (if the input is 1.0) or decrements a roll-up reputation statement containing a simple accumulator called ActivityLevel. It stores the new value back into the statement and sends it in a message to the Community Interest Rank process.

- Community Interest Rank: This process always recalculates a roll-up reputation statement containing a weighted sum called Interest, which is the value that the application will use to rank the target article in search results and in other page displays. The calculation uses a local constant, Weighting, to combine the CountOfVotes and ActivityLevel scores disproportionately; in this example, an Endorsement is worth 10 times the interest score of a single comment. The resulting Interest score is stored in a typical roll-up reputation statement: aggregate source, numeric score, and the target shared by all of the inputs.
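Here is a sketch of the three processes. The text says only that Interest is a weighted sum in which an Endorsement is worth 10 times a comment, so the formula Interest = Weighting * CountOfVotes + ActivityLevel, with Weighting = 10, is our assumption. Dictionaries keyed by article stand in for stored roll-up statements, and "sending a message" is modeled as a direct function call.

```python
from typing import Dict

WEIGHTING = 10.0   # assumption: one endorsement is worth ten comments

# Hypothetical per-article roll-ups.
count_of_votes: Dict[str, float] = {}
activity_level: Dict[str, float] = {}
interest: Dict[str, float] = {}


def community_interest_rank(article: str) -> float:
    """Always recalculate Interest from the two current accumulators."""
    interest[article] = (WEIGHTING * count_of_votes.get(article, 0.0)
                         + activity_level.get(article, 0.0))
    return interest[article]


def raw_sum_of_votes(article: str, endorsement: float) -> float:
    """1.0 = vote issued (increment); 0.0 = vote withdrawn (decrement)."""
    delta = 1.0 if endorsement == 1.0 else -1.0
    count_of_votes[article] = count_of_votes.get(article, 0.0) + delta
    return community_interest_rank(article)


def level_of_activity(article: str, comment: float) -> float:
    """1.0 = comment added (increment); 0.0 = comment deleted (decrement)."""
    delta = 1.0 if comment == 1.0 else -1.0
    activity_level[article] = activity_level.get(article, 0.0) + delta
    return community_interest_rank(article)


raw_sum_of_votes("a1", 1.0)     # Interest 10.0
raw_sum_of_votes("a1", 1.0)     # Interest 20.0
for _ in range(3):
    level_of_activity("a1", 1.0)  # Interest 21.0, 22.0, 23.0
raw_sum_of_votes("a1", 0.0)     # one vote withdrawn: Interest 13.0
```

Recalculating Interest from both accumulators on every message, rather than adjusting it incrementally, keeps the weighted sum consistent no matter which input arrives first.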

Complex Behavior: Containers and Reputation Statements as Targets

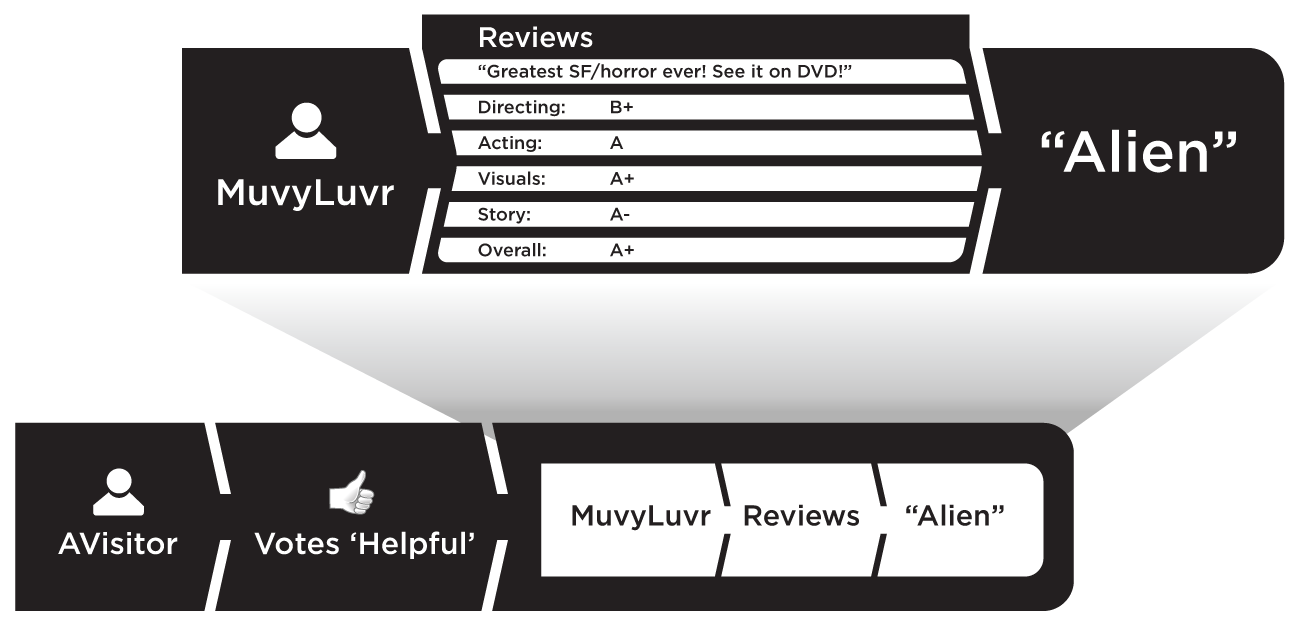

Just as nature has some interesting-looking molecules, and much as hydrogen bonds are especially strong, there are special types of reputation statements, called containers, that join multiple closely related statements into one super-statement. A common example appears on most product and service websites with user-written ratings and comments: a number of different star ratings for a restaurant are clumped together with a text comment into an object formally called a review. See Figure_2-5 for a typical example.

Containers are useful devices for organizing reputation statements. While it's technically true that each individual component of the container could be represented and addressed as a statement of its own, doing so would be semantically sloppy and would lead to unnecessary complexity in your model. Containers also map well to real life. We typically don't think of Zary22's series of statements about Dessert Hut as a rapid-fire stream of individual opinions; we consider them related and influenced by each other. Taken as a whole, they form Zary22's review of his experience.
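A container can be sketched as one record holding several named claims that share a single source and target. The claim names and values below are hypothetical. The `as_statements` helper shows that each claim could still be flattened into an individual statement, which is exactly the representation the container lets us avoid in the model.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple, Union

Value = Union[int, float, str]


@dataclass
class Review:
    """Container: several claims that share one source and one target."""
    source: str
    target: str
    claims: Dict[str, Value] = field(default_factory=dict)

    def as_statements(self) -> List[Tuple[str, str, Value, str]]:
        """Flatten into individual (source, claim name, value, target) statements."""
        return [(self.source, name, value, self.target)
                for name, value in self.claims.items()]


review = Review(
    source="Zary22",
    target="Dessert Hut",
    claims={"food_stars": 4, "service_stars": 3, "comment": "Great pie, slow service."},
)
```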

<note>A container is a compound reputation statement with multiple claims all for the same source and target.

Once a reputation statement exists in your system, you might opt to make it a reputable entity itself, as in Figure_2-6. This should seem perfectly natural: in real life, people don't just formulate opinions, right? Quite often, other people form opinions about those opinions! (“Well, Jack hated The Dark Knight, but he and I never see eye-to-eye anyway.”)

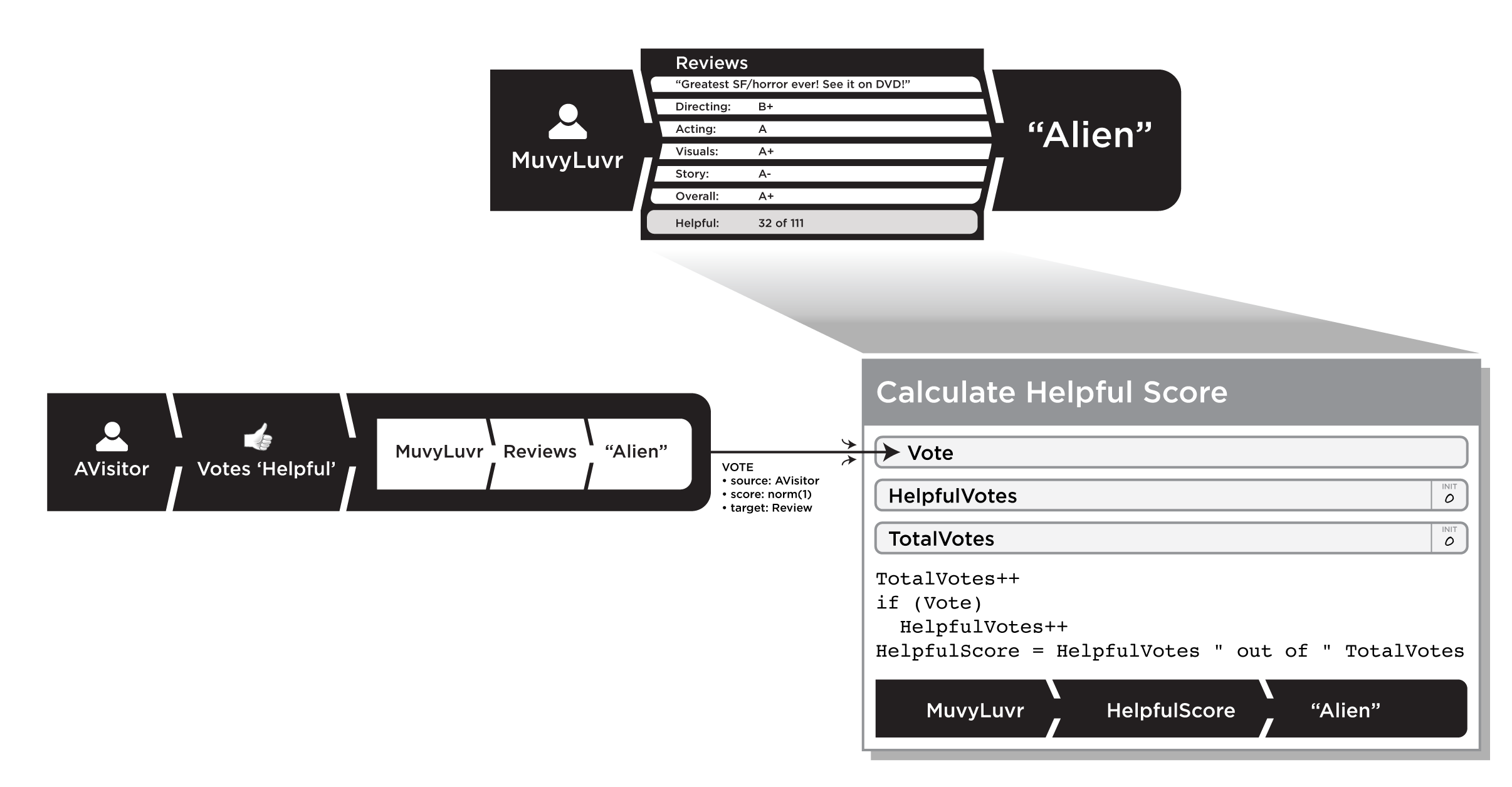

Review-based reputation systems have another feature: they often implement a form of built-in user feedback on reviews written by other users. We'll call this the Was this Helpful? pattern (see Figure_2-7). When a reader clicks thumbs-up or thumbs-down to state whether a review was helpful, the target is a review (container) written earlier by a different user.

The input message is in the form of a single reputation statement:

- A User Votes on the quality of another reputation statement, in this case a review: a thumbs-up vote is represented by a 1.0 value, and a thumbs-down by a 0.0 value.

There is only one reputation process in this model:

- Calculate Helpful Score: When the message arrives, the TotalVotes counter is incremented. If the Vote is non-zero, the process increments HelpfulVotes. Finally, HelpfulScore is set to a text representation of the score suitable for display: “HelpfulVotes out of TotalVotes”. This representation is usually stored in the very same review container that the voter was judging (had targeted) as helpful. This is done to simplify indexing and retrieval, as in “Retrieve a list of the most helpful movie reviews by MuvyLuvr” and “Sort the list of movie reviews of Aliens by helpful score.” Though the review's writer isn't the author of its helpful votes, the review is responsible for them and should contain them.
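The Calculate Helpful Score process can be sketched as follows, assuming a hypothetical `ReviewContainer` record whose fields mirror TotalVotes, HelpfulVotes, and HelpfulScore. The counts and the display string are stored in the review itself, as the text describes.

```python
from dataclasses import dataclass


@dataclass
class ReviewContainer:
    author: str
    target: str
    total_votes: int = 0       # TotalVotes
    helpful_votes: int = 0     # HelpfulVotes
    helpful_score: str = ""    # HelpfulScore: display text stored in the review


def calculate_helpful_score(review: ReviewContainer, vote: float) -> None:
    """Handle one 'Was this Helpful?' vote: 1.0 means yes, 0.0 means no."""
    review.total_votes += 1
    if vote != 0.0:
        review.helpful_votes += 1
    review.helpful_score = f"{review.helpful_votes} out of {review.total_votes}"


review = ReviewContainer(author="MuvyLuvr", target="Aliens")
for vote in (1.0, 0.0, 1.0):
    calculate_helpful_score(review, vote)
print(review.helpful_score)  # 2 out of 3
```

Because the display string does not sort numerically, an application sorting reviews by helpfulness would more likely use the stored counts, for example the ratio `helpful_votes / total_votes`.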

You'll see variations on this simple pattern of reputation-statements-as-targets repeated throughout the book. It allows us to build some fairly advanced meta-moderation capabilities into our reputation systems. Now you can ask the community not only “What's good?” but also “… and who do you believe?”

Solutions: Mixing Models to make Systems - adding Karma

For our last chemistry metaphor, consider that physical materials are rarely made out of a single type of molecule. We combine molecules into solutions, compounds, and mixtures to get the exact properties we want. But not all substances mix well (consider oil and water), and the same is true for combining multiple reputation model contexts into a single complex reputation system. Care must be taken that the reputation contexts of the models are compatible.

- Reputation System

- A reputation system is a set of one or more interacting reputation models. For example, SlashDot.com combines two reputation models: an entity reputation model of users' evaluations of individual message board postings, and a Karma reputation model for determining how many moderation opportunities users are granted to evaluate posts. It is a “the best users have the most control” reputation system. See Figure_2-8.

- Reputation Sandbox

- The reputation sandbox is the execution environment for one or more reputation systems. It handles message routing, storing and retrieving statements, and maintaining and executing the model processes. A specific implementation is described in Chapter_3.
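As a toy illustration of a sandbox's responsibilities (this is not the implementation described in Chapter_3), the class below routes named messages to registered processes and stores statement values keyed by claim and target. It assumes synchronous, in-order delivery; all names are hypothetical.

```python
from typing import Any, Callable, Dict, List, Tuple

Message = Dict[str, Any]
Process = Callable[..., None]   # called as process(sandbox, message)


class Sandbox:
    """Toy reputation sandbox: routes messages and stores statements.

    Routing here is synchronous and in-order; a production sandbox may
    be asynchronous and distributed.
    """

    def __init__(self) -> None:
        self.routes: Dict[str, List[Process]] = {}
        self.statements: Dict[Tuple[str, str], float] = {}  # (claim, target) -> value

    def register(self, message_type: str, process: Process) -> None:
        self.routes.setdefault(message_type, []).append(process)

    def send(self, message_type: str, message: Message) -> None:
        for process in self.routes.get(message_type, []):
            process(self, message)

    def load(self, claim: str, target: str, default: float = 0.0) -> float:
        return self.statements.get((claim, target), default)

    def store(self, claim: str, target: str, value: float) -> None:
        self.statements[(claim, target)] = value


def count_votes(sandbox: Sandbox, message: Message) -> None:
    """A Simple Accumulator process registered with the sandbox."""
    target = message["target"]
    sandbox.store("CountOfVotes", target, sandbox.load("CountOfVotes", target) + 1)


sandbox = Sandbox()
sandbox.register("Vote", count_votes)
sandbox.send("Vote", {"source": "ann", "target": "url-1"})
sandbox.send("Vote", {"source": "bo", "target": "url-1"})
print(sandbox.load("CountOfVotes", "url-1"))  # 2.0
```

Keeping routing and storage in the sandbox leaves each process as a small function of its input message, which is the kind of reuse the message conventions above aim for.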

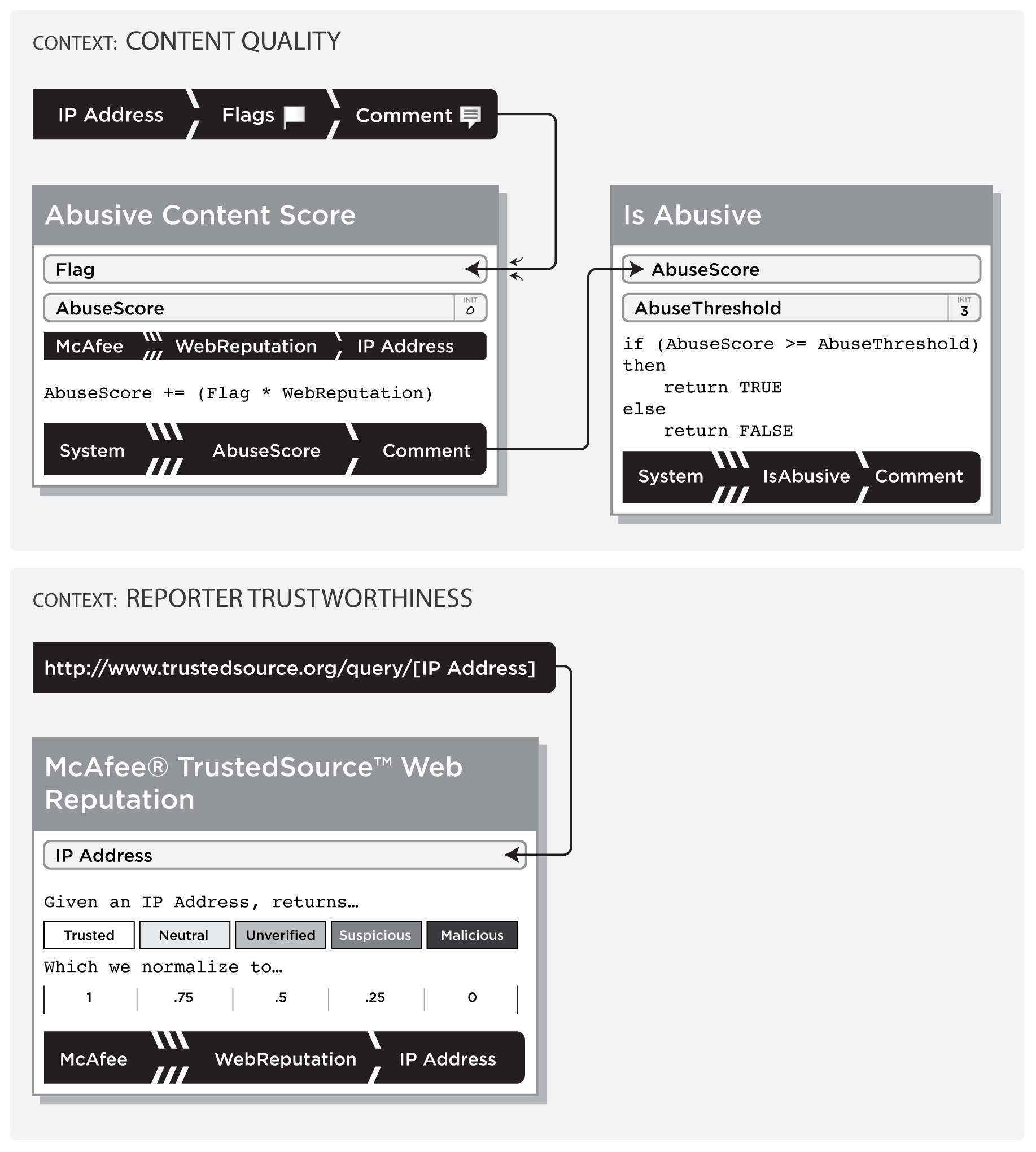

Figure_2-8 shows a simple abuse reporting system that integrates two different reputation models: a Weighted Voting model, and an external karma system that provides the weights for the IP addresses of the abuse reporters. This example also illustrates an explicit output, common in many implementations. In this case, the output is an event sent to the application environment suggesting that the target comment should be dealt with.

For the Reporter Trustworthiness context, the entire reputation model is opaque to our system because it runs on a foreign service, namely TrustedSource.org by McAfee, Inc. There is only one input, and it is a bit different from those in previous examples:

- When the reputation system decides (perhaps by periodic timer, or on demand by external means) that it needs to request a new trust score for a particular IP address, it retrieves the TrustedSourceReputation as input using the web service API, here represented as a URL. The result is one of these categories: Trusted, Neutral, Unverified, Suspicious, or Malicious, which is passed to the Normalize IPTrustScore process.

This process transforms the external IP reputation into the reputation system's normalized range:

- Normalize IPTrustScore: A transformation table is used to normalize the TrustedSourceReputation into WebReputation, with a range from 0.0 (no trust) to 1.0 (maximum trust). This is stored in a reputation statement with the source TrustedSource.org, the claim type Simple Karma with the score WebReputation, and the IP address as the target.
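The transformation table can be sketched as a dictionary lookup. The five category names come from the text, but the numeric values are illustrative assumptions, as is the statement layout. The sketch does not call TrustedSource.org; it assumes the category string has already been retrieved. The IP address is from a documentation-only range.

```python
from typing import Dict

# Hypothetical transformation table: the category names come from the text,
# but the numeric values are illustrative assumptions.
TRUST_TABLE: Dict[str, float] = {
    "Trusted": 1.0,
    "Neutral": 0.75,
    "Unverified": 0.5,
    "Suspicious": 0.25,
    "Malicious": 0.0,
}


def normalize_ip_trust_score(ip_address: str, category: str) -> Dict[str, object]:
    """Turn an external TrustedSourceReputation category into WebReputation."""
    web_reputation = TRUST_TABLE[category]   # KeyError on an unknown category
    return {
        "source": "TrustedSource.org",
        "claim_type": "Simple Karma",
        "score": web_reputation,
        "target": ip_address,
    }


statement = normalize_ip_trust_score("192.0.2.10", "Suspicious")
```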

The main context of this reputation system is Content Quality, which is designed to collect flags from users whenever they think that the comment in question violates the site's Terms of Service. When enough users whose IP addresses carry a high enough web reputation flag the content, a special event is sent out of the reputation system. This is a Weighted Voting reputation model.

There is one input for this model:

- Input: A user, connected using a specific IP address, Flags a target comment as violating the site's Terms of Service. The value of the flag is always 1.0, and it is sent to the Abusive Content Score process.

There are two processes for this model, one to accumulate the total abuse score, and another to decide when to alert the outer application:

- The Abusive Content Score process uses one external variable: WebReputation, which is stored in a reputation statement with the same target IP address as was provided with the flag input message. The AbuseScore starts at 0 and is increased by the value of Flag multiplied by WebReputation. It is stored back into a reputation statement with an aggregate source, numeric score type, and the comment identifier as the target. This statement is passed in a message to the Is Abusive? process.

- Is Abusive? then tests the AbuseScore against an internal constant, AbuseThreshold, to decide whether it needs to inform the application that the target comment requires special attention. In reputation sandbox implementations that wait for execution to complete, the result is returned as TRUE or FALSE, indicating whether the comment is considered abusive. For high-performance, optimistic (or fire-and-forget) reputation platforms, like the one described in Chapter_3, an asynchronous alert is triggered only when the result is TRUE.
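Both processes can be sketched together. The threshold value, the `>=` comparison, and the rule that an IP address with no stored WebReputation contributes nothing are all assumptions. The `on_flag` helper shows both result styles: it returns TRUE or FALSE for callers that wait, and calls an alert callback only when the result is TRUE, as a fire-and-forget platform would.

```python
from typing import Callable, Dict, List

ABUSE_THRESHOLD = 2.0   # hypothetical AbuseThreshold value

web_reputation: Dict[str, float] = {}   # IP address -> WebReputation (0.0-1.0)
abuse_score: Dict[str, float] = {}      # comment id -> AbuseScore


def abusive_content_score(ip_address: str, comment_id: str, flag: float = 1.0) -> float:
    """Add Flag * WebReputation of the reporter's IP to the comment's AbuseScore."""
    weight = web_reputation.get(ip_address, 0.0)   # assumption: unknown IPs count for nothing
    abuse_score[comment_id] = abuse_score.get(comment_id, 0.0) + flag * weight
    return abuse_score[comment_id]


def is_abusive(score: float) -> bool:
    return score >= ABUSE_THRESHOLD   # assumption: reaching the threshold counts


def on_flag(ip_address: str, comment_id: str, alert: Callable[[str], None]) -> bool:
    result = is_abusive(abusive_content_score(ip_address, comment_id))
    if result:
        alert(comment_id)   # fire-and-forget style: signal only when TRUE
    return result           # wait-for-completion style: return TRUE or FALSE


web_reputation.update({"192.0.2.1": 1.0, "192.0.2.2": 0.75,
                       "192.0.2.3": 0.5, "198.51.100.7": 0.0})
alerts: List[str] = []
for ip in ["192.0.2.1", "192.0.2.2", "198.51.100.7", "192.0.2.3"]:
    on_flag(ip, "comment-42", alerts.append)
print(abuse_score["comment-42"], alerts)  # 2.25 ['comment-42']
```

Note that the flag from the zero-reputation address adds nothing, which is the point of weighting votes by reporter trust. As written, every further flag after the threshold is crossed would raise another alert; a real system would likely remember that the comment has already been reported.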

With the introduction of multiple models, external variables, and results handling, our basic modeling grammar is complete.

If you'd like to dive right into real-world examples of models, peek ahead to Chapter_5. If you're more technically inclined and want to know how to implement reputation sandboxes and how to deal with issues such as reliability, reversibility, and scale, continue on to Chapter_3. Otherwise, move on to Chapter_4, where we cover in more detail how to choose the reputation components and models that are right for your specific application.